Introduction

What this essay contains

Classical mechanics, electrodynamics, and thermodynamics are all conceptually simple, but settled. Things are less clear with statistical mechanics. To say it kindly, it is a lively field of research. To say it unkindly, it is a field whose very conceptual foundation is in doubt. The main problem is not with quantum mechanics, as quantum statistical mechanics works very well, but with how to handle systems far-from-equilibrium. Fortunately, as long as we stick to the (near-)equilibrium parts, then it is mostly settled, and so this is how this essay is going to be written about. We will avoid quantum because that deserves its own entire essay, and avoid far-from-equilibrium because it is unsettled. What we can present is still a beautifully precise mathematical toolbox with surprisingly wide applications.

Statistical mechanics is technically independent of thermodynamics, but it is strongly related. You can acquire the basic skills in thermodynamics by working through the first half of my previous essay Classical Thermodynamics and Economics.

About half of the essay is taken up with calculations. The theoretical core of statistical mechanics is small, and most of the skills are in applying it to actual systems. Therefore, a lot of worked-through examples are necessary. I have tried to make them flow well and skimmable.

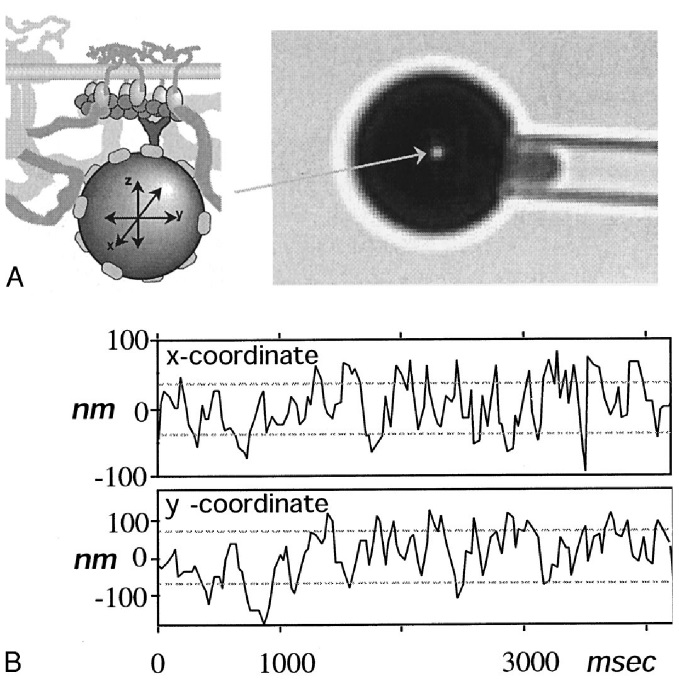

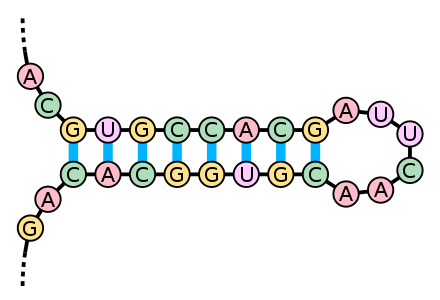

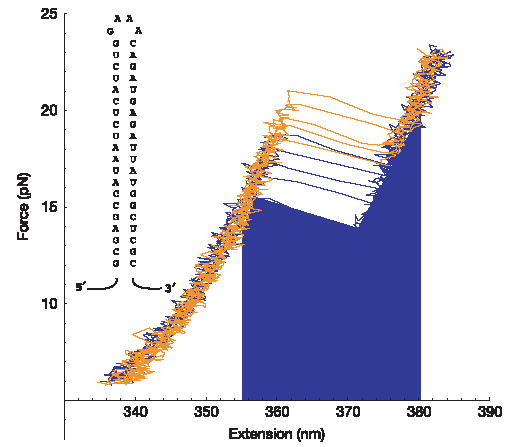

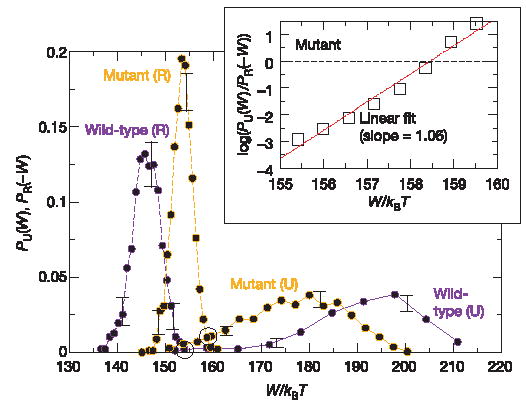

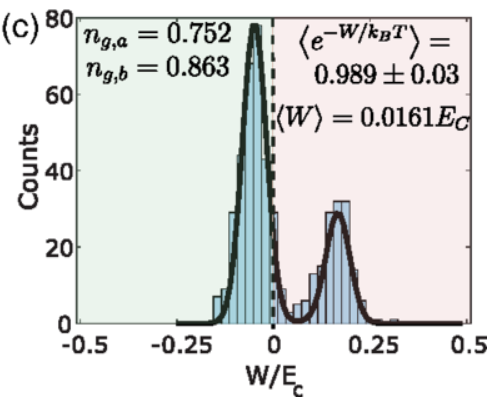

The essay contains: entropy, free entropies, partition function, about 12 useful theorems, fluctuation-dissipation relations, maximal caliber, Crooks fluctuation theorem, Jarzynski equality, rubber bands, kinetic gas theory, van der Waals law, blackbody radiation, combinatorics, chi-squared test, large deviation theory, bacteria hunting, unzipping RNA hairpins, Arrhenius equation, martingales, Maxwell’s demon, Laplace’s demon.

It does not contain: quantum statistical mechanics, nonequilibrium statistical mechanics, linear response theory, Onsager reciprocal relations, statistical field theory, phase transitions, stochastic processes, Langevin equation, diffusion theory, Fokker–Planck equation, Feynman–Kac formula, Brownian ratchets.

The prerequisites are thermodynamics, multivariate calculus, probability, combinatorics, and mathematical maturity. It’s good to be familiar with biology and the basics of random walk as well.

Quick reference

- \(D_{KL}\): Kullback–Leibler divergence.

- \(S[\rho]\): entropy of probability distribution \(\rho\).

- \(S^*\): maximal entropy under constraints.

- \(f[\rho]\): Helmholtz free entropy of probability distribution \(\rho\).

- \(f^*_{X|y}\): maximal Helmholtz free entropy under the constraint that \(Y = y\).

- \(Z\): the partition function.

- \(\beta\): inverse temperature.

- \(N\): number of particles, or some other quantity that can get very large.

- \(n\): number of dimensions, or some other quantity that is fixed.

- \(F\): Helmholtz free energy.

- \(\binom{m}{n}\): binomial coefficient.

- \(\braket{f(x, y) | z}_y\): The expectation of \(f\) where we fix \(x\), let \(y\) vary, and conditional on \(z\).

- \(\mathrm{Var}\): variance.

- \(\int (\cdots) D[x]\): path integral where \(x\) varies over the space of all possible paths.

As usual, we set \(k_B = 1\), so that \(\beta = 1/T\), except when we need a numerical answer in SI units.

Further readings

Unfortunately, I never learned statistical mechanics from any textbook. I just understood things gradually on my own after trying to make sense of things. This means I cannot recommend any introductory textbook based on my personal experience.

- Books I did learn from:

- (Sethna 2021) shows how a modern statistical mechanist thinks. It is a rather eclectic book, because modern statistical mechanics is full of weird applications, from music theory to economics.1

- (Nelson 2003) teaches basic statistical thermodynamics in the context of biology, and (Howard C. Berg 1993) teaches random walks.

- (Ben-Naim 2008; Richard P. Feynman 1996) show how to combine (unify?) statistical mechanics with information theory.

- (Jaynes 2003) gives Jaynes’ entire philosophy of information, one application of which is his theory of why entropy is maximized in statistical mechanics.

- (Lemons, Shanahan, and Buchholtz 2022) closely follows the story of how Planck actually derived the blackbody radiation law. Reading it, you almost have the illusion that you too could have discovered what he discovered.

- (Penrose 2005) gives an elegant mathematical deduction that brings philosophers and mathematical logicians joy. However, it is not useful for applications.

- Books I did not learn from, but feel obliged to recommend:

- (Ma 1985; Tolman 1980; Richard P. Feynman 2018; Schrodinger 1989) are books that apparently every real physicist must read before they die. Like those other “1001 books you must read before you die”, I did not read them.

- (Nash 2006) is a concise introduction for chemistry students.

- (Sommerfeld 1950, vol. 5) is by the master, Sommerfeld. If you want to do 19th century style thermodynamics, then it is very good, but otherwise, I don’t know what this book is for.

1 The book has quite many buzzwords like “fractals”, “complexity”, “avalanche”, and “edge of chaos”, buzzy in the 1990s. A joke is that during the 1980s, as the Cold War was winding down, physicists were overproduced and underemployed, and had to find someway to get employed. Thus, they went into economics, social sciences, etc, resulting in the discipline of “econophysics”, the nebulous non-discipline of “complexity studies”, etc.

Overview

Philosophical comments

It is fair to say that, although it originated in the 19th century like all other classical fields of physics, statistical mechanics is unsettled.

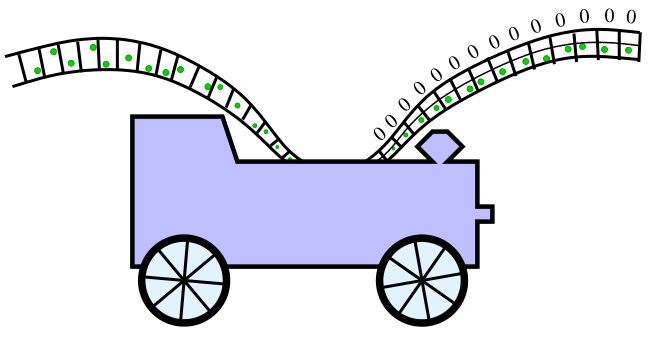

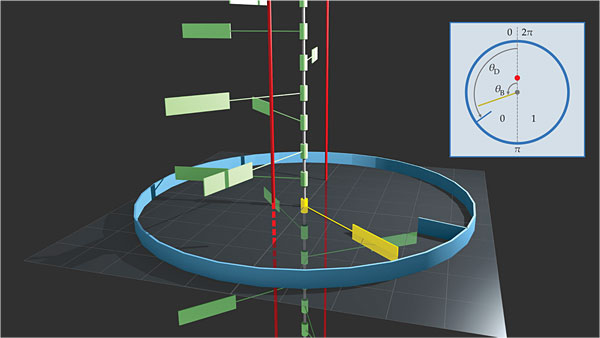

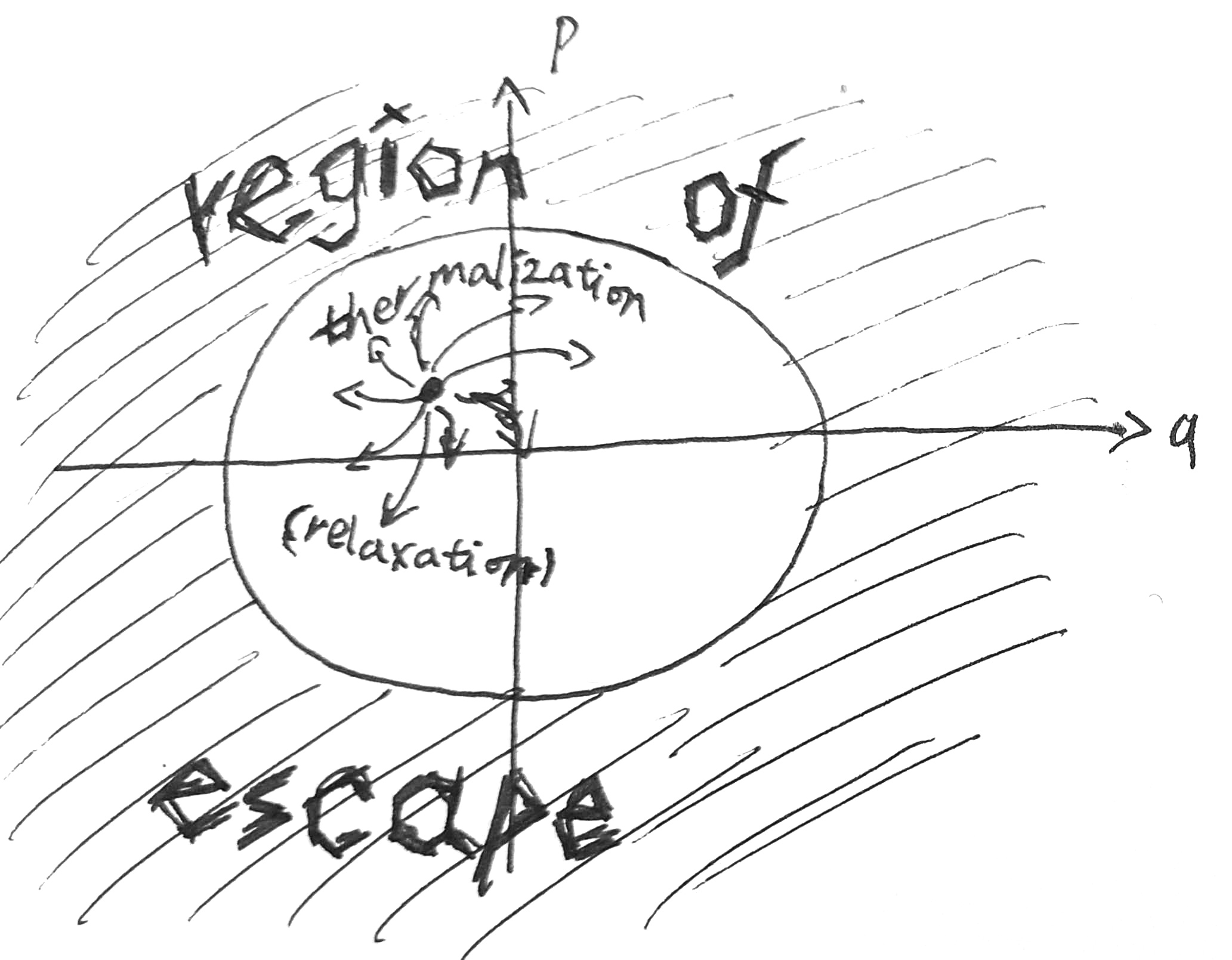

Trajectory-centric statistical mechanics. In this view, we start with the equations of motion for a physical system, then study statistical properties of individual trajectories, or collections of them. For example, if we have a pendulum hanging in air, being hit by air molecules all the time, we would study the total trajectory \((\theta, x_1, y_1, z_1, x_2, y_2, z_2, \dots)\), where \(\theta\) is the angle of the pendulum swing, and \((x_i, y_i, z_i)\) is the location of the \(i\)-th air molecule. Then we may ask that, over a long enough period, how frequent would the pendulum visit a certain angle range of \([\theta_0, \theta_0 + \delta\theta]\):

\[ Pr(\theta \in [\theta_0, \theta_0 + \delta\theta]) = \lim_{T \to \infty} \frac{1}{2T} \int_{-T}^{+T} 1[\theta \in [\theta_0, \theta_0 + \delta\theta]] dt \]

In the trajectory-centric view, there are the following issues:

- Problem of ergodicity: When does time-average equal ensemble-average? A system is called “ergodic” iff for almost all starting conditions, the time-average of the trajectory is the ensemble-average over all trajectories.

- Problem of entropy: How is entropy defined on a single trajectory?

- H-theorem: In what sense, and under what conditions, does entropy increase?

- Problem of equilibrium: What does it mean to say that a trajectory is in equilibrium?

- Approach to equilibrium: In what sense, and under what conditions, does the trajectory converge to an equilibrium?

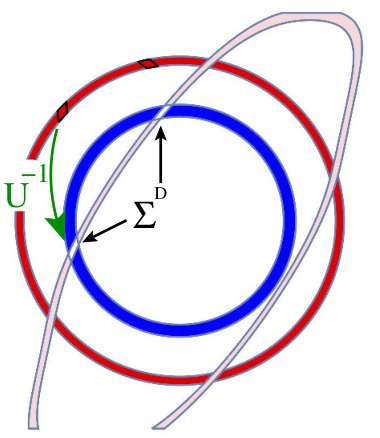

- Reversibility problem (Umkehreinwand): If individual trajectories are reversible, why does entropy increase instead of decrease?

While these philosophical problems are quite diverting, we will avoid them as much as possible, because we will be working with the ensemble-centric equilibrium statistical mechanics. This is the statistical mechanics that every working physicist uses, and this is what we will present. If you are interested in the philosophical issues, read the Stanford Encyclopedia entry on the Philosophy of Statistical Mechanics.

Principles of statistical mechanics

- A physical system is a classical system with a state space, evolving according to some equation of motion.

- An ensemble of that system is a probability distribution over its state space.

- The idea of (ensemble-centric) statistical mechanics is to study the evolution of an entire probability distribution over all possible states.

- The entropy of a probability distribution \(\rho\) is

\[S[\rho] := -\int dx\; \rho(x) \ln \rho(x)\]

- Under any constraint, there exists a unique ensemble, named the equilibrium ensemble, which maximizes entropy under constraint.

Most of the times, the state space is a phase space, and the equation of motion is described by a Hamiltonian function. However, the machinery of statistical mechanics, as given above, is purely mathematical. It can be used to study any problem in probability whatsoever, even those with no physical meaning.

Believe it or not, the above constitutes the entirety of equilibrium statistical mechanics. So far, it is a purely mathematical theory, with no falsifiability (Popperians shouting in the background). To make it falsifiable, we need to add one more assumption, necessarily fuzzy:2

2As far as the laws of mathematics refer to reality, they are not certain; and as far as they are certain, they do not refer to reality.

— Albert Einstein, Address to Prussian Academy of Sciences (1921)

- The equilibrium ensemble is physically meaningful and describes the observable behavior of physical systems.

In other words, when a physical system is at equilibrium, then everything observable can be found by studying it as if it has the maximum entropy distribution under constraint.

Of course, just what that “is physically meaningful” means, is another source of endless philosophical arguments. I would trust that you will know what is physically meaningful, and leave it at that, while those who have a taste for philosophy can grapple with the Duhem–Quine thesis.

Differential entropy depends on coordinates choice

There is a well-known secret among information theorists: differential entropy is ill-defined.

Consider the uniform distribution on \([0, 1]\). It is the maximal-entropy distribution on \([0, 1]\) – relative to the Lebesgue measure. However, why should we pick the Lebesgue measure, and what happens if we don’t?

Suppose we now stretch the \([0, 1]\) interval nonlinearly, by \(f(x) = x^2\), then the maximal-entropy distribution relative to that would no longer be the uniform distribution on \([0, 1]\). Instead, it would be the uniform distribution after stretching.

The problem is this: Differential entropy is not coordinate-free. If we change the coordinates, we change the base measure, and the differential entropy changes as well.

To fix this, we need to use the KL-divergence, which is invariant under a change of base measure, as in \[-D_{KL}(\rho \| \mu) := - \int dx\; \rho(x) \ln\frac{\rho(x)}{\mu(x)}\]

In typical situations, we don’t need to worry ourselves with KL-divergence, as we just pick the uniform distribution \(\mu\). When the state space is infinite in volume, the uniform distribution is not a probability measure, but it will work. Bayesians say that it is an improper prior.

In this interpretation, the principle of “maximum entropy distribution under constraint” becomes the principle of “minimal KL-divergence under constraint”, which is Bayesian inference, with exactly the same formulas.

In almost all cases, we use the uniform prior over phase space. This is how Gibbs did it, and he didn’t really justify it other than saying that it just works, and suggesting it has something to do with Liouville’s theorem. Now with a century of hindsight, we know that it works because of quantum mechanics: We should use the uniform prior over phase space, because phase space volume has a natural unit of measurement: \(h^N\), where \(h\) is Planck’s constant, and \(2N\) is the dimension of phase space. As Planck’s constant is a universal constant, independent of where we are in phase space, we should weight all of the phase space equally, resulting in a uniform prior.

Jaynes’ epistemological interpretation

The question at the foundation of statistical mechanics is: Why maximize entropy? The practical scientist would say, “Because it works.”, and that is well and good, but we will give one possible answer to the why, from the most ardent proponent of maximal entropy theory, E. T. Jaynes.

According to Jaynes, statistical mechanics is epistemological. That is, probability is not out there in the world, but in here in our minds, and statistical mechanics is nothing more than maximal entropy inference applied to physics. Macroscopic properties are evidences, and the ensemble \(\rho\) is the posterior after we incorporate the evidences.

It might be difficult to swallow Jaynes’ interpretation, as it seems obvious that entropy is objective, but epistemology is subjective. How could he explain objective entropy by subjective information? I might make this more palatable by three examples.

In (Jaynes 1992), he proposed the following thought experiment: Suppose we have a tank of neon gas, separated in the middle by a slab, as in the Gibbs paradox. If both sides have the same temperature and pressure, then the system has the same entropy even after we remove the slab. But suddenly, Jaynes’ demon tells us that the left side contains neon-20, while the right side contains neon-22, and thus, we were not subject to the Gibbs paradox after all! And so we have just wasted some perfectly good free energy for nothing.

We ask, “Wasted? How could we have wasted anything unless there is a practical way to extract energy? You say they are two distinct gases, but what difference does it make if you simply call one side ‘neon-20’ and the other ‘neon-22’?”

So Jaynes’ demon gives us two membranes, one permeable only to neon-20, while the other only permeable to neon-22. This allows us to put both of them in the middle, and slowly let the two gases diffuse into the middle, extracting mechanical work by the pressure on the two membranes.

The point of the thought-experiment is that the entropy of a system is, in a practical sense, subjective. The tank of gas might as well have maximal entropy if we don’t have the two membranes. But as soon as we have the two membranes, it expands our space of possible actions, and previously “lost” work, suddenly becomes extractable, and the entropy of the world drops.

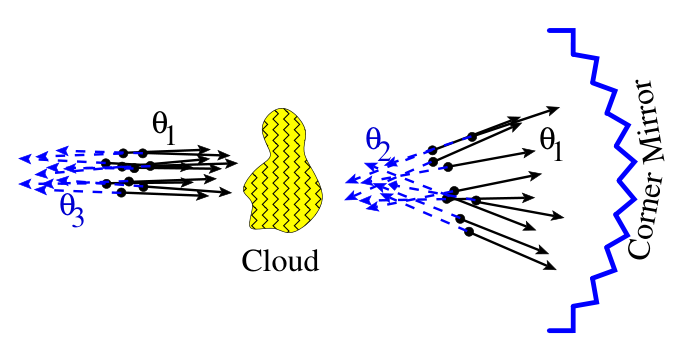

The following is a more concrete example from optics. Shoot some hard balls through a bumpy region (an analogy of shining a laser light through a bumpy sheet of glass). The balls would be scattered. It would increase the entropy of the system… unless we reflect them via a corner mirror, then the balls would be reflected right back through the bumpy region, and return to the previous zero-entropy state!

Did we violate the second law? Not so, if we think of entropy as a measure of our actionable ignorance. Without a corner mirror, we cannot use the detailed information of the scattered ball beam, and have to treat it as essentially random. However, all the detailed information is still there, in the bumpy region and in the scattered beam, and it takes a corner mirror to “unlock” the information for us.

Thus, with a corner mirror, the scattered beam still has zero entropy, but without it, the scattered beam has positive entropy.

The old adage ‘knowledge is power’ is a very cogent truth, both in human relations and in thermodynamics.

— E. T. Jaynes

Still, there is a nagging feeling that, even if we have no corner reflector, and so the beam of light truly has increased in entropy relative to us, as long as the corner reflector is theoretically possible, then the entropy of the beam of light is still unchanged in itself. Isn’t this blatant psychologism? Haven’t we reduced objective entropy out there to subjective information in here? Surely even if nobody is around to see it, if a tank of gas explodes in a forest, entropy still makes a sound.

Responding to this would entangle us into a whole mess of philosophical arguments about objective vs subjective probability, and observer vs observed phenomena. My quick answer is simply that knowledge isn’t magical, and even nature does not laugh at the difficulty of inference.3 If the entropy of a tank of gas appears high to us, then chances are, it would appear even higher to a car engine, for the car engine has only a few ways to interact with this tank of gas, unlike us, who have all of modern technology. A car engine can only burn up the tank of gas, but we can distill it, extract it, push it through membranes, etc. The tank of gas has higher entropy relative to the car engine – yes, the car engine has an opinion about the world, as much as we do. It has subjective beliefs about everything it can touch and burn, and we can listen to it with the language of math.

3 Supposedly, Laplace said “Nature laughs at the difficulties of integration.”, but when I tried to hunt it down, all citations led directly to a 1953 essay, which cites an anonymous “mathematician”. I have tried searching for it in French, with no results. I think this is actually a pseudo-Laplace quote, a paper ghost, much like how people kept attributing things to pseudo-Aristotle.

It would take a physicist a long time to work out the problem and he could achieve only an approximation at that. Yet presumably the coin will stop exactly where it should. Some very rapid calculations have to be made before it can do so, and they are, presumably, always accurate. And then, just as I was blushing at what I supposed he must regard as my folly, the mathematician came to my rescue by informing me that Laplace had been puzzled by exactly the same fact. “Nature laughs at the difficulties of integration.” (Krutch 1953, 148)

However, I’m inclined to believe that even nature does not laugh at the difficulties of integration. In fact, one of my hobbies is to “explain” natural laws as ways for nature to avoid really difficult integrations. For example, Newtonian gravity is “explained” by the Barnes–Hut algorithm that allows n-body gravity to be calculated in \(O(n \ln n)\) time.

What is the payoff of this long detour? I think it is to provide an intuitive feeling of the identity of physics and information. Information is physical, and physics is informational. If you are a physicist, then this allows you to invade into other fields, much like statistical mechanists “invaded” other fields like artificial intelligence and economics. If you are a mathematician or a computer scientist, then this allows you to translate intuition about physical objects into intuition about high-dimensional probability distributions and large combinatorial objects. And if you are Maxwell’s demon, then you won’t listen to me – I would gladly listen to you, since magically transforming information and physics back and forth is your entire reason of existence!

In the above formulation, maximal entropy inference is interpreted as how rational agents can act optimally under limited information. An alternative viewpoint argues that it is not the entropy that is fundamental, but the argmax of something that is fundamental. In this view, if we replaced the entropy function \(S[\cdot]\) with a… kentropy function \(K[\cdot]\), such that any scientist who uses reasons about experiments on a system using

\[\rho^* = \mathop{\mathrm{argmax}}_{\rho: \rho\text{ satisfies constraints }C}K[\rho]\]

would still satisfy certain axioms of rationality, then \(K\) should be as good as \(S\).

In this vein, there is a Shore–Johnson theorem which shows that if a scientist using a certain kentropy function \(K\) would end up satisfying these certain axioms of rationality, then

\[\mathop{\mathrm{argmax}}_{\rho: \rho\text{ satisfies constraints }C}S[\rho] = \mathop{\mathrm{argmax}}_{\rho: \rho\text{ satisfies constraints }C}K[\rho]\]

In other words, as long as we are doing constrained maximization, the choice of the entropy doesn’t matter. In particular, the standard entropy function \(S\) is good enough – any constraint-maximizing rational thinker thinks as if it is doing constraint-maximizing entropy inference, so we might as well use \(S\) and stop worrying about alternative ones like \(K\).

IF you are steeped in Bayesian epistemology, this is in the same vein as those Bayes-theological theorems proving that any rational being must use Bayes theorem for updates. (Pressé et al. 2013) Other examples include Blackwell’s informativeness theorem, Aumann’s agreement theorem, Cox’s theorem, Dutch book theorems, etc.

Mathematical developments

Fundamental theorems

Theorem 1 (Liouville’s theorem) For any phase space and any Hamiltonian over it (which can change with time), phase-space volume is conserved under motion.

For any probability distribution \(\rho_0\), if after time \(t\), it evolves to \(\rho_t\), and a point \(x(0)\) evolves to \(x(t)\), then \(\rho_0(x(0)) = \rho_t(x(t))\).

The proof is found in any textbook, and also Wikipedia. Since it is already simple enough, and I can’t really improve upon it, I won’t.

Corollary 1 (conservation of entropy) For a Hamiltonian system, with any Hamiltonian (which can change with time), for any probability distribution \(\rho\) over its phase space, its entropy is conserved over time.

In particular, we have the following corollary:

Corollary 2 Given any set of constraints, if the Hamiltonian preserves these constraints over time, then any constrained-maximal entropy distribution remains constrained-maximal under time-evolution.

In most cases, the constraint is of a particular form: the expectation is known. In that case, we have the following theorem:

Theorem 2 (maximal entropy under linear constraints) For the following constrained optimization problem

\[ \begin{cases} \max_\rho S[\rho] \\ \int A_1(x) \rho(x) &= \bar A_1 \\ \cdots &= \cdots \\ \int A_n(x) \rho(x) &= \bar A_n \\ \end{cases} \]

Consider the following ansatz

\[ \rho(x) = \frac{1}{Z(a_1, \dots, a_n)} e^{-\sum_i a_i A_i(x)} \]

where \(Z(a_1, \dots, a_n) = \int e^{-\sum_i a_i A_i(x)} dx\), and \(a_1, \dots, a_n\) are chosen such that the constraints \(\int A_i(x) \rho(x) = \bar A_i\) are satisfied.

If the ansatz exists, then it is the unique solution.

The ansatz solution is what you get by Lagrangian multipliers. For a refresher, see the Analytical Mechanics#Lagrange’s devil at Disneyland. The theorem shows that the solution is unique – provided that it exists. Does it exist? Yes, in physics. If it doesn’t exist, then we are clearly not modelling a physically real phenomenon.

In physics, these are “Boltzmann distributions” or “Gibbs distributions”. In statistics, these are exponential families. Because they are everywhere, they have many names.

Define a distribution \(\rho\) as given in the statement of the theorem. That is,

\[ \rho(x) = \frac{1}{Z(a_1, \dots, a_n)} e^{-\sum_i a_i A_i(x)} \]

etc.

Now, it remains to prove that for any other \(\rho'\) that satisfies the constraints, we have \(S[\rho] \geq S[\rho']\).

By routine calculation, for any probability distribution \(\rho'\),

\[ D_{KL}(\rho' \| \rho) = -S[\rho'] + \sum_i a_i \braket{A_i}_{\rho'} + \ln Z(a_1, \dots, a_n) \]

If \(\rho'\) satisfies the given constraints, then \(D_{KL}(\rho' \| \rho) = -S[\rho'] + \mathrm{Const}\) where the constant does not depend on \(\rho'\), as long as it satisfies the constraints. Therefore, \(S[\rho']\) is maximized when \(D_{KL}(\rho' \| \rho)\) is minimized, which is exactly \(\rho\).

The following proposition is often used when we want to maximize entropy in a two-step process:

Theorem 3 (compound entropy) If \(\rho_{X,Y}\) is a probability distribution over two variables \((X, Y)\), then

\[S[\rho_{X,Y}] = S[\rho_Y] + \braket{S[\rho_{X|y}]}_y\]

or more succinctly,

\[S_{X,Y} = S_Y + \braket{S_{X|y}}_y\]

\(\rho_Y\) is the probability distribution over \(Y\), after we integrate/marginalize \(X\) away:

\[ \rho_Y(y) := \int \rho_{X,Y}(x,y)dx \]

\(\rho_{X|y}\) is the conditional probability distribution over \(X\), conditional on \(Y=y\):

\[ \rho_{X|y}(x) := \frac{\rho_{X,Y}(x,y)}{\int \rho_{X,Y}(x,y) dx} \]

\(\braket{\cdot}_y\) is the expectation over \(\rho_Y\):

\[ \braket{S_{X|y}}_y := \int S_{X|y} \rho_Y(y)dy \]

Consider a compound system in ensemble \(\rho(x, y)\). Its entropy is

\[S[\rho] = -\int dxdy \; \rho(x, y) \ln \rho(x, y)\]

We can take the calculation in two steps:

\[S[\rho] = -\int dxdy \; \rho(x|y)\rho(y) (\ln \rho(x|y) + \ln \rho(y)) = S[\rho_Y] + \braket{S[\rho_{X|y}]}_y\]

Intuitively, what does \(S_{X,Y} = S_Y + \braket{S_{X|y}}_y\) mean? It means that the entropy in \((X, Y)\) can be decomposed into two parts: the part due to \(Y\), and the part remaining after we know \(Y\), but not yet knowing \(X\). In the language of information theory, the total information in \((X, Y)\) is equal to the information in \(Y\), plus the information of \(X\) conditional over \(Y\):

\[ I(X, Y) = I(Y) + I(X|Y) \]

Microcanonical ensembles

If the only constraint is the constant-energy constraint \(H(x) = E\), then the maximal entropy distribution is the uniform distribution on the shell of constant energy \(H = E\). It is uniform, because once we enforce \(H(x) = E\), there are no other constraints, and so by Theorem 2, the distribution is uniform.

Thus, we obtain the microcanonical ensemble:

\[\rho_E(x) \propto 1_{H(x) = E}\]

It is sometimes necessary to deal with the “thickness” of the energy shell. In that case, \(\rho_E(x) \propto \delta(H(x) - E)\), where \(\delta\) is the Dirac delta function.

By Theorem 2, the microcanonical ensemble is the unique maximizer of entropy under the constraint of constant energy. In particular, if the Hamiltonian does not change over time, then any microcanonical ensemble is preserved over time. In words, if we uniformly “dust” the energy shell of \(H(x) = E\) with a cloud of system states, and let all of them evolve over time, then though the dust particles move about, the cloud remains exactly the same.

More generally, we can impose more (in)equality constraints, and still obtain a microcanonical ensemble. For example, consider a ball flying around in an empty room with no gravity. The Hamiltonian is \(H(q, p) = \frac{p^2}{2m}\), and its microcanonical ensemble is \(\rho(q, p) \propto \delta(p = \sqrt{2mE})1[p \in \text{the room}]\). That is, its velocity is on the energy shell, while its position is uniform over the entire room.

If we want to specify the number of particles for each chemical species, then that can be incorporated into the microcanonical ensemble as well. For example, if we want the number of species \(i\) be exactly \(N_{i0}\), then we multiply \(\rho\) by \(1[N_i = N_{i0}]\).

Canonical ensembles

Consider a small cup of liquid in a giant tank of chemical fluids, or a small lump of air in the whole atmosphere. These are all examples of “a small system in contact with a giant system”. In general, if we have a small system connected to a large system, then we typically don’t care about the large system, and only want to study the small system’s ensemble. How do we do that? Rigorously, we would need to first find the microcanonical ensemble for the total compound small–large system, then take an integral over all states of the large system, resulting in an ensemble over just the small system, as in

\[\rho_{\text{small}}(x) = \int \rho_{\text{total}}(x, y) dy\]

where \(x\) ranges over the states of the small system, and \(y\) of the large system.

However, this is difficult to perform in general, because the large system, having so many particles, has a huge state space. We cannot do it in general. However, there is an easy way out. Whenever we have a big system changing only a little bit, we can assume linearity. Whenever we have a function \(f(x)\) where \(x\) changes only a little bit around \(x_0\), we can assume \(f(x) \approx f(x_0) + f'(x_0) (x - x_0)\). This is the trick that will allow us to solve the problem.

Assuming that the energy of the compound system is extensive, we obtain the canonical ensemble. Assuming that the energy and volume are both extensive, we obtain the grand canonical ensemble, etc. The following table would be very useful

| extensive constraint | ensemble | free entropy |

|---|---|---|

| none | microcanonical | entropy |

| energy | canonical | Helmholtz free entropy |

| energy, volume | ? | Gibbs free entropy |

| energy, particle count | grand canonical | Landau free entropy |

| energy, volume, particle count | ? | ? |

There are some question marks in the above table, because there are no consensus names for those question marks. What is more surprising is that there is no name for the ensemble of constrained energy and volume. I would have expected something like the “Gibbs ensemble”, but history isn’t nice to us like that. Well, then I will name it first, as the big canonical ensemble. And while we’re at it, let’s fill the last row as well:

| extensive constraint | ensemble | free entropy |

|---|---|---|

| none | microcanonical | entropy |

| energy | canonical | Helmholtz free entropy |

| energy, volume | big canonical | Gibbs free entropy |

| energy, particle count | grand canonical | Landau free entropy |

| energy, volume, particle count | gross canonical | EVN free energy |

In classical thermodynamics, extensivity means that entropy of the compound system can be calculated in a two-step process: calculate the entropy of each subsystem, then add them up. The important fact is that a subsystem still has enough independence to have its own entropy.

This is not always obvious. If we have two galaxies of stars, we can think of each as a “cosmic gas” where each particle is a star. Now, if we put them near each other, then the gravity between the two galaxies would mean it is no longer meaningful to speak of “the entropy of galaxy 1”, but only “the entropy of galaxy-compound 1-2”.

In statistical mechanics, extensivity means a certain property of each subsystem is unaffected by the state of the other subsystems, and the total is the sum of them. So for example, if \(A\) is an extensive property, then it means

\[ A(x_1, \dots, x_n) = A_1(x_1) + \dots + A_n(x_n) \]

Like most textbooks, we assume extensivity by default, although as we noted in Classical Thermodynamics and Economics, both classical thermodynamics and statistical mechanics do not require extensivity. We assume extensivity because it is mathematically convenient, and good enough for most applications.

In the following theorem, we assume that the total system is extensive, and is already in the maximal entropy distribution (microcanonical ensemble)

Theorem 4 If the two systems are in energy-contact, and energy is conserved, and energy is extensive, and the compound system is in a microcanonical ensemble, then the small system is in the canonical ensemble

\[ \rho(x) \propto e^{-\beta H(x)} \]

where \(\beta\) is the marginal entropy of energy of the large system:

\[\beta := \partial_E S[\rho_{bath, E}]\]

Similarly, if the two systems are in energy-and-particle-contact, then the small system is in the grand canonical ensemble

\[ \rho(x) \propto e^{-(\beta H(x) + (-\beta \mu) N(x))} \]

where \(-\beta\mu\) is the marginal entropy of particle of the large system:

\[-\beta\mu := (\partial_N S[\rho_{bath, E, N}])_{E}\]

Most generally, if the two systems are in \(q_1, \dots, q_m\) contact, and \(q_1, \dots, q_m\) are conserved and extensive quantity, then

\[\rho(x) \propto e^{-\sum_i p_i q_i(x)}\]

where \(p_i = (\partial_{q_i} S[\rho_{bath, q}])_{q}\) is the marginal entropy of \(q_i\) of the large system.

We prove the case for the canonical ensemble. The other cases are similar.

Since the total distribution of the whole system is the maximal entropy distribution, we are faced with a constrained maximization problem:

\[\max_\rho S[\rho]\]

By Theorem 3,

\[S = S_{\text{system}}(E_{\text{system}}) + \braket{S_{bath|system}(E_{total} - E_{\text{system}})}_{\text{system}}\]

Since the bath is so much larger than the system, we can take just the first term in its Taylor expansion:

\[S_{bath|system}(E_{total} - E_{\text{system}}) = S_{\text{bath}}(E_{total}) - \beta E_{\text{system}}\]

where \(E_{total}\) is the total energy for the compound system, \(\beta = \partial_E S_{\text{bath}}|_{E = E_{total}}\) is the marginal entropy per energy, and \(E_{\text{system}}\) is the energy of the system.

This gives us the linearly constrained maximization problem of

\[\max_{\rho_{\text{system}}} (S_{\text{system}} - \beta \braket{E_{\text{system}}}_{\rho_{\text{system}}})\]

and we apply Lagrange multipliers to finish the proof.

Extensivitiy in statistical mechanics yields extensivity in thermodynamics. Specifically, writing \(S_{\text{bath}}(E)\), instead of \(S_{\text{bath}}(E, E_{\text{system}})\), requires the assumption of extensivity. Precisely because the bath and the system do not affect each other, we are allowed to calculate the entropy of the bath without knowing anything about the energy of the system.

\(S_{\text{bath}}\) is the logarithm of the surface area of the energy shell \(H_{\text{bath}} = E_{\text{bath}}\). By extensivity, \(H(x_{\text{bath}}, x_{\text{system}}) = H_{\text{bath}}(x_{\text{bath}}) + H_{\text{system}}(x_{\text{system}})\), so the energy shells of the bath depends on only \(E_{\text{bath}}\), not \(E_{\text{system}}\).

The proof showed something extra: If the small system is in distribution \(\rho\) that does not equal to the equilibrium distribution \(\rho_B\), then the total system’s entropy is

\[S = S_{max} - D_{KL}(\rho \| \rho_B)\]

which is related to of Sanov’s theorem and large deviation theory, though I don’t know how to make this precise.

What if we have a system in volume-contact, but not thermal-contact? This might happen when the system is a flexible bag of gas held in an atmosphere, but the bag is thermally insulating. Notice that in this case, the small system still exchanges energy with the large system via \(d\braket{E} = -Pd\braket{V}\). We don’t have \(E = -PdV\), because the small system might get unlucky. During a moment of weakness, all its particles has abandoned their frontier posts, and the bath has taken advantage of this by encroaching on its land. The system loses volume by \(\delta V\), without earning a compensating \(\delta E = P \delta V\). In short, the thermodynamic equality \(E = -PdV\) is inexact in statistical mechanics, and only holds true on the ensemble average.

In this case, because pressure is a constant, we have \(d(E + PV) = 0\), and so we have the enthalpic ensemble \(\rho \propto e^{-\beta H}\), where \(H := E + PV\) is the enthalpy4.

Specifically, if you work through the same argument, you would end up with the following constrained maximization problem:

\[ \begin{cases} \max_{\rho_{\text{system}}} (S_{\text{system}} - \beta \braket{E_{\text{system}}}_{\rho_{\text{system}}} - \beta P \braket{V}) \\ \braket{E_{\text{system}}} + P\braket{V_{\text{system}}} = \mathrm{Const} \end{cases} \]

yielding the enthalpic ensemble (or the isoenthalpic-isobaric ensemble).

4 Sorry, I know this is not the Hamiltonian, but we are running out of letters to use.

Free entropies

Just like in thermodynamics, it is useful to consider free entropies, which are the convex duals of the entropy:

- Helmholtz free entropy: \(f[\rho] := S[\rho] - \beta \braket{E} = \int dx \; \rho(x) (-\ln \rho(x) - \beta E(x))\).

- Gibbs free entropy: \(g[\rho] := S[\rho] - \beta \braket{E} - \beta P \braket{V}\).

- Landau free entropy: \(\omega[\rho] := S[\rho] - \beta \braket{E} - \beta (-\mu) \braket{N}\). Note that the sign of \((-\mu)\) is not a typo. It is simply that 19th-century chemists have messed up the sign convention, like how Benjamin Franklin messed up the sign convention of electric charge.

Etc. Of those, we would mostly use the Helmholtz free energy, so I will write it down again:

\[ f[\rho] := S[\rho] - \beta \braket{E} = \int dx \; \rho(x) (-\ln \rho(x) - \beta E(x)) \]

Theorem 5 (chain rule for free entropies) \(f_X = S_Y + \braket{f_{X|y}}_y\), and similarly \(g_X = S_Y + \braket{g_{X|y}}_y\), and similarly for all other free entropies.

\[ \begin{aligned} f_X &= S_X - \beta \braket{E}_x \\ &= S_Y + \braket{S_{X|y}}_y - \beta \braket{\braket{E}_{x \sim X|y}}_y \\ &= S_Y + \braket{f_{X|y}}_y \end{aligned} \]

A common trick in statistical mechanics is to characterize the same equilibrium in many different perspectives. For example, the canonical ensemble has at least 4 characterizations. “Muscle memory” in statistical mechanics would allow you to nimbly applying the most suitable one for any occasion.

Theorem 6 (4 characterizations of the canonical ensemble)

- (total entropy under fixed energy constraint) The canonical ensemble maximizes total entropy when the system is in energy-contact with an energy bath that satisfies \(\partial_E S_{\text{bath}} = \beta\), under the constraint that \(E + E_{\text{bath}}\) is fixed.

- (entropy under mean energy constraint) Let \(E_0\) be a real number, and let \(\beta\) be the unique solution to \(\int dx \; e^{-\beta E(x)} = E_0\). A system maximizes its entropy under constraint \(\braket{E} = E_0\) when it assumes the canonical ensemble with \(\beta\).

- (Boltzann’s thermodynamic limit argument): Take \(N\) copies of a system, and connect them by energy-contacts. Inject the system with total energy \(NE_0\), and let the system reach its microcanonical ensemble. Then at the thermodynamic limit of \(N\to \infty\), the distribution of a single system is the canonical distribution with \(\beta\) that is the unique solution to \(\int dx \; e^{-\beta E(x)} = E_0\).

- (free entropy) A system maximizes its Helmholtz free entropy when it assumes the canonical ensemble. At the optimal distribution \(\rho^*\), the maximal Helmholtz free entropy is \(f[\rho^*] = \ln Z\), where \(Z = \int dx \; e^{-\beta E(x)}\) is the partition function.

- We already proved this.

- Use the Lagrange multiplier.

- Isolate one system, and treat the rest as an energy-bath.

- \(f[\rho] = \ln Z - D_{KL}(\rho \| \rho_B)\).

The partition function

When the system is in a canonical ensemble, we can define a convenient variable \(Z = \int dx\; e^{-\beta E(x)}\) called the partition function. As proven in Theorem 6, the partition function is equal to \(e^f\), where \(f\) is the Helmholtz free entropy of the canonical ensemble.

Theorem 7 (the partition function is the cumulant generating function of energy) Let a system be in canonical ensemble with inverse temperature \(\beta\), and let \(K(t) := \ln \braket{e^{tE}}\) be the cumulant generating function of its energy, then \[K(t) = \ln Z(\beta-t) - \ln Z(\beta)\]

In particular, the \(n\)-th cumulant of energy is

\[\kappa_n(E) = K^{(n)}(t) |_{t=0} = (-\partial_\beta)^n (\ln Z)\]

A similar proposition applies for the other ensembles and their free entropies.

The proof is by direct computation.

For example, the first two cumulants are the mean and variance:

\[\braket{E} = (-\partial_\beta) (\ln Z), \quad \mathrm{Var}(E) = \partial_\beta^2 (\ln Z)\]

Typical systems are made of \(N\) particles, where \(N\) is large, and that these particles are only weakly interacting. In this case, the total Helmholtz free entropy per particle converges at the thermodynamic limit of \(N \to \infty\):

\[ \lim_N \frac 1N \ln Z \to \bar f_\beta \]

Thus, for large but finite \(N\), we have

\[\braket{E} \approx -N \partial_\beta \bar f_\beta, \quad \mathrm{Var}(E) = N\partial_\beta^2 \bar f_\beta\]

In particular, the relative fluctuation scales like \(\frac{\sqrt{\mathrm{Var}(E)}}{\braket{E}} \sim N^{-1/2}\).

Conditional entropies

Given any two random variable \(X, Y\), and an “observable” variable \(Y\) that is determined by \(X\) by some function \(h\), such that \(Y = h(X)\). If we know \(X\), we would know \(Y\), but it is not so conversely, as multiple \(X\) may correspond to the same \(Y\). Typically, we use \(Y\) as a “summary statistic” for the more detailed, but more complicated \(X\). For example, we might have multiple particles in a box, such that \(X\) is their individual locations, while \(Y\) is their center of mass.

Theorem 8 (conditional entropy) Given any random variable \(X\), and an “observable” variable \(Y\) that is determined by \(X\), and some constraints \(c\) on \(X\), if \(X\) is the distribution that maximizes entropy under constraints \(c\), with entropy \(S_X^*\), then the observable \(Y\) is distributed as

\[\rho_Y^*(y) = e^{S_{X|y}^* - S_X^*}, \quad e^{S_X^*} = \int dy\; e^{S_{X|y}^*}\]

where \(S_{X|y}^*\) is the maximal entropy for \(X\) conditional on the same constraints, plus the extra constraint that \(Y = y\).

By assumption, \(X\) is the unique solution to the constrained optimization problem

\[ \begin{cases} \max S_X \\ \text{constraints on $x$} \end{cases} \]

By Theorem 3, the problem is equivalent to:

\[ \begin{cases} \max S_Y + \braket{S_{X|y}}_{y\sim Y} \\ \text{constraints on $x$} \end{cases} \]

Now, we can solve the original problem in a two-step process: For each possible observable \(y\sim Y\), we solve an extra-constrained problem:

\[ \begin{cases} \max S_{X|y} \\ \text{original constraints on $x$} \\ \text{$x$ must be chosen such that the observable $Y = y$} \end{cases} \]

Then, each such problem gives us a maximal conditional5 entropy \(S_{X|y}^*\), and we can follow it up by solving for \(Y\) with

\[\max\left(S_Y + \braket{S_{X|y}^*}_{y \sim Y}\right)\]

Again, the solution is immediate once we see it is just the KL-divergence:

\[S_Y + \braket{S_{X|y}^*}_{y \sim Y} = - \int dy \; \rho_Y(y) \ln\frac{\rho_Y(y)}{e^{S_{X|y}^*}} = \ln Z - D_{KL}(\rho_Y \| \rho_Y^*)\]

where

\[Z = \int dy\; e^{S_{X|y}^*}, \quad \rho_Y^*(y) = \frac{e^{S_{X|y}^*}}{Z}\]

At the optimal point, the entropy for \(X\) is maximized at \(S_X^* = \ln Z - 0\), so \(Z = e^{S_X^*}\).

5 If you’re a pure mathematician, you can formalize this using measure disintegration.

Consider a small system with energy states \(E_1, E_2, \dots\) and a large bath system, in energy contact. We can set \(X\) to be the combined state of the whole system, and \(Y\) to be the state of the small system. Once we observe \(y\), we have fully determined the small system, so the small system has zero entropy, and so all the entropy comes from the bath system: \[S_{X|y}^* = S_{\text{bath}} = S_{\text{bath}}(E_{total}) - \beta E_y\]

Consequently, the distribution of the small system is \(\rho_Y(y) \propto e^{-\beta E_y}\), as we expect.

A similar calculation gives us the grand canonical ensemble, etc.

Theorem 9 (conditional free entropy) Given any random variable \(X\), and an “observable” variable \(Y\) that is determined by \(X\), and some constraints \(c\) on \(X\), if \(X\) is the distribution that maximizes Helmholtz free entropy under constraints \(c\), with Helmholtz free entropy \(f_X^*\), then the observable \(Y\) is distributed as \[\rho_Y^*(y) = e^{f_{X|y}^* - f_X^*}, \quad e^{f_X^*} = \int dy\; e^{f_{X|y}^*}\]

where \(f_{X|y}^*\) is the maximal Helmholtz free entropy for \(X\) conditional on the same constraints, plus the constraint that \(Y = y\).

Similarly for Gibbs free entropy, and all other free entropies.

First note that \(f_X = S_Y + \braket{f_{X|y}}_y\), then argue in the same way.

Kinetic gas theory

Kinetic gas theory is the paradigm for pre-1930 statistical mechanics. Boltzmann devoted his best years to kinetic gas theory. The connection between kinetic gas theory and statistical mechanics was so strong that it was often confused as one. Modern statistical mechanics has grown to be so much more than this, so we will only settle for deriving the van der Waals equation. This strikes a balance between triviality (the ideal gas equation could be derived in literally two lines) and complication (Boltzmann’s monumental Lectures on Gas Theory has 500 pages (Boltzmann 2011)).

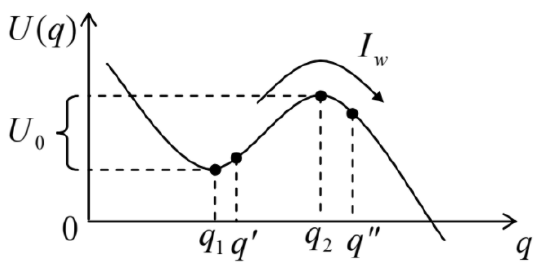

To review, the van der Waals gas equation is

\[P = \frac{N/\beta}{V- bN} - \frac{cN^2}{V^2}\]

where \(b, c\) are real numbers that depend on the precise properties of the gas molecules. The term \(V - bN\) accounts for the fact that each gas molecule excludes some volume, so that, as \(N\) grows, it corrects for the ideal gas pressure \(P_{ideal}\) by \(\sim P_{ideal}\frac{bN}{V}\). The term \(\frac{cN^2}{V^2}\) accounts for overall interaction energy between gas molecules. Suppose the interaction is overall attractive, then we would have \(c > 0\), and otherwise \(c < 0\).

Ideal gas

Consider a tank of ideal gas consisting of \(N\) point-masses, flying around in a free space with volume \(V\). The tank of gas has inverse temperature \(\beta\), so its phase-space distribution is

\[ \rho(q_{1:N}, p_{1:N}) = \prod_{i\in 1:N} \rho(q_i, p_i), \quad \rho(q, p) = \underbrace{\frac{1}{V}}_{\text{free space}} \times \underbrace{\frac{e^{-\beta \frac{\|p_i\|^2}{2m}}}{(2\pi m/\beta)^{3/2}}}_{\text{Boltzmann momentum distribution}} \]

The total energy of the gas has no positional term, so it is all due to momentum. Because the momenta coordinates \(p_{1,x}, p_{1,y}, \dots, p_{N,y}, p_{N,z}\) do not interact, their kinetic energies simply sum, giving

\[ U = 3N \times \int_{\mathbb{R}}dp\; \frac{p^2}{2m} \frac{e^{-\frac{p^2}{2m/\beta}}}{\sqrt{2\pi m/\beta}} = \frac{3N}{2\beta} \]

This is the same as Boltzmann’s derivation so far. However, although entropy is exactly defined when there are only finitely or countably many possible states, as \(\sum_{j \in \mathbb{N}} -p_j \ln p_j\), this is not so when state space is uncountably large, like \(\mathbb{R}^{6N}\). When Boltzmann encountered the issue, he solved it by discretizing the phase space into arbitrary but small cubes. The effect is that he could rederive the ideal gas laws, but the entropy has an additive constant that depends on the exact choice of the cube size. This was not a problem for Boltzmann, who was trying to found classical thermodynamics upon statistical mechanics, and in classical thermodynamics, entropy does have an indeterminate additive constant.

Later, Planck in his derivation of the blackbody radiation law, used the same trick. Ironically, Planck did not believe in atoms nor quantized light, but he did make the correct assumption that there is a natural unit of measurement for phase space area, which he called \(h\), and which we know as Planck’s constant. (Duncan and Janssen 2019, chap. 2).

Following Planck, we discretize the phase space into little cubes of size \(h^{3N}\), and continue:

\[ \begin{aligned} S &= -\sum_{i \in\text{Little cubes}} p_i \ln p_i \\ &\approx -\sum_{i \in\text{Little cubes}} (\rho(i) h^{3N}) \ln (\rho(i) h^{3N}) \\ &\approx -\int_{\mathbb{R}^{6N}} dp_{1:N}dq_{1:N} \; \rho(p_{1:N}, q_{1:N}) \ln (\rho(p_{1:N}, q_{1:N}) h^{3N}) \\ &= -\int_{\mathbb{R}^{6N}} dp_{1:N}dq_{1:N} \; \rho(p_{1:N}, q_{1:N}) \ln \rho(p_{1:N}, q_{1:N}) - 3N \ln h \\ &= -\underbrace{N\int_{\mathbb{R}^{6}} dpdq \; \rho(p, q) \ln \rho(p, q)}_{\text{non-interacting particles}} - 3N \ln h \end{aligned} \]

Now, the entropy of a single atom \(\int_{\mathbb{R}^{6}} dpdq \; \rho(p, q) \ln \rho(p, q)\) factors again into one position space and three momentum spaces:

\[ \begin{aligned} -\int_{\mathbb{R}^{6}} dpdq \; \rho(p, q) \ln \rho(p, q) &= -\int_{\mathbb{R}^3} dq \rho(q) \ln \rho(q) - \sum_{i = x, y, z} \int_{\mathbb{R}} dp_i \ln \rho(p_i) \\ &= \ln V + 3 \times \underbrace{(\text{entropy of }\mathcal N(0, m/\beta))}_{\text{check Wikipedia}} \\ &= \ln V + \frac 32 \ln(2\pi m/\beta) + \frac 32 \\ \end{aligned} \]

Does this remind you of our previous discussion about how differential entropy is ill-defined? Finally that discussion is paying off! The choice of a natural unit of measurement in phase space is equivalent to fixing a natural base measure on phase space, such that differential entropy becomes well-defined.

The above is not yet correct, because permuting the atoms does not matter. That is, we have grossly inflated the state space. For example, if \(N = 2\), then we have counted the state \((q_1, p_1, q_2, p_2)\), then \((q_2, p_2, q_1, p_1)\), as if they are different, but they must be counted as the same. We must remove this redundancy by “quotienting out” the permutation group over the particles. The effect is dividing the phase space by \(\ln N!\):

\[ \begin{aligned} \frac SN &= \ln V + \frac 32 \ln(2\pi m/\beta) + \frac 32 - 3 \ln h - \underbrace{\frac 1N \ln N!}_{\text{Stirling's approximation}} \\ &= \ln\left[\frac{V}{N} \left(\frac{2\pi m}{\beta h^2}\right)^{\frac 32}\right] + \frac 52 \end{aligned} \]

giving us the Sackur–Tetrode formula:

\[ S(U, V, N) = \ln\left[\frac{V}{N} \left(\frac{4\pi m U}{3N h^2}\right)^{\frac 32}\right] + \frac 52 \]

All other thermodynamic quantities can then be derived from this. For example, the pressure is

\[P = \beta^{-1}(\partial_V S)_{U, N} = \frac{1}{\beta V}\]

more conventionally written as \(PV = \beta^{-1} = Nk_BT\), the ideal gas equation, where we have re-inserted the Boltzmann constant in respect for tradition.

In early 1900s, Walther Nernst proposed the third law of thermodynamics. The history is rather messy, but suffice to say that the version we are going to care about says, “At the absolute zero of temperature the entropy of every chemically homogeneous solid or liquid body has a zero value.”. In support, he studied experimentally the thermodynamic properties of many materials at temperatures approaching absolute zero. He had a hydrogen liquefier and could reach around \(20 \;\mathrm{K}\).

Working on the assumption that \(S = 0\) in any chemical at \(T = 0\), he could measure the entropy of any substance by slowing heating up a substance (or cooling down), measuring its heat capacity at all temperatures, then take an integral:

\[ k_B S = \int \frac{CdT}{T} \]

The low-temperature data for mercury was the most available (mercury was also the substance with which Onnes discovered superconductivity). However, mercury is mostly in a liquid form at low temperatures. Fortunately, the latent heat of vaporization \(\Delta L\) can be measured, and then we can get

\[ S_{\text{vapor}} = S_{\text{liquid}} + \frac{\Delta L}{k_BT} \]

Back then, \(k_B = \frac{\text{Gas constant}}{\text{Avogadro constant}}\), and the \(S_{\text{liquid}}, \Delta L\) of mercury were all measured, so combining these, Tetrode calculated a value of \(h\) that is within \(30\%\) of modern measurement. (Grimus 2013)

Ideal gas (again)

We rederive the thermodynamic properties of ideal monoatomic gas via Helmholtz free entropy.

\[Z = \int e^{-\beta E} = \underbrace{\frac{1}{N!}}_{\text{identical particles}} \underbrace{V^N}_{\text{position}} \underbrace{(2\pi m/\beta )^{\frac 32 N}}_{\text{momentum}}\]

In typical textbooks, they use the Helmholtz free energy, which is defined as

\[ F = -\beta^{-1} \ln Z = -\beta^{-1} N \left(\ln \frac{V}{N} + \frac 32 \ln \frac{2\pi m}{\beta} + \frac{\ln N}{2N}\right) \]

By the same formula from classical thermodynamics,

\[ d\ln Z = -\braket{E}d\beta + \beta\braket{P} dV \implies \begin{cases} \braket{E} &= \frac 32 \frac{N}{\beta} \\ \braket{P}V &= \frac{N}{\beta} \end{cases} \]

Notice how the \(\ln N!\) part simply does not matter in this case.

Hard ball gas (dilute gas limit)

In order to refine the approach, we need to account for two effects.

- Each particle takes up finite volume, which forces the total volume of positional space to be smaller than \(V^N\).

- Particle pairs have interactions, which changes the Boltzmann distribution.

The first effect can be modelled by assuming each atom is a hard ball of radius \(r\). The particles still have no interaction except that their positions cannot come closer than \(2r\).

Because there is no potential energy, the Boltzmann distribution on momentum space is the same, and so the Helmholtz free entropy \(\ln Z\) still splits into the sum of positional entropy and momentous entropy. The momentum part is still \(\frac 32 N \ln\frac{2\pi}{\beta m}\), as the hard balls do not interfere with each other’s momentum, but the position part is smaller, because the balls mutually exclude each other.

Let \(a = 8V_{ball} = \frac{32}{3}\pi r^3\) be a constant for the gas.

To measure the volume of the diminished position space, we can add one hard ball at a time. The first hard ball can take one of \(V\) possible positions, as before. The next ball’s center cannot be within \(2r\) of the center of the first ball, so its position can only take one of \((V - a)\) positions, where \(a = 8V_{ball} = \frac{32}{3}\pi r^3\) is a constant that depends on the shape of the hard balls. We continue this argument, obtaining the total volume in position space:

\[V(V- a) \cdots (V - (N-1)a) \approx V^N e^{0 -\frac{a}{V}-2\frac{a}{V} -\dots -(N-1)\frac{a}{V}} \approx V^N\left(1- \frac{N^2 a}{2V} \right)\]

This gives us

\[\braket{E} = \frac{3N}{2\beta}, \quad \braket{P}V \approx \frac N\beta \left(1 + \frac{a N}{2V}\right) \approx \frac{N/\beta}{V - \frac a2 N}\]

The second equation is the van der Waals equation when the term \(c = 0\), meaning there is neither attraction nor repulsion between particles.

In the above derivation, we are assuming that only pairwise exclusion matters. That is, we ignore the possibility that three or more balls may simultaneously intersecting each other. We can make a more accurate counting argument via the inclusion-exclusion principle, which would lead us to a virial expansion for gas.

Specifically, if the balls \(A, B\) are intersecting, which has probability \(a/V\), and \(B, C\) are also intersecting, also with probability \(a/V\), then \(A, C\) are quite likely to be also intersecting, with probability much higher than \(a/V\). Therefore, if we have excluded the cases where \(A, B\) are intersecting by subtracting with \(a/V\), and the cases where \(B, C\) are intersecting by subtracting another \(a/V\), then we should be subtracting with something less than \(a/V\). The cluster expansion principle makes this precise. Unfortunately, it requires some difficult combinatorics. The interested reader should study (Andersen 1977).

Soft ball gas (high temperature and dilute gas limit)

In the above derivation, we got one part of van der Waals equation right – the part where particles take up space. However, we have not yet accounted for the force between particles. We expect that if the particles attract each other, then \(P\) should be smaller, and if the particles repel each other, then \(P\) should be larger.

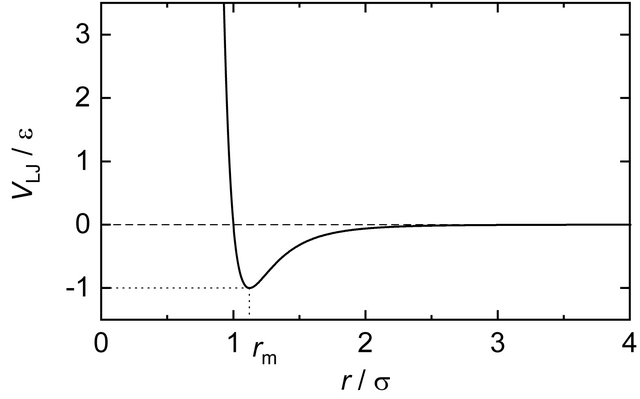

Let’s assume the gas is made of balls that has a hard core and a soft aura. That is, they repulse or attract each other at a distance, and when a pair comes too close. We also assume the force law depends only on the distances between particles.

That is, we can write such a system as having a gas potential energy \(V(q_1, \dots, q_N) = \sum_{i < j} V(\|q_i - q_j\|)\). To enforce the hard core, we should have \(V(r) = \infty\) when \(r \in [0, r_0]\).

Now, the partition function becomes

\[ Z = \int e^{-\beta\sum_i \frac{p_i^2}{2m} - \beta\sum_{i < j}V(\|q_i - q_j\|)} dqdp \]

The momentum part is still the same \((2\pi/\beta m)^{\frac 32 N}\), but the position part is more difficult now. Still, we hope it will be close to \(V^N\).

That is, we need to calculate:

\[Z = \underbrace{V^N (2\pi/\beta m)^{\frac 32 N} \frac{1}{V^N}}_{\text{ideal gas}} \int_{V^N} e^{ - \beta\sum_{i < j}V(\|q_i - q_j\|)} dq\]

The integral \(\int_{V^N} e^{ - \beta\sum_{i < j}V(\|q_i - q_j\|)} dq\) can be evaluated piece-by-piece: \[ \int_{V^N} e^{ - \beta\sum_{i < j}V(\|q_i - q_j\|)} dq = \int dq_1 \left(\int dq_2 \; e^{-\beta V(\| q_1 - q_2 \|)} \left(\int dq_3 \; e^{-\beta (V(\| q_1 - q_3 \|) + V(\| q_2 - q_3 \|))} \cdots\right)\right) \]

Because the chamber is so much larger than the molecular force-field, it is basically infinite. So for almost all of \(q_1\) (except when it is right at the walls of the chamber), \(\int dq_2 \; e^{-\beta V(\| q_1 - q_2 \|)} \approx V - \delta\), where \(\delta\) is some residual volume:

\[\delta := \int_{V} dq_2 \; (1 - e^{-\beta V(\|q_2 \|)})\]

Furthermore, because we are dealing with a dilute gas, the higher-order interactions don’t matter (see previous remark about the virial expansion). Therefore, the integral \[\int_{V} dq_3 \; e^{-\beta (V(\| q_1 - q_3 \|) + V(\| q_2 - q_3 \|))} \approx \int_{V_1 \cup V_2 \cup V_3} dq_3 \; e^{-\beta (V(\| q_1 - q_3 \|) + V(\| q_2 - q_3 \|))} \]

where \(V_1\) is the “turf” of particle \(1\), and \(V_2\) is the turf of particle \(2\), and \(V_3\) is the rest of the volume. Because the gas is dilute, we have basically \(V_1\) disjoint from \(V_2\), giving us

\[\approx \sum_{j = 1, 2, 3}\int_{V_j} dq_3\; e^{-\beta V(\| q_j - q_3 \|)} \approx V - 2\delta\]

Together, we have \[\int_{V^N} e^{ - \beta\sum_{i < j}V(\|q_i - q_j\|)} dq \approx V(V-\delta) \cdots(V - (N-1)\delta)\]

Giving us \[\ln Z \approx \ln Z_{\text{ideal}} - \frac{N^2 \delta}{2V}\]

It remains to calculate the residual volume. It has two parts, one due to the hard core and one due to the soft halo: \[\delta = \int_{\|q_2 \| \leq r_0} dq_2 \; (1 - e^{-\infty}) + \int_{\|q_2 \| > r_0} dq_2 \; (1 - e^{-\beta V(\|q_2\|)})\]

The first part is just \(a\), as calculated previously. The second part depends on the exact shape of the potential well. However, when temperature is high, \(\beta\) would be very small, so the second part is approximately \(\int dq_2 (\beta V)\), which is a constant times \(\beta\).

Thus, we have \[\ln Z \approx \ln Z_{\text{ideal}} - \frac{N^2}{V}(a + b \beta)\]

for some constants \(a, b\). This gives us the van der Waals equation: \[\braket{P} V = \frac{N}{\beta} + \frac{N^2}{\beta V}a + \frac{N^2}{V} b\]

Other classical examples

Countably many states

In a surprising number of applications, we have a single system in an energy bath. The system has finitely many, or countably infinitely many, distinguishable states, each with a definite energy: \(E_0 \leq E_1 \leq E_2 \leq \cdots\). In particular, this covers most of the basic examples from quantum mechanics. In such a system, the probability of being in state \(i\) is \(p_i = \frac 1Z e^{-\beta E_i}\) where

\[ Z = \sum_i e^{-\beta E_i} \]

Because I don’t like sections that are literally two paragraphs long, I will reformulate this as multinomial regression in mathematical statistics.

In the problem of classification, we observe some vector \(\vec X\), and we need to classify it into one of finitely many states \(\{1, 2, \dots\}\). With multinomial regression, we construct one vector \(\vec b_i\) for each possible state \(i\), and then declare that the probability of being in state \(k\) is

\[ Pr(i | X) = \frac{e^{-\vec X \cdot \vec b_i}}{Z(X)}, \quad Z(\vec X) = \sum_j e^{-\vec b_j \cdot \vec X} \]

To make the parallel clearer:

\[ \begin{aligned} \text{log probability} & & \text{observable } & &\text{ feature} & & \text{normalization constant} \\ \ln p(i | \beta) &=& -\beta & \; & E_i & & - \ln Z \\ \ln Pr(i | \vec X) &=& -\vec X & \cdot & \vec b_i & & - \ln Z \end{aligned} \]

We can make the analogy exact by adding multiple observables. Specifically, if we solve the following constrained optimization problem

\[ \begin{cases} \max S \\ \braket{\vec b} = \vec b_0 \end{cases} \]

then the solution is a multinomial classifier, with \(\vec X\) playing the role of \(\beta\).

Interpreting the physics as statistics, we can think of \(\beta\) as an “observable”. It is as if we are asking the physical system “What state are you in?” but we can only ask a very crude question “What is your energy on average?” Knowing that, we can make a reasonable guess by using the maximal entropy compatible with that answer.

Interpreting the statistics as physics, we can think of the observable \(\vec X\) as “entropic forces”, trying to push the system towards the distribution of maximal entropy. At the equilibrium of zero entropic force, we have a multinomial classifier. This is the prototypical idea of energy-based statistical modelling.

Fluctuation by \(N^{-1/2}\)

Suppose we have several tanks of oxygen gas that can exchange energy. They are in a microcanonical ensemble. Now, if we measure the total energy in the first tank, we would get a value \(E_1\). We sample it again after a while, and we would get another value. Averaging them, we would get \(\braket{E_1}\), which ought to match the prediction by classical thermodynamics. However, if thermodynamics is the theory of the average, then to go beyond it, statistical mechanics must predict the variance as well.

In Theorem 7, We had already seen that the partition function generates the mean, the variance, and all other terms. Here we expand on this.

Take several systems, and let them exchange energy, but nothing else. For concreteness, we can imagine taking several copper tanks of gas, and let them touch each other. The tanks hold their shape, not expanding or contracting. The system has total entropy

\[S = \sum_i S_i(E_i, A_i)\]

where \(A_i\) stand for the other state variables we don’t care about, because they are held constant. Now, there is a single constraint of constant total energy:

\[E = \sum_i E_i\]

In the thermodynamical limit, the compound system reaches the maximal entropy state \(E_1^*, \dots, E_n^*\), which solves the following constrained maximization

\[ \begin{cases} \max \sum_i S_i(E_i, A_i)\\ E = \sum_i E_i \end{cases} \]

By calculus, at the optimal point, all systems satisfy

\[ (\partial_{E_i} S_i)_{A_i} = \beta \]

for some number \(\beta\). This is the zeroth law of thermodynamics.

However, we are in statistical mechanics, so the compound system actually does not stay exactly at the optimal point. Instead, the energy levels fluctuate. Write the fluctuation vector as

\[Y = (\Delta E_1, \dots, \Delta E_n)\]

which satisfies the constraint \(\sum_i \Delta E_i = 0\).

Suppose we observe the fluctuation vector to be a certain value \(Y = y\), then by Theorem 8,

\[\rho_Y(y) \propto e^{S^*_{X|y}}\]

where \(S^*_{X|y}\) is the entropy of the compound system, given \(Y = y\). For small fluctuations, we do a Taylor expansion:

\[S^*_{X|y} = \sum_i S_i(E_i^*) + \underbrace{(\partial_{E_i} S_i)_{A_i}}_{\text{$=\beta$}} \Delta E_i + \frac 12 (\partial_{E_i}^2 S_i)_{A_i} (\Delta E_i)^2 + \cdots\]

Since \(\sum_i \Delta E_i = 0\) at the equilibrium point,

\[\rho_Y(\Delta E) \propto e^{\sum_i \frac 12 (\partial_{E_i}^2 S_i)_{A_i} (\Delta E_i)^2}\]

Now, \(\partial_E S = \beta\), and \(\partial_E^2 S = -\frac{1}{T^2 C}\) in typical thermodynamic notation, where \(C\) is \(\partial_T E\), the heat capacity (holding all other variables \(A\) constant), so we have the following equation:

\[\rho_Y(\Delta E) \propto e^{-\sum_i \frac{1}{2T^2 C_{i}} (\Delta E_i)^2}\]

with fluctuation on the order \(\Delta E_i \sim \sqrt{T^2 C_i}\). For most substances studied by 19th century physicists, such as gas, that is \(\sim \sqrt{N} k_B T\). If they could measure the energy of gas at \(T = 500 \;\mathrm{K}\) with precision down to \(10^{-3} \;\mathrm{J}\), that would still require a tank of gas with \(N = 10^{34} = 10^{10} \;\mathrm{mol}\). If they wanted to study this in oxygen, they would need 0.1 million tonnes of it.

Blackbody radiation

Planck’s derivation of the blackbody radiation is the first great success of quantum statistical mechanics. We give a brief presentation here that tracks Planck’s original argument.

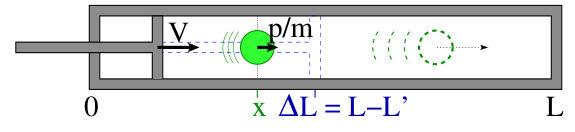

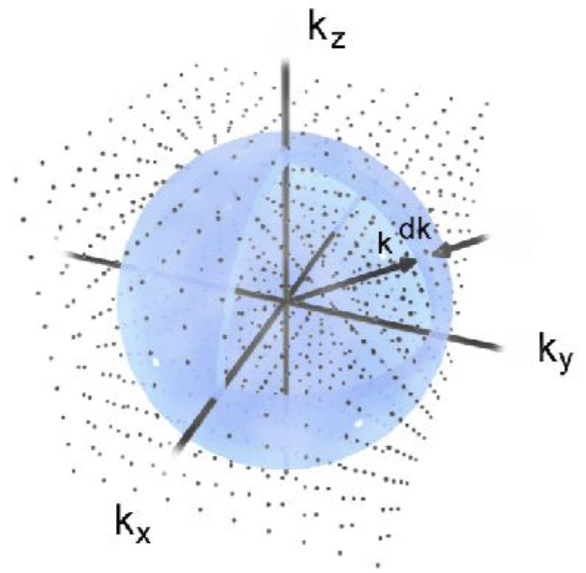

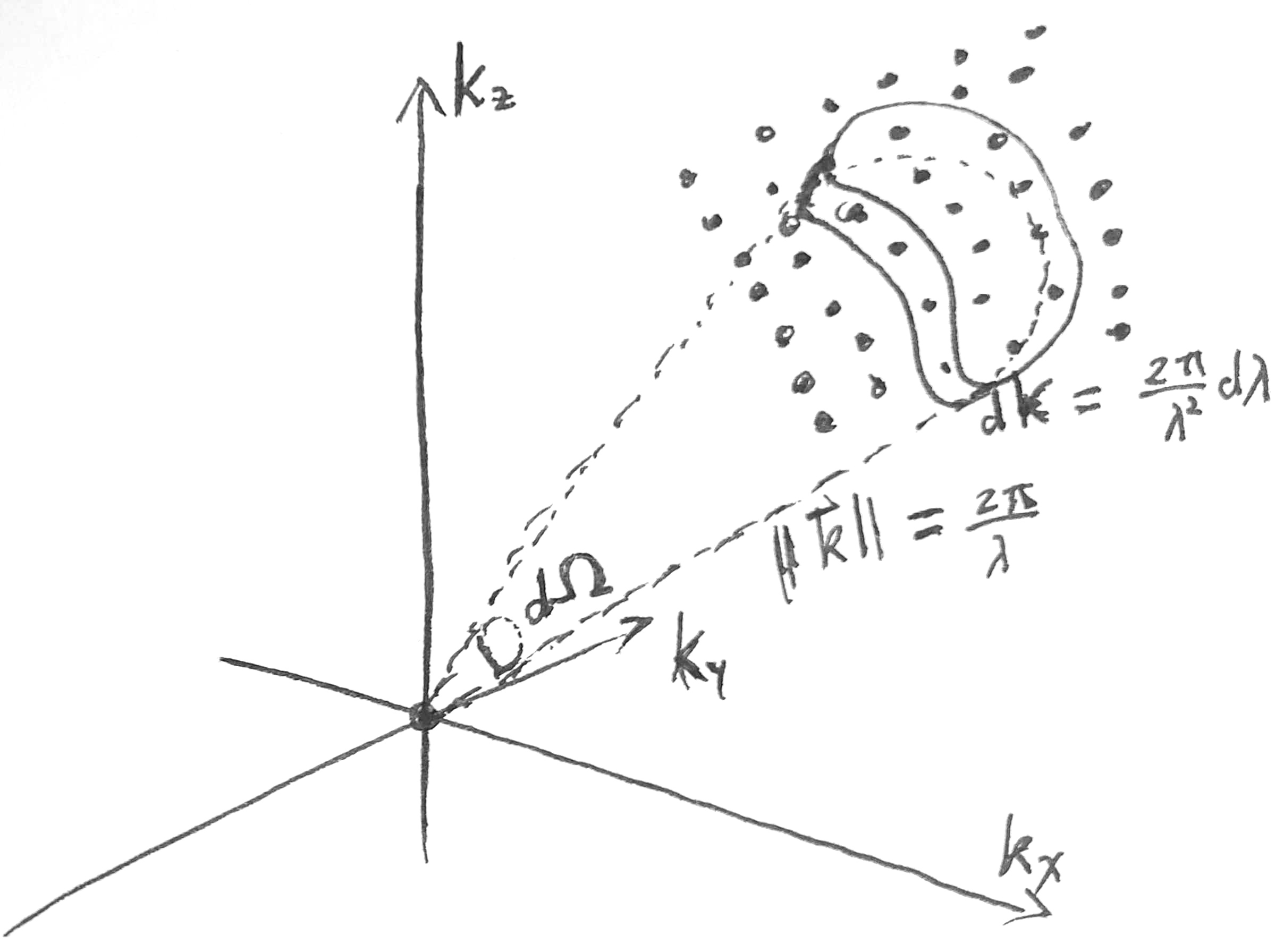

Consider a hollow cubic box with side lengths \(L\). The box has perfectly reflecting walls. At thermal equilibrium, the box is full of standing electromagnetic waves. Each standing EM wave has form \(\vec E(x, y, z, t) = \vec E_0 \sin(\omega t)\sin(\frac{n_x \pi x}{L})\sin(\frac{n_y \pi y}{L})\sin(\frac{n_z \pi z}{L})\), for some positive integers \(n_x, n_y, n_z\). Each wave has wavevectors \(\vec k = (n_x, n_y, n_z) \frac{\pi}{L}\). If we draw a region of volume \(\delta K\) in the space of wavevectors, then the region would contain about \(\delta K \frac{L^3}{\pi^3}\) valid wavevectors. Thus, we say that the wavevector space is \([0, +\infty)^3\), and has density of states \(\frac{L^3}{\pi^3}\). We can picture it as \([0, +\infty)^3\) with a rectangular grid of points being the valid wavevectors, such that the numerical density of such grid points is \(\frac{L^3}{\pi^3}\).

At this point, we depart from Planck’s derivation. Instead of considering standing waves in a perfectly reflecting chamber, we consider planar waves in a chamber with periodic boundaries. That is, we imagine that we have opened 6 portals, so that its top wall is “ported” to the bottom, etc. In this case, the planar waves have valid wavevectors \(\vec k = (n_x, n_y, n_z) \frac{2\pi}{L}\).

Wait, the numerical density of grid points is now just \(\frac{L^3}{8\pi^3}\), which is \(1/8\) of what we found previously?

Yes, indeed, but it will work out correctly, because whereas the density of states has dropped to just \(1/8\) of previously, the state space has increased \(8\times\), from \([0, +\infty)^3\) to \(\mathbb{R}^3\).

Now, we need to allow two states at each valid wavevector, to account for polarization.

At this point, we have decomposed the state space into a composition of oscillators. Because there is no interaction between these oscillators,6 it remains to calculate the partition function of each oscillator.

6 That is, two photons do not interact, except when the energy levels are so high that you would need a quantum field theorist to know what is going on.

Whereas modern spiritualists talk of electromagnetic fields and quantum vibrations, a century ago they talked of subatomic structures and ether vibrations. Light resembles ghosts and spirits in that they are massless, untouchable, moving very fast, bright, and vaguely associated with good feelings. During the 19th century, the best scientific theory for light, that of ether theory, became the foundation of many spiritualist world systems. (Asprem 2011) The connection of electromagnetism with animal magnetism did not help.

Planck considered an ensemble of \(N\) oscillators, all at the same wavevector and polarization. If they have average energy \(\braket{E}\), the question is to find the total entropy for the whole system, which, when divided by \(N\), should yield the entropy of a single oscillator. Here he used the celebrated quantum hypothesis: The energy levels are divided into integer levels of \(nh\nu\), where \(n = 0, 1, 2, \dots\). By the stars and bars argument, there are \(\binom{N + M-1}{M}\) ways do distribute these energy-quanta between these oscillators, where \(M = \frac{N\braket{E}}{h\nu}\).

\[S = \frac 1N \ln \binom{N+M-1}{M} \underbrace{\approx}_{\text{Stirling}} (1 + a) \ln (1+a) - a \ln a, \quad a = \frac{\braket{E}}{h\nu}\]

Given the entropy function, he then matched \(\braket{E}\) to temperature \(\beta\) by the equality \(\beta = \partial_{\braket{E}} S\), giving

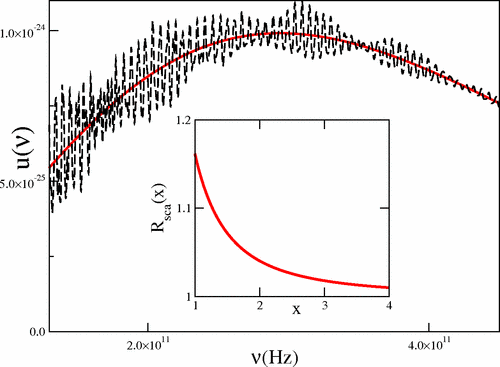

\[ \braket{E} = \left(\frac{h\nu}{e^{\beta h\nu}-1}\right) \]

Now, in any direction \(\hat k\), for any wavelength interval \([\lambda, \lambda + d\lambda]\), and any span of solid angle \(d\Omega\), compute its corresponding wavevector space volume \(k dkd\Omega = \frac{4\pi^2}{\lambda^3} d\lambda d\Omega\), and multiply that by the density of states and \(\braket{E}\), yielding the blackbody radiation law.

The details are found in any textbook. I will just point out some interesting facts typically passed over in textbooks.

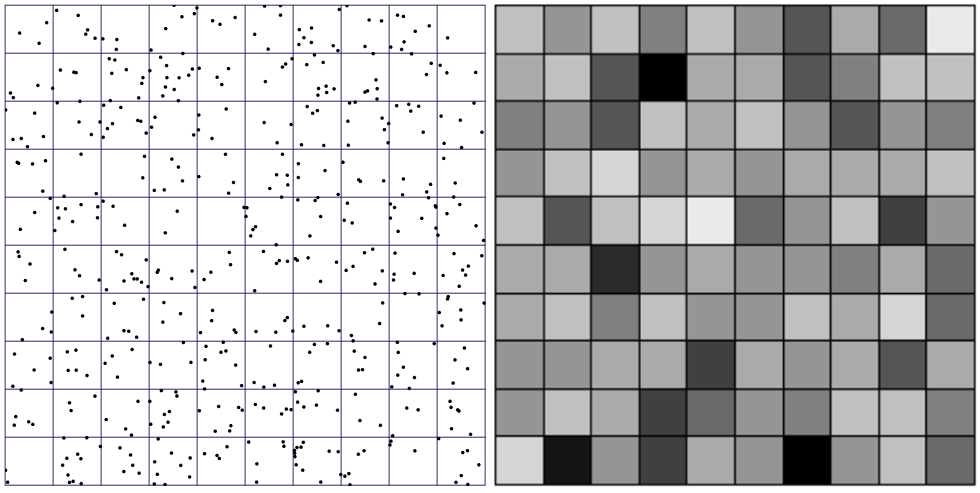

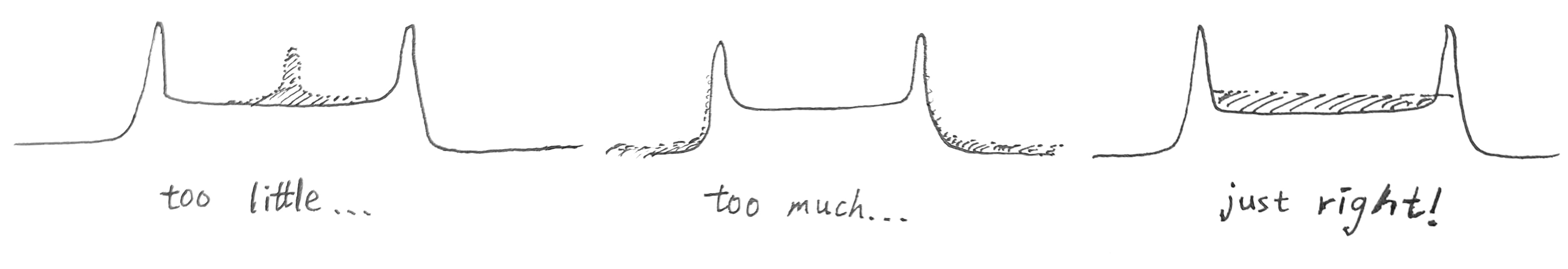

In the above derivation of the blackbody radiation law, the allowed wavevectors \(\vec k\) are like a dust cloud in the space of possible wavevectors. The cloud is assumed to be dense and even, so that the number of states inside a chunk of volume \(\Delta V\) is roughly \(\Delta V \rho\), where \(\rho\) is the average density of states. This only works if \(\Delta V \rho \gg 1\), or in other words, \(\frac{\Delta \lambda}{\lambda} \gg \frac{\lambda^3}{L^3}\). Thus, when the chamber is small, or when temperature is low enough that the spectral peak is close to the zero, then the murky cloud of wavevectors resolves into individual little specks, and we have deviation from blackbody radiation law.

In this limit, the precise shape of the chamber becomes important, since the precise chamber shape has a strong effect on long-wavelength (low-temperature) resonant modes. A tiny cube and a tiny cylinder have different blackbody spectra. See (Reiser and Schächter 2013) for a literature review.

According to Kirchhoff’s law of thermal radiation, a chunk of matter is exactly as absorptive as it is emissive. A blackbody absorbs all light, and conversely it emits light at the maximal level. A white body absorbs no light, and conversely it does not emit light. This can be understood as a consequence of the second law: If a body emits more light than it absorbs, then it would spontaneously get colder when placed inside a blackbody radiation chamber.

However, much more can be said than this. Not only is it exactly as absorptive as it is emissive, it is as absorptive as it is emissive at any angle, at any wavelength, and any polarization. So for example, if a piece of leaf is not absorptive when viewed from an angle, at the green light wavelength, of clockwise polarization, then it is not emissive under the same angle, wavelength, polarization.

Why is that? The standard argument (Reif 1998, chap. 9.15) uses a time-reversal argument, but I like to think of it as yet more instances of protecting the second law. If you look inside a blackbody radiation chamber, you would see a maximal entropy state. Light rushes in all directions equally, at all polarizations equally, and the energy is distributed optimally across the spectrum to maximize entropy (because \(\beta\) is constant across the whole spectrum). If we have a material that takes in blue light and outputs green light, then it would spontaneously decrease entropy. Similarly, if it can absorb vertically polarized light to emit diagonally polarized light, it would also spontaneously decrease entropy, etc.

A box full of blackbody radiation is also called a photon gas. The photon gas is sometimes treated as the limit of “ultrarelativistic gas”. Start with the relativistic energy function \(E = \sqrt{m^2 c^4 + \|p\|^2 c^2}\), derive its Boltzmann distribution \(\rho(q, p) \propto e^{-\beta \sqrt{m^2 c^4 + \|p\|^2 c^2}}\), then take the \(m \to 0\) limit. This gives some correct results, such as the \(U = 3PV\).

However, accounting for the entropy of photon gas, as well as deriving the blackbody radiation, hinges critically on the photon quantization \(E = h\nu, 2h\nu, \dots\). I guess it can be done correctly by relativistic quantum mechanics, but it is of course beyond the world of classical mechanics.

The point is that the blackbody radiation law is not about a blackbody. Instead, it is about photons in vacuum. We could have taken a perfectly reflecting mirror box (or if you are fancy, a three-dimensional torus) and injected it with a gas of \(400 \;\mathrm{nm}\) photons with zero total momentum and angular momentum. Since no conservation laws are constraining, the system will equilibrate to its maximal entropy state, which is the blackbody radiation spectrum. We simply need to wait a few eternities for photon-photon interactions to do the job. Thus, the precise material of the chamber does not matter, and charcoal is merely a better catalyst than titanium oxide.

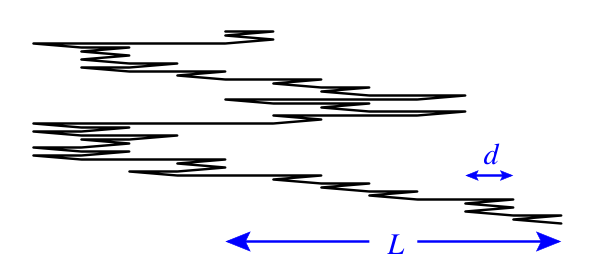

Rubber bands

It turns out that rubber bands, and generally things made of long polymers, contract when heated up, instead of expanding. This is the Gough–Joule effect. Roughly speaking, this is because elasticity in long polymer material (like rubber) is very different from elasticity in short molecule solids (like copper and ice). In rubber, elasticity is an entropic force, while in copper, it is an electrostatic force caused by attraction between molecules.

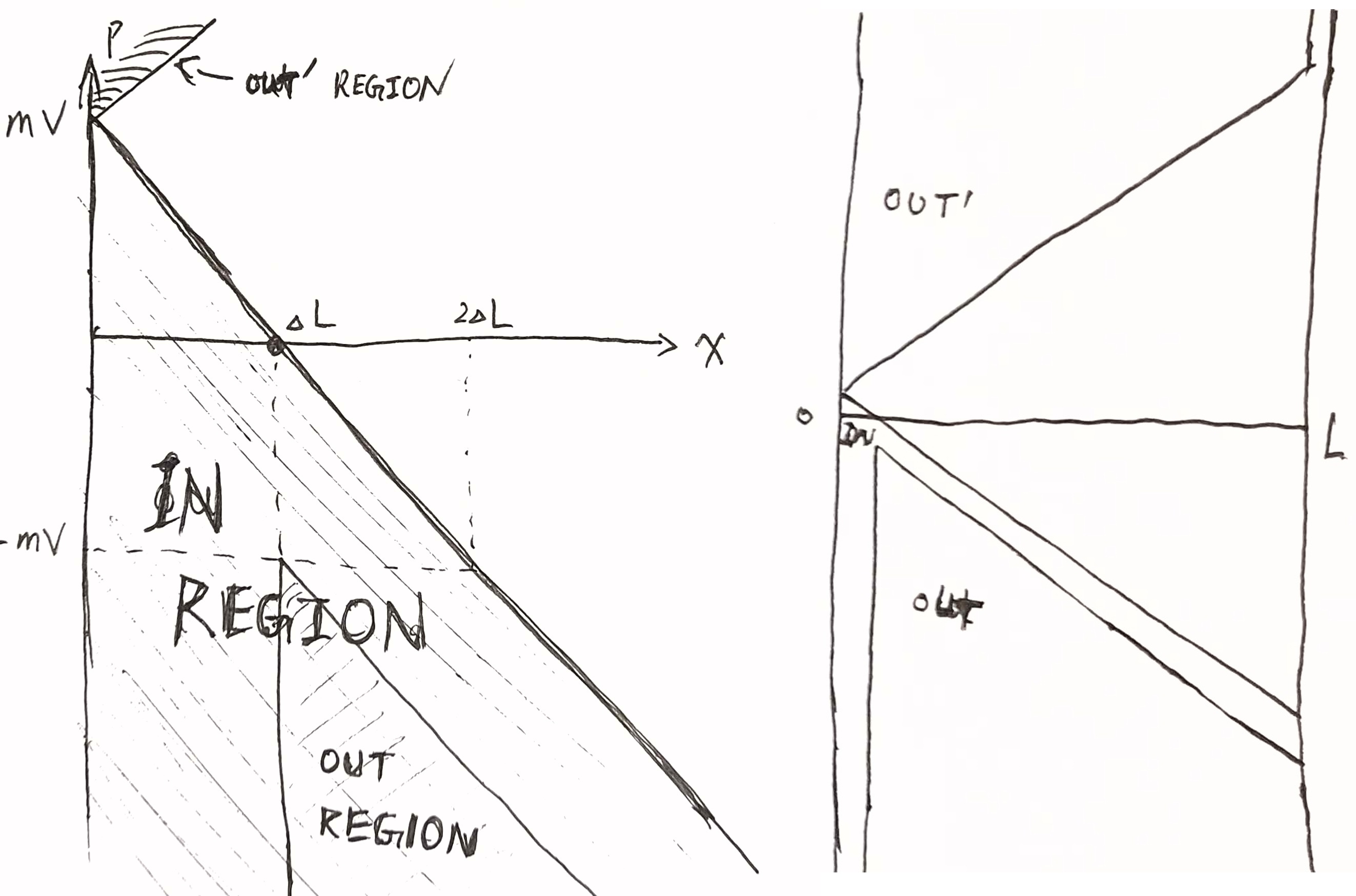

To model a rubber band, consider a long chain molecule with \(N\) joints. Each joint can go forward or backward, with equal energy. Each link between two joints has length \(d\). The total length of the system is \(L\).

Direct argument (microcanonical)

The entropy of the system, conditional on \(L\), is

\[S = \ln \binom{N}{\frac{N + L/d}{2}}\]

The thermodynamic equation for the rubber band is

\[0 = TdS + FdL\]

because the internal energy of the rubber band is constant, no matter how the joint turns.

Therefore, the elastic force is

\[F = -T \partial_L S \approx -T \frac{S(L+2d) - S(L)}{2d} \approx \frac{T}{2d }\ln\frac{Nd+L}{Nd - L}\]

When \(Nd \gg L\), that is, we have not stretched it close to the breaking point, the elastic force is

\[F \approx \frac{TL}{Nd^2} = k L\]

where \(k = \frac{T}{Nd^2}\) is the elastic constant, proportional to temperature.

Why does the rubber band stiffen when temperature rise? We can interpret it as follows. When we place the rubber band in a chamber of hot air, the air particles would often collide with the links in the rubber band, flipping it. When there are more links going to the right than the left, then the air particles would tend to flip the links to the left, decreasing \(L\), and conversely. The net force is zero only when there are an equal number of links going either way, which is when \(L = 0\).

Via free entropy (canonical)

Because the rubber band has \(dE = TdS + FdL\), the corresponding free entropy is \(S - \beta \braket{E} + \beta F \braket{L}\). Under the canonical distribution, that free entropy is maximized, meaning that \(\rho(x) \propto e^{\beta FL(x)}\) where \(x\) is a microstate of the rubber band (i.e. the precise position of each link), and \(L(x)\) is the corresponding length (macrostate).

The trick of using the free entropy is that it decomposes the entire rubber band into atomic individuals. Like how opening an energy market converts consumers trying to coordinate their energy use into consumers each individually buying and selling energy, and thus simplifying the calculation problem. Like how Laplace’s devil allows you to calculate the optimal way to schedule your day. Microcanonical ensembles are true, but canonical ensembles are almost just as true, and much easier to use. The idea is that the canonical ensemble and the microcanonical ensemble are essentially the same because the fluctuation is so tiny.

Back to the rubber band. Each individual link in the rubber band now is freed from the collective responsibility of reaching exactly length \(L\). It is now an atomized individual, maximizing its own free entropy \(S - \beta \braket{E} + \beta F \braket{L}\). Let \(p\) be its probability of going up, then its free entropy is

\[ \underbrace{-p\ln p - (1-p) \ln(1-p) }_{\text{$S$}} - 0 + \beta F (d p - d(1-p)) \]

This is maximized by the Boltzmann distribution \(p = \frac{e^{\beta F 2d}}{1+e^{\beta F 2d}}\), with first two moments

\[ \braket{L} = \frac{e^{\beta F 2d} - 1}{e^{\beta F 2d} + 1} d \approx \beta Fd^2, \quad \mathbb{V}[L] = p(1-p)d^2 \approx d^2/4 \]

The total extension of the rubber band has the first two moments

\[ N\braket{L} \approx \beta F Nd^2, \quad N\mathbb{V}[L] \approx Nd^2/4 = \frac{1}{4F\beta} N\braket{L} \]

The first equation is the same as the previous one. The second equation tells us the fluctuation in rubber band length when held under constant force and temperature. For typical conditions like \(F \sim 1 \;\mathrm{N}, T \sim 300 \;\mathrm{K}, N\braket{L} \sim 1 \;\mathrm{m}\), the fluctuation is on the order of \((0.1\;\mathrm{nm})^2\), about one atom’s diameter. So we see that the difference between the canonical and the microcanonical ensemble are indeed too tiny to speak of.

Combinatorial examples

Burning the library of Babel

The universe (which others call the Library) is composed of an indefinite, perhaps infinite number of hexagonal galleries… always the same: 20 bookshelves, 5 to each side, line four of the hexagon’s six sides… each bookshelf holds 32 books identical in format; each book contains 410 pages; each page, 40 lines; each line, approximately 80 black letters… punctuation is limited to the comma and the period. Those two marks, the space, and the twenty-two letters of the alphabet are the 25 sufficient symbols…

The Library of Babel, Jorge Luis Borges

Like the artist M. C. Escher, the writer J. L. Borges is a favorite of scientists, for his stories that construct precise worlds like elegant thought experiments. The Library of Babel is a thought experiment on combinatorics and entropy. In the universe, there are only books. Each book contains

\[410 \;\mathrm{page} \times 40\;\mathrm{line/page} \times 80 \;\mathrm{symbol/line} = 1.3\times 10^6\;\mathrm{symbol}\]

Suppose the books are uniformly random sequences made of 25 symbols, then each symbol contains \(\ln 25\) amount of entropy, and each book contains \(1.3\times 10^6\ln 25 = 4.2 \times 10^6\). Now, consider another library of Babel, but this one consists of books filled with white space, so each book has zero entropy. Then, we can take one empty book and “burn” it into a uniformly random book, recovering \(4.2 \times 10^6 k_B T\) free energy per book burned. At ambient temperature \(300\;\mathrm{K}\), that is just \(1.4 \times 10^{-14}\;\mathrm{J}\) per book. Book-burning isn’t going to keep the librarians warm.