Connectionism died in the 60s from technical limits to scaling, then resurrected in the 80s after backprop allowed scaling. The Minsky–Papert anti-scaling hypothesis explained, psychoanalyzed, and buried.

AI

history

Author

Yuxi Liu

Published

January 1, 2024

Modified

January 1, 2024

Abstract

During the 1950s and 1960s, the connectionist and symbolic schools of artificial intelligence competed for researcher allegiance and funding, with the symbolic school winning by 1970. This “first neural network winter” lasted until the rise of the connectionist school in the 1980s.

It is often stated that neural networks were killed off by the 1969 publication of Perceptrons by Marvin Minsky and Seymour Papert. This story is wrong on multiple counts:

Minsky and Papert had been working towards killing off neural networks since around 1965 by speaking at conferences and circulating preprints.

The book’s mathematical content studies only the behavior of a single perceptron. It does not study multilayer perceptrons.

By 1969, most researchers had already left connectionism, frustrated by the lack of progress, as they did not develop backpropagation or deep learning. The last holdout, Frank Rosenblatt, died in 1971.

The book achieved its mythical status as the “neural network killer” by its opportune timing, appearing just as the symbolic school achieved dominance. Since the connectionist school was almost empty, it faced little objection at its publication, creating the illusion that it caused the closure of the perceptron controversy.

In the 1980s, the perceptron controversy reopened with the rise of connectionism. This time the controversy was bypassed without closure. Connectionists demonstrated that backpropagation with MLP bypassed most of the objections from Perceptrons. Minsky and Papert objected that the lessons of Perceptrons still applied, but their objections and lessons had by then become irrelevant.

Minsky and Papert were possibly the most consistent critics of the scaling hypothesis, arguing over decades that neural networks cannot scale beyond mere toy problems. Minsky was motivated by mathematical certainty, as backpropagation–MLP, unlike a single perceptron, cannot be proven to find global optima or to accomplish any task efficiently. He rejected all experimental data with large MLPs as theory-less data that cannot be extrapolated. Papert was motivated by social justice and epistemological equality. He rejected all scalable uniform architectures, like backpropagation–MLP, as threats to his vision of a society with different but equally valid ways of knowing.

As of 2019, essentially all predictions by Minsky and Papert, concerning the non-scalability of neural networks, had been disproven.

The enigma of Marvin Minsky

In a 1993 interview, Robert Hecht-Nielsen recounted an encounter between Marvin Minsky and the neural network community in the late 1980s1:

1 This was corroborated by a contemporary news report on the International Conference on Neural Networks of 1988:

Minsky who has been criticized by many for the conclusions he and Papert make in ‘Perceptrons,’ opened his defense with the line ‘Everybody seems to think I’m the devil.’ Then he made the statement, ‘I was wrong about Dreyfus too, but I haven’t admitted it yet,’ which brought another round of applause. (quoted in (Olazaran 1991, 285))

Minsky had gone to the same New York “science” high school as Frank Rosenblatt, a Cornell psychology Ph.D. whose “perceptron” neural network pattern recognition machine was receiving significant media attention. The wall-to-wall media coverage of Rosenblatt and his machine irked Minsky. One reason was that although Rosenblatt’s training was in “soft science,” his perceptron work was quite mathematical and quite sound—turf that Minsky, with his “hard science” Princeton mathematics Ph.D., didn’t feel Rosenblatt belonged on. Perhaps an even greater problem was the fact that the heart of the perceptron machine was a clever motor-driven potentiometer adaptive element that had been pioneered in the world’s first neurocomputer, the “SNARC”, which had been designed and built by Minsky several years earlier! In some ways, Minsky’s early career was like that of Darth Vader. He started out as one of the earliest pioneers in neural networks but was then turned to the dark side of the force (AI) and became the strongest and most effective foe of his original community. This view of his career history is not unknown to him. When he was invited to give the keynote address at a large neural network conference in the late 1980s to an absolutely rapt audience, he began with the words: “I am not the Devil!” (Rosenfeld and Anderson 2000, 303–5)

However, it appears that he later changed his mind. As recounted by Terry Sejnowski:

I was invited to attend the 2006 Dartmouth Artificial Intelligence Conference, “AI@50,” a look back at the seminal 1956 Summer Research Project on artificial intelligence held at Dartmouth and a look forward to the future of artificial intelligence. … These success stories had a common trajectory. In the past, computers were slow and only able to explore toy models with just a few parameters. But these toy models generalized poorly to real-world data. When abundant data were available and computers were much faster, it became possible to create more complex statistical models and to extract more features and relationships between the features.

In his summary talk at the end of the conference [The AI@50 conference (2006)], Marvin Minsky started out by saying how disappointed he was both by the talks and by where AI was going. He explained why: “You’re not working on the problem of general intelligence. You’re just working on applications.” …

There was a banquet on the last day of AI@50. At the end of the dinner, the five returning members of the 1956 Dartmouth Summer Research Project on Artificial Intelligence made brief remarks about the conference and the future of AI. In the question and answer period, I stood up and, turning to Minsky, said: “There is a belief in the neural network community that you are the devil who was responsible for the neural network winter in the 1970s. Are you the devil?” Minsky launched into a tirade about how we didn’t understand the mathematical limitations of our networks. I interrupted him—“Dr. Minsky, I asked you a yes or no question. Are you, or are you not, the devil?” He hesitated for a moment, then shouted out, “Yes, I am the devil!” (Sejnowski 2018, 256–58)

What are we to make of the enigma of Minsky? Was he the devil or not?

The intellectual history of Minsky

During his undergraduate years, Minsky was deeply impressed by Andrew Gleason,2 and decided to work on pure mathematics, resulting in his 1951 undergraduate thesis A Generalization of Kakutani’s Fixed-Point Theorem, which extended an obscure fixed-point theorem of Kakutani – not the famous version, as Kakutani proved more than one fixed-point theorem.

2

I asked Gleason how he was going to solve it. Gleason said he had a plan that consisted of three steps, each of which he thought would take him three years to work out. Our conversation must have taken place in 1947, when I was a sophomore. Well, the solution took him only about five more years … Gleason made me realize for the first time that mathematics was a landscape with discernible canyons and mountain passes, and things like that. In high school, I had seen mathematics simply as a bunch of skills that were fun to master – but I had never thought of it as a journey and a universe to explore. No one else I knew at that time had that vision, either. (Bernstein 1981)

Theorem 1 (Kakutani’s fixed point theorem on the sphere) If \(f\) is an \(\mathbb{R}\)-valued continuous function on the unit sphere in \(\mathbb{R}^3\), then for any side length \(r \in (0, \sqrt{3})\), there exist \(x_1, x_2, x_3\) on the sphere forming an equilateral triangle with side length \(r\), such that \(f(x_1) = f(x_2) = f(x_3)\).

Equivalently, if \(x_1, x_2, x_3\) form an equilateral triangle on the unit sphere, then there exists a rotation \(T\) such that \(f(T(x_1)) = f(T(x_2)) = f(T(x_3))\).

Using knot theory, Minsky proved an extension where \(x_1, x_2, x_3\) are three points of a square or a regular pentagon (M. Minsky 2011). The manuscript “disappeared” (M. L. Minsky n.d.).

I wrote it up and gave it to Gleason. He read it and said, ‘You are a mathematician.’ Later, I showed the proof to Freeman Dyson, at the Institute for Advanced Study, and he amazed me with a proof (Dyson 1951) that there must be at least one square that has the same temperature at all four vertices. He had found somewhere in my proof a final remnant of unused logic. (Bernstein 1981)

He then became interested in neural networks and reinforcement learning, and constructed a very simple electromechanical machine called SNARC.3 The SNARC machine is a recurrent neural network that performs reinforcement learning by the Hebbian learning rule. It simulates a mouse running around a maze, while the operator watches an indicator light showing the mouse. The operator can press a button as a reward signal, which would cause an electric motor to turn a chain. The chain is clutched to rheostats that connect the neurons, with the stretch of the clutch being proportional to the charge in a capacitor. During the operation of the neural network, the capacitor charges up if there is neural co-activation on the connection, and decays naturally, thus serving as a short-term memory. When the reward button is pressed, the clutches turn by an amount proportional to the co-activation of neural connections, thereby completing the Hebbian learning.

3 It was published as (M. Minsky 1952), but the document is not available online, and I could only piece together a possible reconstruction from the fragments of information.
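The learning scheme described above resembles what would now be called reward-modulated Hebbian learning with a decaying trace. The following is a minimal Python sketch of that reading, not a reconstruction of the actual SNARC circuit: the function names, the decay factor, and the learning rate are all hypothetical, and co-activation is simplified to two neurons being active in the same time step.

```python
# Sketch of reward-gated Hebbian learning with a decaying trace.
# All names and constants are illustrative assumptions, not SNARC's real parameters.

def update_traces(traces, active, decay=0.9):
    """One time step: every trace leaks, then charges where both neurons fired."""
    n = len(traces)
    for i in range(n):
        for j in range(n):
            traces[i][j] *= decay              # the "capacitor" decays naturally
            if i != j and active[i] and active[j]:
                traces[i][j] += 1.0            # co-activation charges it up


def apply_reward(weights, traces, rate=0.1):
    """Reward button: each connection changes in proportion to its current trace."""
    n = len(weights)
    for i in range(n):
        for j in range(n):
            weights[i][j] += rate * traces[i][j]


if __name__ == "__main__":
    n = 3
    weights = [[0.0] * n for _ in range(n)]
    traces = [[0.0] * n for _ in range(n)]
    update_traces(traces, [True, True, False])    # neurons 0 and 1 fire together
    update_traces(traces, [False, False, False])  # a quiet step: the trace decays
    apply_reward(weights, traces)                 # the operator presses the button
    print(weights)
```

Because the trace decays, a reward that arrives several steps after a co-activation strengthens that connection less than one that arrives immediately, which is the sense in which the capacitor acts as short-term memory.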

Minsky was impressed by how well it worked. The machine was designed to simulate one mouse, but by some kind of error it simulated multiple mice, and yet it still worked.

The rats actually interacted with one another. If one of them found a good path, the others would tend to follow it. We sort of quit science for a while to watch the machine. … In those days, even a radio set with twenty tubes tended to fail a lot. I don’t think we ever debugged our machine completely, but that didn’t matter. By having this crazy random design, it was almost sure to work, no matter how you built it. (Bernstein 1981)

This was the last we saw of Minsky’s work with random neural networks. He had crossed the Rubicon, away from the land of brute reason and into the land of genuine insight.

I had the naïve idea that if one could build a big enough network, with enough memory loops, it might get lucky and acquire the ability to envision things in its head. … Even today, I still get letters from young students who say, ‘Why are you people trying to program intelligence? Why don’t you try to find a way to build a nervous system that will just spontaneously create it?’ Finally, I decided that either this was a bad idea or it would take thousands or millions of neurons to make it work, and I couldn’t afford to try to build a machine like that. (Bernstein 1981)

For his PhD thesis, Minsky worked on the mathematical theory of McCulloch–Pitts neural networks. In style, it was a fine piece of classical mathematics (M. L. Minsky 1954). Minsky would go on to write (M. Minsky 1967, chap. 3), still the best introduction to McCulloch–Pitts neural networks.

Minsky’s doctoral dissertation in mathematics from Princeton in 1954 was a theoretical and experimental study of computing with neural networks. He had even built small networks from electronic parts to see how they behaved. The story I heard when I was a graduate student at Princeton in physics was that there wasn’t anyone in the Mathematics Department who was qualified to assess his dissertation, so they sent it to the mathematicians at the Institute for Advanced Study in Princeton who, it was said, talked to God. The reply that came back was, “If this isn’t mathematics today, someday it will be,” which was good enough to earn Minsky his PhD. (Sejnowski 2018, 259)

… Sussman told Minsky that he was using a certain randomizing technique in his program because he didn’t want the machine to have any preconceived notions. Minsky said, “Well, it has them, it’s just that you don’t know what they are.” It was the most profound thing Gerry Sussman had ever heard. And Minsky continued, telling him that the world is built a certain way, and the most important thing we can do with the world is avoid randomness, and figure out ways by which things can be planned. (Levy 2010, 110–11)

In the days when Sussman was a novice, Minsky once came to him as he sat hacking at the PDP-6. “What are you doing?”, asked Minsky. “I am training a randomly wired neural net to play Tic-Tac-Toe” Sussman replied. “Why is the net wired randomly?”, asked Minsky. “I do not want it to have any preconceptions of how to play”, Sussman said. Minsky then shut his eyes. “Why do you close your eyes?”, Sussman asked his teacher. “So that the room will be empty.” At that moment, Sussman was enlightened.

As for Sussman, I knew him for two things: writing the SICP book, and being the coordinator of the infamous summer vision project that was to construct “a significant part of a visual system” in a single summer, using only undergraduate student researchers. A brief read of his “reading list” shows where his loyalties lie: firmly in the school of neats.

(Sejnowski 2018, 28) recounts the background of the summer vision project:

In the 1960s, the MIT AI Lab received a large grant from a military research agency to build a robot that could play Ping-Pong. I once heard a story that the principal investigator forgot to ask for money in the grant proposal to build a vision system for the robot, so he assigned the problem to a graduate student as a summer project. I once asked Marvin Minsky whether the story was true. He snapped back that I had it wrong: “We assigned the problem to undergraduate students.”

After rejecting neural networks, Minsky became a leading researcher in AI. His style of AI is typically described as “symbolic AI”, although a more accurate description would be The Society of Mind (SoM). Minsky developed the SoM in the 1960s and 1970s with his long-time collaborator, Seymour Papert, inspired by their difficulty with building robots, and published the definitive account in (M. Minsky 1988). The SoM thesis states that “any brain, machine, or other thing that has a mind must be composed of smaller things that cannot think at all”.

Stated in this way, it seems patently compatible with neural networks, but only on the surface. Minsky concretely described how he expected a Society of Mind to work, based on his attempts at making Builder, a robot that can play with blocks:

Both my collaborator, Seymour Papert, and I had long desired to combine a mechanical hand, a television eye, and a computer into a robot that could build with children’s building-blocks. It took several years for us and our students to develop Move, See, Grasp, and hundreds of other little programs we needed to make a working Builder-agency. I like to think that this project gave us glimpses of what happens inside certain parts of children’s minds when they learn to “play” with simple toys. The project left us wondering if even a thousand microskills would be enough to enable a child to fill a pail with sand. It was this body of experience, more than anything we’d learned about psychology, that led us to many ideas about societies of mind.

To do those first experiments, we had to build a mechanical Hand, equipped with sensors for pressure and touch at its fingertips. Then we had to interface a television camera with our computer and write programs with which that Eye could discern the edges of the building-blocks. It also had to recognize the Hand itself. When those programs didn’t work so well, we added more programs that used the fingers’ feeling-sense to verify that things were where they visually seemed to be. Yet other programs were needed to enable the computer to move the Hand from place to place while using the Eye to see that there was nothing in its way. We also had to write higher-level programs that the robot could use for planning what to do—and still more programs to make sure that those plans were actually carried out. To make this all work reliably, we needed programs to verify at every step (again by using Eye and Hand) that what had been planned inside the mind did actually take place outside—or else to correct the mistakes that occurred. … Thousands and, perhaps, millions of little processes must be involved in how we anticipate, imagine, plan, predict, and prevent—and yet all this proceeds so automatically that we regard it as “ordinary common sense.” (M. Minsky 1988, sec. 2.5)

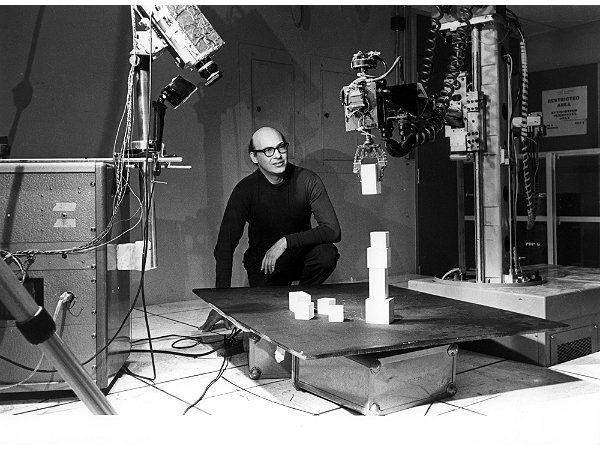

Marvin Minsky and Builder the robot. It’s hard to tell who is having more fun here.

From the concrete description, as well as the many attractive illustrations in the book, it is clear that Minsky intended the “Society of Mind” to be a uniform computing substrate (silicon or carbon) upon which millions of little symbolic programs are running, each capable of performing some specific task, each describable by a distinct and small piece of symbolic program. They cannot be mere perceptrons in a uniform block of neural network, or mere logic gates in a uniform block of CPU.

In his 1988 book, Minsky described dozens of these heterogeneous components he thought might make up a Society of Mind. However, the precise details are not relevant,5 as he freely admitted that they are conjectured. He was only adamant about the overarching scheme: heterogeneous little separated components, not a homogeneous big connected piece.

5 The conjectured components included “K-lines”, “nomes”, “nemes”, “frames”, “frame-arrays”, etc. Although Minsky meant for this SoM project to last a very long time, building up to general intelligence brick by brick, my literature search shows that there had been no new development since the 2000s, so the overview (Singh 2003) still represents the SOTA of SoM.

Perhaps a modern reincarnation of such an idea would be the dream of Internet agents operating in a digital economy, populated by agents performing simple tasks like spam filtering, listening for the price of petroleum, etc. Some agents would interface with reality, while others would interface with agents. Some agents are organized at a higher level into DAOs, created by a small committee of simple “manager agents” serving as the interface and coordinators for other agents. DAOs can interface with other DAOs through little speaker-agents, which consist of a simple text filter for the torrent of information and then outsource to text-weaving agents to compose the actual messages they send out.

Seymour Papert

Seymour Papert, the long-time collaborator of Minsky, was the second author of Perceptrons. To unlock the enigma of Minsky, we must look into Papert’s past as well.

In 1958, after earning a doctorate in mathematics, he met Jean Piaget and became his pupil for four years. This experience had a formative effect on Papert. Piaget’s work was an important influence on the constructivist philosophy of education, and Papert would go on to bring constructivism from books to classrooms. He was particularly hopeful that computers could realize the constructivist dream of unlocking the kaleidoscopic creativity that a child can construct.

The main theme of Jean Piaget’s work was developmental psychology – how children’s understanding of the world changes as they grow up. What goes on in their mind as they progressively understand that things fall down, what dogs are, and that solid steel sinks but hollow steel might float? Piaget discovered that children did not simply start with a blank sheet of paper and gradually fill in sketchy details of the true model. Instead they constructed little models of small facets of reality that would be modified or completely replaced as they encounter new phenomena that their old models cannot explain. In this way, Piaget claimed that children are “little scientists”.

A small example illustrates the idea. When children see that a leaf floats on water, but a stone sinks, they add a rule “Soft things float, while hard things sink.” Then, they see that a hard plastic boat floats too, so they add a rule “Except hard and light things also float.” Then, they see that a large boat also floats, so they rewrite the entire model to “Flat-bottomed things float, while small-bottomed things sink.” And so on.

There are conservative and radical ways of using Piaget’s research for pedagogy. The conservative approach involves studying how children construct their scientific theories and identifying a sequence of evidence to present to these young scientists so they can quickly reach scientific orthodoxy. For example, we might show children videos of curling and air hockey, then let them play with an air hockey table, following this with guided exercises, so they race through animism, Aristotelian physics, impetus theory, etc, and end up with Newton’s laws of motion.

The radical way is to decenter the orthodoxy and let a thousand heterodoxies bloom. Why go for the orthodoxy, when the Duhem–Quine thesis tells us that evidence is never enough to constrain us to only one orthodoxy? And given that objectively no theory deserves the high name of “orthodoxy”, how did the scientific “orthodoxy” become dominant? A critical analysis of the history shows that its dominance over Aboriginal and women’s ways of knowing is merely a historical accident due to an alliance with the hegemonic reason of the center over the periphery.

Papert went with the radical way.

After four years of study under Piaget, he arrived at MIT in 1963, and began working with Minsky on various topics, including the Logo Turtle robot, and the Perceptrons book. The computer revolution was starting, and Papert saw computers as a way to bring radical constructivism to children.

In the real world, phenomena are limited by nature, and aspiring little heterodoxy-builders are limited by their ability to construct theories and check their consequences. In the computer world, every child could program and construct “microworlds” from their own theories. Thus, computers would bring constructivism to the classroom. Furthermore, the constructed world inside computers could then flow out to the physical world via robots. This is why Papert worked on both Logo the programming language and Logo the turtle robots. In his words, he intended to fight “instructionism” with “constructionism” by bringing the power of the computer to every child, so that they would grow up to be “bricoleurs”, working with whatever little tool they have available doing whatever is necessary to accomplish little things. This is a vital piece in his overarching project of epistemological pluralism, to liberate heterodoxical ways of knowing (S. A. Papert 1994, chap. 7):

Traditional education codifies what it thinks citizens need to know and sets out to feed children this “fish.” Constructionism is built on the assumption that children will do best by finding (“fishing”) for themselves the specific knowledge they need … it is as well to have good fishing lines, which is why we need computers, and to know the location of rich waters, which is why we need to develop a large range of mathetically rich activities or “microworlds.”

… School math, like the ideology, though not necessarily the practice, of modern science, is based on the ideal of generality – the single, universally correct method that will work for all problems and for all people. Bricolage is a metaphor for the ways of the old-fashioned traveling tinker, the jack-of-all-trades who knocks on the door offering to fix whatever is broken. Faced with a job, the tinker rummages in his bag of assorted tools to find one that will fit the problem at hand and, if one tool does not work for the job, simply tries another without ever being upset in the slightest by the lack of generality. The basic tenets of bricolage as a methodology for intellectual activity are: Use what you’ve got, improvise, make do. And for the true bricoleur, the tools in the bag will have been selected over a long time by a process determined by more than pragmatic utility. These mental tools will be as well worn and comfortable as the physical tools of the traveling tinker; they will give a sense of the familiar, of being at ease with oneself …

Kitchen math provides a clear demonstration of bricolage in its seamless connection with a surrounding ongoing activity that provides the tinker’s bag of tricks and tools. The opposite of bricolage would be to leave the “cooking microworld” for a “math world,” to work the fractions problem using a calculator or, more likely in this case, mental arithmetic. But the practitioner of kitchen math, as a good bricoleur, does not stop cooking and turn to math; on the contrary, the mathematical manipulations of ingredients would be indistinguishable to an outside observer from the culinary manipulations.

… The traditional epistemology is based on the proposition, so closely linked to the medium of text – written and especially printed. Bricolage and concrete thinking always existed but were marginalized in scholarly contexts by the privileged position of text. As we move into the computer age and new and more dynamic media emerge, this will change.

According to Papert, his project is epistemological pluralism, or promoting different ways of knowing (Turkle and Papert 1990):

The diversity of approaches to programming suggests that equal access to even the most basic elements of computation requires accepting the validity of multiple ways of knowing and thinking, an epistemological pluralism. Here we use the word epistemology in a sense closer to Piaget’s than to the philosopher’s. In the traditional usage, the goal of epistemology is to inquire into the nature of knowledge and the conditions of its validity; and only one form of knowledge, the propositional, is taken to be valid. The step taken by Piaget in his definition of epistemologie genetique was to eschew inquiry into the “true” nature of knowledge in favor of a comparative study of the diverse nature of different kinds of knowledge, in his case the kinds encountered in children of different ages. We differ from Piaget on an important point, however. Where he saw diverse forms of knowledge in terms of stages to a finite end point of formal reason, we see different approaches to knowledge as styles, each equally valid on its own terms.

… The development of a new computer culture would require more than environments where there is permission to work with highly personal approaches. It would require a new social construction of the computer, with a new set of intellectual and emotional values more like those applied to harpsichords than hammers. Since, increasingly, computers are the tools people use to write, to design, to play with ideas and shapes and images, they should be addressed with a language that reflects the full range of human experiences and abilities. Changes in this direction would necessitate the reconstruction of our cultural assumptions about formal logic as the “law of thought.” This point brings us full circle to where we began, with the assertion that epistemological pluralism is a necessary condition for a more inclusive computer culture.

The project of epistemological pluralism erupted into public consciousness during the “Science Wars” of the 1990s. Since then, it has stayed rather quiet.

The perceptron controversy

Connectionism, 1945–1970

In the early days, there were several centers of connectionist research, clustered around Frank Rosenblatt, Bernard Widrow, and the Stanford Research Institute (SRI). Out of those centers of research, Minsky and Papert targeted mostly Rosenblatt’s research.

Frank Rosenblatt’s research had three modes: mathematical theory, experiments on bespoke machines, such as the Mark I Perceptron and the Tobermory, and experiments on serial digital computers, usually IBM machines. He was strongly inclined toward building two-layered perceptron machines where the first layer had fixed 0-1 weights, and only the second layer contained real-valued weights learned by the perceptron learning rule. This is precisely the abstract model of the perceptron machine used by Minsky and Papert.
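To make the two-layered model concrete, here is a minimal Python sketch, under several assumptions of my own: the three association units are hand-picked rather than randomly wired, the task (XOR on two inputs) is chosen only for illustration, and the names `FIXED_LAYER`, `association`, `predict`, and `train` are hypothetical. Only the second-layer weights and bias are changed, using the perceptron learning rule.

```python
# Two-layered perceptron sketch: fixed 0-1 first layer, learned second layer.
# The wiring below is an illustrative assumption, not Rosenblatt's actual design.

# Each association unit: (0-1 input weights, firing threshold).
FIXED_LAYER = [
    ([1, 0], 1),  # fires when the first input is on
    ([0, 1], 1),  # fires when the second input is on
    ([1, 1], 2),  # fires only when both inputs are on
]


def association(x):
    """Fixed first layer: map the input to a 0-1 vector of association units."""
    return [
        1 if sum(w * xi for w, xi in zip(mask, x)) >= threshold else 0
        for mask, threshold in FIXED_LAYER
    ]


def predict(weights, bias, x):
    """Second layer: one threshold unit over the association units."""
    phi = association(x)
    return 1 if sum(w * p for w, p in zip(weights, phi)) + bias > 0 else 0


def train(data, epochs=100):
    """Perceptron learning rule applied to the second layer only."""
    weights, bias = [0.0] * len(FIXED_LAYER), 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:
            err = y - predict(weights, bias, x)   # -1, 0, or +1
            if err:
                mistakes += 1
                phi = association(x)
                weights = [w + err * p for w, p in zip(weights, phi)]
                bias += err
        if mistakes == 0:                          # every example is classified correctly
            break
    return weights, bias


XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
weights, bias = train(XOR)
print([predict(weights, bias, x) for x, _ in XOR])
```

What the machine can learn depends entirely on which association units the fixed layer happens to provide. Dropping the third unit leaves a single linear threshold over the two inputs, which cannot represent XOR no matter how long the second layer is trained.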

After 4 years of research, he published a summary of his work in (Rosenblatt 1962). In the book, he noted that there were many problems that the perceptron machines could not learn well. As summarized in (Olazaran 1991, 116–21),

… two stimuli (presented one after another) had to occupy nearly the same area of the retina in order to be classified as similar. … The lack of an adequate preprocessing system meant that a set of association units had to be dedicated to the recognition of each possible object, and this created an excessively large layer of association units in the perceptron. … Other problems were excessive learning time, excessive dependence on external evaluation (supervision), and lack of ability to separate essential parts in a complex environment. Rosenblatt (1962, pp. 309-310) included the ‘figure-ground’ or ‘connectedness’ problem in this last point.

A number of perceptrons analyzed in the preceding chapters have been analyzed in a purely formal way, yielding equations which are not readily translated into numbers. This is particularly true in the case of the four-layer and cross-coupled systems, where the generality of the equations is reflected in the obscurity of their implications. … The previous questions [from the first to the twelfth] are all in the nature of ‘mopping-up’ operations in areas where some degree of performance is known to be possible . . . [However,] the problems of figure-ground separation (or recognition of unity) and topological relation recognition represent new territory, against which few inroads have been made. (Rosenblatt, 1962a, pp. 580-581)

Almost every one of these problems was specifically targeted by “Perceptrons”. For example, the difficulty of testing for “connectedness” was a centerpiece of the entire book; the difficulty of recognizing symmetry was studied by “stratification” and shown to have exponentially growing coefficients (chapter 7); the requirement that stimuli “had to occupy nearly the same area of the retina” was targeted by studies on the limitations of “diameter-limited perceptrons” (chapter 8); the “figure-ground problem” was targeted by showing that “recognition-in-context” has infinite order (Section 6.6); and the complaint that the “generality of the equations is reflected in the obscurity of their implications” was targeted by comments such as “if there is no restriction except for the absence of loops, the monster of vacuous generality once more raises its head” (Section 13.2).

Bernard Widrow worked mostly in collaboration with Marcian Hoff. Their work is detailed in my essay The Backstory of Backpropagation. In short, they first developed a least-mean-square gradient descent method to train a single perceptron, then proceeded to two-layered perceptrons and predictably failed to develop backpropagation, as the activation function is not differentiable. Thereafter, Widrow gave up neural networks until learning of backpropagation in the 1980s.

Widrow and his students developed uses for the Adaline and Madaline. Early applications included, among others, speech and pattern recognition, weather forecasting, and adaptive controls. Work then switched to adaptive filtering and adaptive signal processing after attempts to develop learning rules for networks with multiple adaptive layers were unsuccessful. … After 20 years of research in adaptive signal processing, the work in Widrow’s laboratory has once again returned to neural networks. (Widrow and Lehr 1990)

At the time that Hoff left, about 1965 or 1966, we had already had lots of troubles with neural nets. My enthusiasm had dropped. But we were beginning to have successful adaptive filters, in other words, finding good applications. … So we stopped, basically stopped on neural nets, and began on adaptive antennas very strongly. (Widrow, interview) (Olazaran 1991, 129–30)

SRI had a strong AI program, with luminaries such as Nils Nilsson, Charles Rosen, Duda, and Hart. At first they worked on a system called MINOS, with several iterations. MINOS II in 1962 had 3 layers, but only one was trainable, presumably because they also had no better training method than the perceptron learning rule. Since they used 0-1 activation functions like everyone else, they were frustrated by the same problem of not doing backpropagation, so they switched to symbolic AI techniques around 1965.

In 1973, Duda and Hart published the famous “Duda and Hart” book on pattern classification (Duda and Hart 1973). The book contained two halves. The first half was statistical: Bayes, nearest neighbors, perceptron, clustering, etc. The second half was on scene analysis, a symbolic-AI method for computer vision. Indeed, Minsky and Papert promoted it as superior to perceptron networks.6 Shakey the robot, built between 1966 and 1972, was a tour de force of scene analysis, and it could traverse a room in as little as an hour, avoiding geometric obstacles along the way. Its program was written in LISP, the staple programming language for symbolic AI.

6 It is instructive to compare the first edition with the second, published in 2001 (Duda, Hart, and Stork 2001). It had become almost completely statistical. There were new chapters on neural networks, Boltzmann machines, decision trees, and so on. In contrast, scene analysis was completely removed.

It says something about the obsolescence of scene analysis even in 2001, as Duda and Hart deleted half of their most famous book just to avoid talking about it. In fact, the only mention of “scene analysis” is a condemnation:

Some of the earliest work on three-dimensional object recognition relied on complex grammars which described the relationships of corners and edges, in block structures such arches and towers. It was found that such systems were very brittle; they failed whenever there were errors in feature extraction, due to occlusion and even minor misspecifications of the model. For the most part, then, grammatical methods have been abandoned for object recognition and scene analysis. (Duda, Hart, and Stork 2001, sec. 8.8)

I got very interested for a while in the problem of training more than one layer of weights, and was not able to make very much progress on that problem. … When we stopped the neural net studies at SRI, research money was running out, and we began looking for new ideas. (Nilsson, interview)

About 1965 or 1966 we decided that we were more interested in the other artificial intelligence techniques. … Our group never solved the problem of training more than one layer of weights in an automatic fashion. We never solved that problem. That was most critical. Everybody was aware of that problem. (Rosen, interview) (Olazaran 1991, 131–33)

The perceptron controversy, 1960s

In the middle nineteen-sixties, Papert and Minsky set out to kill the Perceptron, or, at least, to establish its limitations – a task that Minsky felt was a sort of social service they could perform for the artificial-intelligence community. (Bernstein 1981)

Although the book was published only in 1969, close to the end of the perceptron controversy, the influence of Minsky and Papert had been felt years earlier as they attended conferences and disseminated their ideas through talks and preprints, sometimes quarreling on stage. Both sides had their motivations and the conflict was real.

In order to show the extent of the perceptron controversy, it is interesting to repeat some of the rhetorical expressions that were used in it: ‘many remember as great spectator sport the quarrels Minsky and Rosenblatt had;’ ‘Rosenblatt irritated a lot of people;’ ‘Rosenblatt was given to steady and extravagant statements about the performance of his machine;’ ‘Rosenblatt was a press agent’s dream, a real medicine man;’ ‘to hear Rosenblatt tell it, his machine was capable of fantastic things;’ ‘they disparaged everything Rosenblatt did, and most of what ONR did in supporting him;’ ‘a pack of happy bloodhounds;’ ‘Minsky knocked the hell out of our perceptron business;’ ‘Minsky and his crew thought that Rosenblatt’s work was a waste of time, and Minsky certainly thought that our work at SRI was a waste of time;’ ‘Minsky and Papert set out to kill the perceptron, it was a sort of social service they could perform for the AI community;’ ‘there was some hostility;’ ‘we became involved with a somewhat therapeutic compulsion;’ ‘a misconception that would threaten to haunt artificial intelligence;’ ‘the mystique surrounding such machines.’ These rhetorical expressions show the extent (the heat) of the perceptron controversy beyond doubt. (Olazaran 1991, 112)

Charles Rosen of SRI recalls:

Minsky and his crew thought that Frank Rosenblatt’s work was a waste of time, and they certainly thought that our work at SRI was a waste of time. Minsky really didn’t believe in perceptrons, he didn’t think it was the way to go. I know he knocked the hell out of our perceptron business. (Olazaran 1993, 622)

When Perceptrons was finally published in 1969, the connectionist camp was already deserted. The SRI group had switched to symbolic AI projects; Widrow’s group had switched to adapting single perceptrons to adaptive filtering; Frank Rosenblatt was still labouring, isolated, with dwindling funds, until his early death in 1971.7

7 The 1972 reprinting of Perceptrons included a handwritten note, “In memory of Frank Rosenblatt”. This was not an ironic dedication, as Minsky and Rosenblatt were personally friendly, although their research paradigms had been fighting for dominance.

In his last years, Rosenblatt worked on a massive expansion of the Mark I Perceptron, named Tobermory. It would have been a multimodal neural network, capable of seeing and hearing in real time. From the obscurity of Tobermory, we can infer that it was a failure.

The four-layer Tobermory perceptron, designed and built at Cornell University between 1961 and 1967, had 45 S units, 1600 A1 units, 1000 A2 units, and 12 R units. Intended for speech recognition, the input section consisted of 45 band-pass filters attached to 80 difference detectors, with the output of each detector sampled at 20 time intervals. Its 12000 weights consisted of toroidal cores capable of storing over 100 different amplitudes. Each A2 unit could be connected to any of 20 A1 units by means of a wall-sized plugboard. As has happened with so many other projects in the last three decades, by the time Tobermory was completed, the technology of commercial Von Neumann computers had advanced sufficiently to outperform the special-purpose parallel hardware. (Nagy 1991)

(Olazaran 1991) gathered evidence that the publication of Perceptrons was not the cause but a “marker event” for the end of the perceptron controversy and the ascendancy of the symbolic AI school. The book was not the neural network killer, but its epitaph.

Connectionist retrospectives, 1990s

Following the resurgence of connectionism in the 1980s, Anderson and Rosenfeld conducted interviews with prominent connectionists throughout the 1990s, compiled in (Rosenfeld and Anderson 2000). The perceptron controversy is mentioned several times. Reading the interviews gives one a distinct feeling of Rashomon. The same events are recounted from multiple perspectives. I will excerpt some of the most important ones for the essay.

Jack D. Cowan gave an “eyewitness account” of Minsky and Papert’s role in the controversy, before the publication of the book in 1969.

ER: I’m curious about one thing. You said that Minsky and Papert first presented their notions about exclusive-OR in the Perceptron work [in a 1965 conference].

JC: Well, they first presented their notions about the limitations of perceptrons and what they could and couldn’t do.

ER: They hadn’t gotten to exclusive-OR yet?

JC: They had, but that wasn’t a central issue for them. The essential issue was, suppose you had diameter-limited receptive fields in a perceptron, what could it compute?

ER: How was that received at that first conference?

JC: Both of them were quite persuasive speakers, and it was well received. What came across was the fact that you had to put some structure into the perceptron to get it to do anything, but there weren’t a lot of things it could do. The reason was that it didn’t have hidden units. It was clear that without hidden units, nothing important could be done, and they claimed that the problem of programming the hidden units was not solvable. They discouraged a lot of research and that was wrong. … Everywhere there were people working on perceptrons, but they weren’t working hard on them. Then along came Minsky and Papert’s preprints that they sent out long before they published their book. There were preprints circulating in which they demolished Rosenblatt’s claims for the early perceptrons. In those days, things really did damp down. There’s no question that after ’62 there was a quiet period in the field.

ER: Robert Hecht-Nielsen has told me stories that long before Minsky and Papert ever committed anything to a paper that they delivered at a conference or published anywhere, they were going down to ARPA and saying, “You know, this is the wrong way to go. It shouldn’t be a biological model; it should be a logical model.”

JC: I think that’s probably right. In those days they were really quite hostile to neural networks. I can remember having a discussion with Seymour … in the ’60s. We were talking about visual illusions. He felt that they were all higher-level effects that had nothing to do with neural networks as such. They needed a different, a top-down approach to understand. By then he had become a real, a true opponent of neural networks. I think Marvin had the same feelings as well. To some extent, David Marr had those feelings too. After he got to the AI lab, I think he got converted to that way of thinking. Then Tommy Poggio essentially persuaded him otherwise.

I was one of the people suffering from Minsky and Papert’s book [Perceptrons] because it went roughly this way: you start telling somebody about your work, and this visitor or whoever you talk to says, “Don’t you know that this area is dead?” It is something like what we experienced in the pattern recognition society when everything started to be structural and grammatical and semantic and so on. If somebody said, “I’m doing research on the statistical pattern recognition,” then came this remark, “Hey, don’t you know that is a dead idea already?”

Michael A. Arbib thought that the neural network winter was caused not by the book, but by a shift in funding priorities.

Minsky and Papert basically said that if you limit your networks to one layer in depth, then, unless you have very complicated individual neurons, you can’t do very much. This is not too surprising. … Many people see the book as what killed neural nets, but I really don’t think that’s true. I think that the funding priorities, the fashions in computer science departments, had shifted the emphasis away from neural nets to the more symbolic methods of AI by the time the book came out. I think it was more that a younger generation of computer scientists who didn’t know the earlier work may have used the book as justification for sticking with “straight AI” and ignoring neural nets.

Bernard Widrow concurred.

I looked at that book, and I saw that they’d done some serious work here, and there was some good mathematics in this book, but I said, “My God, what a hatchet job.” I was so relieved that they called this thing the perceptron rather than the Adaline because actually what they were mostly talking about was the Adaline, not the perceptron. I felt that they had sufficiently narrowly defined what the perceptron was, that they were able to prove that it could do practically nothing. Long, long, long before that book, I was already successfully adapting Madaline [Madaline = many Adalines], which is a whole bunch of neural elements. All this worry and agony over the limitations of linear separability, which is the main theme of the book, was long overcome.

We had already stopped working on neural nets. As far as I knew, there wasn’t anybody working on neural nets when that book came out. I couldn’t understand what the point of it was, why the hell they did it. But I know how long it takes to write a book. I figured that they must have gotten inspired to write that book really early on to squelch the field, to do what they could to stick pins in the balloon. But by the time the book came out, the field was already gone. There was just about nobody doing it.

James A. Anderson pointed out that during the “winter”, neural networks survived outside of AI.

This was during the period sometimes called the neural network dark ages, after the Minsky and Papert book on perceptrons had dried up most of the funding for neural networks in engineering and computer science. Neural networks continued to be developed by psychologists, however, because they turned out to be effective models in psychology … What happened during the dark ages was that the ideas had moved away from the highly visible areas of big science and technology into areas of science that did not appear in the newspapers.

David Rumelhart had nice things to say about Minsky, with no trace of bitterness. It is understandable as he only started working in neural networks years after the controversy died down.

I always had one course that was like a free course in which I would choose a book of the year and teach out of that. In 1969, I think it was, or maybe ’70, I chose Perceptrons by Minsky and Papert as the book of the year. We then carefully went through it and read it in a group. … This was my most in-depth experience with things related to neural networks, or what were later called neural networks. I was quite interested in Minsky in those days because he also had another book which was called, I think, Semantic Information Processing. That book was a collection, including an article by Ross Quillian. It was a collection of dissertations from his graduate students. In a way, it was Minsky who led me to read about the perceptron more than anybody else.

Regarding Robert Hecht-Nielsen, we have already seen his belief that Minsky was “Darth Vader” and possibly “the Devil”. Unsurprisingly, he was the most embittered, and placed the blame for the 1970s neural network winter squarely on the publication of Perceptrons.

By the mid-1960s, Minsky and his colleagues (notably Seymour Papert) began to take actions designed to root out neural networks and ensure large and, in their view, richly deserved funding for AI research by getting the money currently being “wasted” on neural networks, and more to boot, redirected. They did two things. First, Minsky and Papert began work on a manuscript designed to discredit neural network research. Second, they attended neural network and “bionics” conferences and presented their ever-growing body of mathematical results being compiled in their manuscript to what they later referred to as “the doleful responses” of members of their audiences.

At the heart of this effort was Minsky and Papert’s growing manuscript, which they privately circulated for comments. The technical approach they took in the manuscript was based on a mathematical theorem discovered and proven some years earlier—ironically, by a strong supporter of Rosenblatt—that the perceptron was incapable of ever implementing the “exclusive-OR” [X-OR] logic function. What Minsky and Papert and their colleagues did was elaborate and bulk up this idea to book length by devising many variants of this theorem. Some, such as a theorem showing that single-layer perceptrons, of many varied types, cannot compute topological connectedness, are quite clever. To this technical fabric, they wove in what amounted to a personal attack on Rosenblatt. This was the early form of their crusade manifesto.

Later, on the strong and wise advice of colleagues, they expunged the vitriol. They didn’t quite get it all, as a careful reading will show. They did a complete flip-flop, dedicating the book to Rosenblatt! As their colleagues sensed it would, this apparently “objective” evaluation of perceptrons had a much more powerful impact than the original manuscript with its unseemly personal attack would have. Of course, in reality, the whole thing was intended, from the outset, as a book-length damnation of Rosenblatt’s work and many of its variants in particular, and, by implication, all other neural network research in general.

Minsky and Papert’s book, Perceptrons, worked. The field of neural networks was discredited and destroyed. The book and the associated conference presentations created a new conventional wisdom at DARPA and almost all other research sponsorship organizations that some MIT professors have proven mathematically that neural networks cannot ever do anything interesting. The chilling effect of this episode on neural network research lasted almost twenty years.

The message of Perceptrons

Minsky described how he and Papert felt impelled to write the book:

Both of the present authors (first independently and later together) became involved with a somewhat therapeutic compulsion: to dispel what we feared to be the first shadows of a “holistic” or “Gestalt” misconception that would threaten to haunt the fields of engineering and artificial intelligence as it had earlier haunted biology and psychology. For this, and for a variety of more practical and theoretical goals, we set out to find something about the range and limitations of perceptrons. (M. Minsky and Papert 1988, 20).

The book has become a true classic: one that everybody wants to have read and nobody wants to read. Taking the opposite approach, I have read the book, despite not wanting to read it.

Its content can be neatly divided into a greater and a lesser half. The greater half is a mathematical monograph on which functions can be implemented by a single perceptron with fixed featurizers, and the lesser half is a commentary on the wider implications of the monograph. The impact of the work is precisely reversed: most of the impact comes from the commentary derived from the results, and effectively no impact comes from the mathematical results themselves.

Despite this imbalance, the mathematical work is substantial, and the perceptron controversy turns critically on the pliable interpretation sprouting from the solid structure. Therefore, I have detailed the mathematical content in a separate essay, Reading Perceptrons, to which I refer occasionally to gloss their interpretation.

Minsky and Papert struck back

In the 1980s, neural networks rose again to prominence under the name of “connectionism”, prompting an eventual response from Minsky and Papert. The Perceptrons book was reissued in 1988, with new chapters dedicated to rejecting connectionism. They took the 1986 two-volume work of Parallel Distributed Processing (PDP), especially (Rumelhart, Hinton, and Williams 1985)8, as the representative of connectionism, and made specific objections to them.

In the prologue, they staked their claim thus: Connectionism is a mistake engendered by a new generation of researchers ignorant of history; though the theorems of the Perceptrons book apply to only a single perceptron, the lessons extend to all neural networks. To back up the claim, they made specific technical, historical, and philosophical objections, all with the central goal of showing that homogeneous neural networks cannot scale.

… when we found that little of significance had changed since 1969, when the book was first published, we concluded that it would be more useful to keep the original text (with its corrections of 1972) and add an epilogue, so that the book could still be read in its original form. One reason why progress has been so slow in this field is that researchers unfamiliar with its history have continued to make many of the same mistakes that others have made before them.

… there has been little clear-cut change in the conceptual basis of the field. The issues that give rise to excitement today seem much the same as those that were responsible for previous rounds of excitement. … many contemporary experimenters assume that, because the perceptron networks discussed in this book are not exactly the same as those in use today, these theorems no longer apply. Yet, as we will show in our epilogue, most of the lessons of the theorems still apply.

In an earlier interview, Minsky reiterated his belief that the proper place of perceptrons is solving tiny problems with tiny perceptron networks.

… for certain purposes the Perceptron was actually very good. I realized that to make one all you needed in principle was a couple of molecules and a membrane. So after being irritated with Rosenblatt for overclaiming, and diverting all those people along a false path, I started to realize that for what you get out of it – the kind of recognition it can do – it is such a simple machine that it would be astonishing if nature did not make use of it somewhere. It may be that one of the best things a neuron can have is a tiny Perceptron, since you get so much from it for so little. You can’t get one big Perceptron to do very much, but for some things it remains one of the most elegant and simple learning devices I know of. (Bernstein 1981)

They also urged all AI researchers to adopt the Society of Mind hypothesis, or else face the charge of being unreflective or of drawing lines where none exists. It seems to me that Minsky wrote most of the prologue and epilogue, because in Papert’s solo paper, he went considerably further with sociological interpretation.

This broad division makes no sense to us, because these attributes are largely independent of one another; for example, the very same system could combine symbolic, analogical, serial, continuous, and localized aspects. Nor do many of those pairs imply clear opposites; at best they merely indicate some possible extremes among some wider range of possibilities. And although many good theories begin by making distinctions, we feel that in subjects as broad as these there is less to be gained from sharpening boundaries than from seeking useful intermediates.

… Are there inherent incompatibilities between those connectionist and symbolist views? The answer to that depends on the extent to which one regards each separate connectionist scheme as a self-standing system. If one were to ask whether any particular, homogeneous network could serve as a model for a brain, the answer (we claim) would be, clearly, No. But if we consider each such network as a possible model for a part of a brain, then those two overviews are complementary. This is why we see no reason to choose sides.

… Most researchers tried to bypass [the technical objections], either by ignoring them or by using brute force or by trying to discover powerful and generally applicable methods. Few researchers tried to use them as guides to thoughtful research. We do not believe that any completely general solution to them can exist …

We now proceed to the epilogue and its arguments.

1980s connectionism is not that different

They speculated on the reason for the revival of neural networks. Was it because of the development of backpropagation, multilayer networks, and faster computers? Emphatically not. In fact, 1980s connectionists were not different from the 1960s connectionists. It is only the ignorance of history that made them think otherwise. In both periods, connectionism was focused on making small-scale experiments and then extrapolating to the largest scale, without mathematical theorems to justify the extrapolation. In both periods, connectionism failed (or would fail) to scale beyond toy problems.

most of the theorems in this book are explicitly about machines with a single layer of adjustable connection weights. But this does not imply (as many modern connectionists assume) that our conclusions don’t apply to multilayered machines. To be sure, those proofs no longer apply unchanged, because their antecedent conditions have changed. But the phenomena they describe will often still persist. One must examine them, case by case.

… the situation in the early 1960s: Many people were impressed by the fact that initially unstructured networks composed of very simple devices could be made to perform many interesting tasks – by processes that could be seen as remarkably like some forms of learning. A different fact seemed to have impressed only a few people: While those networks did well on certain tasks and failed on certain other tasks, there was no theory to explain what made the difference – particularly when they seemed to work well on small (“toy”) problems but broke down with larger problems of the same kind. Our goal was to develop analytic tools to give us better ideas about what made the difference.

There is no silver bullet in machine learning

There are no general algorithms and there are no general problems. There are only particular algorithm-problem pairs. An algorithm-problem pair can be a good fit, or a bad fit. The parity problem is a bad fit with a neural network trained by backpropagation, but it is a good fit with a Turing machine.
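To make the "good fit" half of that pair concrete, here is a minimal sketch of parity as a single sequential scan with two states, the kind of computation a Turing machine (or any finite automaton) handles in one pass with constant memory. The function name `parity` is an illustrative choice, not something taken from Perceptrons.

```python
def parity(bits):
    """Return 1 if an odd number of bits are set, else 0.

    A two-state machine: the state flips on every 1 and is
    unchanged by every 0. Memory use does not grow with input size.
    """
    state = 0
    for b in bits:
        state ^= b  # flip the state on a 1, keep it on a 0
    return state


if __name__ == "__main__":
    print(parity([1, 0, 1, 1]))  # three bits are set, so the count is odd
```

The sequential scan needs no weights at all, which is the contrast the paragraph draws with a network that must look at every input bit at once.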

There is no general and effective algorithm. Either the algorithm is so general that it is as useless as “just try every algorithm” akin to Ross Ashby’s homeostat, or it is useful but not general. This general lesson is similar to Gödel’s speedup theorem, Blum’s speedup theorem, the no free lunch theorem, etc.

Clearly, the procedure can make but a finite number of errors before it hits upon a solution. It would be hard to justify the term “learning” for a machine that so relentlessly ignores its experience. The content of the perceptron convergence theorem must be that it yields a better learning procedure than this simple homeostat. Yet the problem of relative speeds of learning of perceptrons and other devices has been almost entirely neglected. (M. Minsky and Papert 1988, sec. 11.7)
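The contrast in this passage can be sketched in a few lines. The code below is a simplified reading, not a reconstruction of the book's setup: the "homeostat" throws its weights away and redraws them at random after every error, while the perceptron rule nudges the weights toward the misclassified example. The task (Boolean AND), the integer weight range, and all function names are assumptions made for illustration.

```python
import random

# Truth table for AND, a linearly separable predicate.
DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(w, x):
    """Threshold unit with weights w = (w1, w2, bias)."""
    return 1 if w[0] * x[0] + w[1] * x[1] + w[2] > 0 else 0


def first_error(w):
    """Return the first misclassified example, or None if w solves AND."""
    for x, y in DATA:
        if predict(w, x) != y:
            return x, y
    return None


def homeostat(rng, max_errors=100_000):
    """On every error, discard the weights and draw fresh ones at random."""
    w, errors = (0, 0, 0), 0
    while first_error(w) is not None and errors < max_errors:
        w = tuple(rng.randint(-2, 2) for _ in range(3))
        errors += 1
    return w, errors


def perceptron(max_errors=100_000):
    """On every error, move the weights toward the misclassified example."""
    w, errors = (0, 0, 0), 0
    while errors < max_errors:
        err = first_error(w)
        if err is None:
            break
        (x1, x2), y = err
        sign = 1 if y == 1 else -1  # add the input on a miss, subtract on a false alarm
        w = (w[0] + sign * x1, w[1] + sign * x2, w[2] + sign)
        errors += 1
    return w, errors


if __name__ == "__main__":
    print("homeostat :", homeostat(random.Random(0)))
    print("perceptron:", perceptron())
```

Both procedures stop after finitely many errors on this toy task. How the two error counts compare as the problem grows is the question the quoted passage says had been neglected, and a four-row truth table cannot settle it.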

Arthur Samuel’s checker learning algorithm encountered two fundamental problems: credit assignment and inventing novel features. Those two problems are not just for the checker AI, but for all AI. There are no universal and effective solutions to credit assignment, and there are no universally effective solutions to inventing novel features. There could be universal but impractical solutions, such as backpropagation on homogeneous neural networks, Solomonoff induction, trying every Turing machine, etc. There could be practical but not universal solutions, which is precisely what populates the Society of Mind in human brains.

Rosenblatt’s credit-assignment method turned out to be as effective as any such method could be. When the answer is obtained, in effect, by adding up the contributions of many processes that have no significant interactions among themselves, then the best one can do is reward them in proportion to how much each of them contributed.

Several kinds of evidence impel us toward this view. One is the great variety of different and specific functions embodied in the brain’s biology. Another is the similarly great variety of phenomena in the psychology of intelligence. And from a much more abstract viewpoint, we cannot help but be impressed with the practical limitations of each “general” scheme that has been proposed – and with the theoretical opacity of questions about how they behave when we try to scale their applications past the toy problems for which they were first conceived.

There is no efficient way to train homogeneous, high-order networks

They ask the reader to think back to the lesson of the parity predicate from Chapter 10: Even though it is learnable by a two-layered perceptron network, it would involve weights exponential in the input pixel count, and therefore take a very long time to learn. They expect this to generalize, so that any problem that requires some perceptron in the network to have a receptive field of size \(\Omega(|R|^\alpha)\) necessarily requires that perceptron to have coefficients growing like \(2^{\Omega(|R|^\alpha)}\), and therefore takes \(2^{\Omega(|R|^\alpha)}\) steps to train.
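One way to see where exponential weights can come from in the parity case is the identity \(\sum_{S \subseteq X} (-2)^{|S|} = (1-2)^{|X|} = (-1)^{|X|}\): if every subset \(S\) of pixels is used as a "mask" feature with coefficient \((-2)^{|S|}\), then the sign of the weighted sum gives the parity of the input \(X\). The sketch below checks this identity by brute force for small inputs. It illustrates one particular representation only; it does not show that every representation needs such weights, which is the part that requires the book's theorem. All function names are hypothetical.

```python
from itertools import combinations, product


def mask_sum(x):
    """Sum of (-2)**|S| over every mask S (subset of pixels) that x fully covers."""
    on = [i for i, bit in enumerate(x) if bit]
    total = 0
    for k in range(len(on) + 1):
        for _subset in combinations(on, k):
            total += (-2) ** k  # coefficient of a mask with k pixels
    return total


def check_parity_identity(n):
    """Check that the mask sum equals (-1)**(number of on pixels) for all 2**n inputs."""
    return all(mask_sum(x) == (-1) ** sum(x) for x in product((0, 1), repeat=n))


def largest_coefficient(n):
    """Magnitude of the coefficient on the one mask that covers all n pixels."""
    return 2 ** n


if __name__ == "__main__":
    print(check_parity_identity(6))
    print(largest_coefficient(20))
```

In this representation the largest coefficient doubles with every added pixel, which is the flavor of growth the paragraph above refers to.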

We could extend them either by scaling up small connectionist models or by combining small-scale networks into some larger organization. In the first case, we would expect to encounter theoretical obstacles to maintaining GD’s effectiveness on larger, deeper nets. And despite the reputed efficacy of other alleged remedies for the deficiencies of hill-climbing, such as “annealing,” we stay with our research conjecture that no such procedures will work very well on large-scale nets, except in the case of problems that turn out to be of low order in some appropriate sense.

The second alternative is to employ a variety of smaller networks rather than try to scale up a single one. And if we choose (as we do) to move in that direction, then our focus of concern as theoretical psychologists must turn toward the organizing of small nets into effective large systems.

There is no effective use for homogeneous, high-order networks

A fully connected network, or indeed any neural network without a strong constraint on “order” or “receptive field”, would hopelessly confuse itself with its own echoes as soon as it scales up, unless it has sufficient “insulation”, meaning almost-zero connection weights, such that it effectively splits into a large number of small subnets. That is, a large fully connected network is useless anyway unless it already decomposes into many tiny networks arranged in a Society of Mind.

Certain parallel computations are by their nature synergistic and cooperative: each part makes the others easier. But the And/Or of theorem 4.0 shows that under other circumstances, attempting to make the same network perform two simple tasks at the same time leads to a task that has a far greater order of difficulty. In those sorts of circumstances, there will be a clear advantage to having mechanisms, not to connect things together, but to keep such tasks apart. How can this be done in a connectionist net?

… a brain is not a single, uniformly structured network. Instead, each brain contains hundreds of different types of machines, interconnected in specific ways which predestine that brain to become a large, diverse society of partially specialized agencies.

Gradient descent cannot escape local minima

Gradient descent, backpropagation, and all other hill-climbing algorithms are all vulnerable to getting trapped in local optima, and therefore they cannot work – except in problem-architecture pairs where the loss landscape of this particular problem, for this particular architecture, using this particular loss function, is a single bump whose width is shorter than this particular learning rate.

Gradient descent is just a form of hill-climbing, when the hill is differentiable. The perceptron learning algorithm can be interpreted as a hill-climbing algorithm too, as it makes a localized decision to take one step in this direction or that, one error-signal at a time (Section 11.7). Therefore, the generic ineffectiveness of perceptron learning suggests that gradient descent is also generically ineffective and cannot scale. It does not even have a convergence theorem, so in that sense it’s worse than the perceptron learning algorithm.9

9 This claim is astonishing, now that we see how well backpropagation works, and how the perceptron learning rule had crippled neural network research for 30 years. We can understand their sentiments by remembering that they, like most of the academic community in computer science, favored the certainty of mathematical theorems over mere empirical success. Leo Breiman observed that academic statistics had been hamstrung by the same grasping for mathematical certainty, and thus over 95% of its publications were useless. (Breiman 1995)

We were very pleased to discover (see section 11.6) that PC could be represented as hill-climbing; however, that very fact led us to wonder whether such procedures could dependably be generalized, even to the limited class of multilayer machines that we named Gamba perceptrons. The situation seems not to have changed much – we have seen no contemporary connectionist publication that casts much new theoretical light on the situation. Then why has GD become so popular in recent years? … we fear that its reputation also stems from unfamiliarity with the manner in which hill-climbing methods deteriorate when confronted with larger-scale problems. … Indeed, GD can fail to find a solution when one exists, so in that narrow sense it could be considered less powerful than PC.
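To make the hill-climbing reading concrete, here is a minimal sketch of the classic perceptron learning rule on a single threshold unit. It is an illustration only, not code from Perceptrons: the names `predict`, `train_perceptron`, and `AND_DATA`, the zero initialization, and the epoch cap are all choices made for this example.

```python
def predict(w, b, x):
    """Single linear threshold unit: output 1 when the weighted sum is positive."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(data, max_epochs=100):
    """Perceptron learning rule: adjust the weights after each misclassified example.

    Returns (weights, bias, converged). `max_epochs` is an arbitrary cap
    chosen for this illustration.
    """
    n = len(data[0][0])
    w, b = [0] * n, 0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in data:
            error = y - predict(w, b, x)  # -1, 0, or +1: the local error signal
            if error != 0:
                # One local step: move the weights toward the correct side.
                w = [wi + error * xi for wi, xi in zip(w, x)]
                b += error
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors, so training is done
            return w, b, True
    return w, b, False


# AND is linearly separable, so a single unit can learn it.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b, converged = train_perceptron(AND_DATA)
print(w, b, converged)
```

On linearly separable data such as AND, the perceptron convergence theorem guarantees that this loop terminates. That guarantee is what Minsky and Papert found missing for gradient descent on multilayer networks.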

Stochastic gradient descent cannot see through the noise

So far as we could tell, every experiment described in (Rumelhart, Hinton, and Williams 1985) involved making a complete cycle through all possible input situations before making any change in weights. Whenever this is feasible, it completely eliminates sampling noise – and then even the most minute correlations can become reliably detectable, because the variance is zero. But no person or animal ever faces situations that are so simple and arranged in so orderly a manner as to provide such cycles of teaching examples. Moving from small to large problems will often demand this transition from exhaustive to statistical sampling, and we suspect that in many realistic situations the resulting sampling noise would mask the signal completely. We suspect that many who read the connectionist literature are not aware of this phenomenon, which dims some of the prospects of successfully applying certain learning procedures to large-scale problems.

Differentiable activation is just a hack

Using differentiable activations for neural networks is an artificial trick of questionable future. It makes the learned boolean functions imprecise, and only appears to redeem itself by allowing backpropagation. However, backpropagation is a dead-end because it will not scale. It is better to look for a method that can directly train multilayer perceptron networks with discrete activation functions.

The trick is to replace the threshold function for each unit with a monotonic and differentiable function … However, we suspect that this smoothing trick may entail a large (and avoidable) cost when the predicate to be learned is actually a composition of linear threshold functions. There ought to be a more efficient alternative based on how much each weight must be changed, for each stimulus, to make the local input sum cross the threshold.

We conjecture that learning XOR for larger numbers of variables will become increasingly intractable as we increase the numbers of input variables, because by its nature the underlying parity function is absolutely uncorrelated with any function of fewer variables. Therefore, there can exist no useful correlations among the outputs of the lower-order units involved in computing it, and that leads us to suspect that there is little to gain from following whatever paths are indicated by the artificial introduction of smoothing functions that cause partial derivatives to exist.
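The premise of this conjecture, that parity is uncorrelated with any function of fewer variables, can be checked by brute force for small cases. The sketch below is an illustration under two assumptions not stated in the quote: outputs are encoded as ±1, and correlation is averaged uniformly over all inputs. The function names are hypothetical.

```python
from itertools import product


def parity(bits):
    """Return +1 for an even number of ones and -1 for an odd number."""
    return -1 if sum(bits) % 2 else 1


def correlation_with_parity(n, subset, g):
    """Average of parity(x) * g(x restricted to `subset`) over all 2**n inputs."""
    total = 0
    for x in product((0, 1), repeat=n):
        total += parity(x) * g(tuple(x[i] for i in subset))
    return total / 2 ** n


def max_abs_correlation(n, subset):
    """Largest |correlation| over every +/-1-valued function of the chosen variables."""
    inputs = list(product((0, 1), repeat=len(subset)))
    best = 0.0
    # Enumerate every truth table on the subset (2**(2**k) functions for k variables).
    for outputs in product((-1, 1), repeat=len(inputs)):
        table = dict(zip(inputs, outputs))
        c = correlation_with_parity(n, subset, table.__getitem__)
        best = max(best, abs(c))
    return best


# Any function of only 2 of the 3 inputs carries no information about 3-bit parity.
print(max_abs_correlation(3, (0, 1)))
# A function of all 3 inputs can be parity itself.
print(max_abs_correlation(3, (0, 1, 2)))
```

The exhaustive search is only feasible for a handful of variables, so it illustrates the premise rather than the conjectured intractability of learning.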

Connectionists have no theory, so they should not extrapolate from experiments

In the past few years, many experiments have demonstrated that various new types of learning machines, composed of multiple layers of perceptron-like elements, can be made to solve many kinds of small-scale problems. Some of those experimenters believe that these performances can be economically extended to larger problems without encountering the limitations we have shown to apply to single layer perceptrons. Shortly, we shall take a closer look at some of those results and see that much of what we learned about simple perceptrons will still remain quite pertinent.

Without a mathematical theory, experimental data cannot be extrapolated. If neural networks happen to work well on a problem, it merely shows that the problem is a good fit for this particular architecture trained in this particular way at this particular scale, not anything more general than that.

As the field of connectionism becomes more mature, the quest for a general solution to all learning problems will evolve into an understanding of which types of learning processes are likely to work on which classes of problems. And this means that, past a certain point, we won’t be able to get by with vacuous generalities about hill-climbing. We will really need to know a great deal more about the nature of those surfaces for each specific realm of problems that we want to solve.

… the learning procedure required 1,208 cycles through each of the 64 possible examples – a total of 77,312 trials (enough to make us wonder if the time for this procedure to determine suitable coefficients increases exponentially with the size of the retina). PDP does not address this question. What happens when the retina has 100 elements? If such a network required on the order of \(2^{200}\) trials to learn, most observers would lose interest.
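The numbers in this passage are easy to check. The short calculation below reproduces the trial count and gives a sense of scale for the hypothetical \(2^{200}\) figure. The rate of one trial per nanosecond is an assumption made purely for illustration, and the variable names are hypothetical.

```python
cycles = 1_208
examples = 64                 # all possible inputs, as stated in the quote
trials = cycles * examples    # the quoted total

hypothetical_trials = 2 ** 200      # the "what if" figure for a 100-element retina
trials_per_second = 1e9             # assumption: one trial per nanosecond
seconds_per_year = 365.25 * 24 * 3600
years = hypothetical_trials / trials_per_second / seconds_per_year

print(trials)
print(f"{years:.2e} years at one trial per nanosecond")
```

Note that the quote poses \(2^{200}\) as a conditional, not as a measured or derived growth rate.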

Connectionist experiments can be extrapolated to show that they do not scale

Though lacking a theory of their own on the operation of multilayer perceptrons, Minsky and Papert proceeded to interpret the connectionist experimental data as showing that neural networks would fail to scale.10

10 Without a mathematical theory of what neural networks can do, extrapolating from their behavior at small scales to the large scale is impossible and only reflects the bias of those who make the extrapolation.

Connectionists demonstrated that two-layered perceptrons, where both layers were trainable, bypassed the limits described in Perceptrons. For example, (Rumelhart, Hinton, and Williams 1985) showed that several problems unsolvable by a single perceptron – XOR, parity, symmetry, etc – were solved by a two-layered neural network.
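For concreteness, here is a two-layer threshold network that computes XOR. It is a sketch with hand-picked weights, not a network learned by backpropagation and not one taken from Rumelhart, Hinton, and Williams. The names and the coarse weight grid used for the single-unit search are assumptions for this illustration.

```python
from itertools import product


def step(z):
    """Linear threshold unit: fire (1) when the weighted sum exceeds 0."""
    return 1 if z > 0 else 0


def xor_two_layer(x1, x2):
    """Two layers of threshold units with hand-chosen weights."""
    h_or = step(x1 + x2 - 0.5)    # hidden unit: at least one input is on
    h_and = step(x1 + x2 - 1.5)   # hidden unit: both inputs are on
    return step(h_or - h_and - 0.5)  # output unit: OR but not AND


# The two-layer network reproduces the XOR truth table.
print([xor_two_layer(a, b) for a, b in product((0, 1), repeat=2)])

# By contrast, no single threshold unit on this coarse weight grid computes XOR.
grid = [i / 2 for i in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
single_unit_found = any(
    all(step(w1 * x1 + w2 * x2 + b) == (x1 ^ x2)
        for x1, x2 in product((0, 1), repeat=2))
    for w1, w2, b in product(grid, repeat=3)
)
print(single_unit_found)
```

The grid search is only a spot check. The general fact that no single threshold unit computes XOR follows from XOR not being linearly separable.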

While the connectionist authors saw the result as a hopeful sign, Minsky and Papert interpreted it as showing that the experiments wouldn’t scale, because the coefficients appeared to grow exponentially – in just the way they proved in Chapter 7.

In PDP it is recognized that the lower-level coefficients appear to be growing exponentially, yet no alarm is expressed about this. In fact, anyone who reads section 7.3 should recognize such a network as employing precisely the type of computational structure that we called stratification.

although certain problems can easily be solved by perceptrons on small scales, the computational costs become prohibitive when the problem is scaled up. The authors of PDP seem not to recognize that the coefficients of this symmetry machine confirm that thesis, and celebrate this performance on a toy problem as a success rather than asking whether it could become a profoundly “bad” form of behavior when scaled up to problems of larger size.

Papert struck back

While it appears that Minsky was the main author for the new prologue and epilogue, Papert solo-authored (S. Papert 1988), an essay that gave the controversy a uniquely Papert-styled spin. It is an extensive reframing of the perceptron controversy into a social and philosophical issue, with the prediction of ultimate victory for epistemological pluralism:

The field of artificial intelligence is currently divided into what seem to be several competing paradigms … for mechanisms with a universal application. I do not foresee the future in terms of an ultimate victory for any of the present contenders. What I do foresee is a change of frame, away from the search for universal mechanisms. I believe that we have much more to learn from studying the differences, rather than the sameness, of kinds of knowing.

He diagnosed the source of the philosophical error as a “category error”.

There is the same mistake on both sides: the category error of supposing that the existence of a common mechanism provides both an explanation and a unification of all systems, however complex, in which this mechanism might play a central role.

Artificial intelligence, like any other scientific enterprise, had built a scientific culture… more than half of our book is devoted to “pro-perceptron” findings about some very surprising and hitherto unknown things that perceptrons can do. But in a culture set up for global judgment of mechanisms, being understood can be a fate as bad as death. A real understanding of what a mechanism can do carries too much implication about what it cannot do… The same trait of universalism leads the new generation of connectionists to assess their own microlevel experiments, such as Exor, as a projective screen for looking at the largest macroissues in the philosophy of mind. The category error analogous to seeking explanations of the tiger’s stripes in the structure of DNA is not an isolated error. It is solidly rooted in AI’s culture.

He then discussed the compute-first interpretation, a “bitter lesson” for the 1980s, before rejecting it.

In the olden days of Minsky and Papert, neural networking models were hopelessly limited by the puniness of the computers available at the time and by the lack of ideas about how to make any but the simplest networks learn. Now things have changed. Powerful, massively parallel computers can implement very large nets, and new learning algorithms can make them learn. …

I don’t believe it. The influential recent demonstrations of new networks all run on small computers and could have been done in 1970 with ease. Exor is a “toy problem” run for study and demonstration, but the examples discussed in the literature are still very small. Indeed, Minsky and I, in a more technical discussion of this history (added as a new prologue and epilogue to a reissue of Perceptrons), suggest that the entire structure of recent connectionist theories might be built on quicksand: it is all based on toy-sized problems with no theoretical analysis to show that performance will be maintained when the models are scaled up to realistic size. The connectionist authors fail to read our work as a warning that networks, like “brute force” programs based on search procedures, scale very badly.

Consider Exor, a certain neural network he picked out of the pages of PDP, which learned to perform the infamous XOR task, but only after 2,232 examples. Was it slow, or fast? A proper judgment requires a mathematical understanding of the algorithm-problem fit. By extension, to properly judge whether neural networks were good for any specific problem, one must first mathematically understand the fit. He insinuated that the connectionists who were confident that their neural networks were more than a sterile extension of the perceptron had not done their math, unlike him and Minsky.

instead of asking whether nets are good, we asked what they are good for. The focus of enquiry shifted from generalities about kinds of machines to specifics about kinds of tasks. From this point of view, Exor raises such questions as: Which tasks would be learned faster and which would be learned even more slowly by this machine? Can we make a theory of tasks that will explain why 2,232 repetitions were needed in this particular act of learning?

… Minsky and I both knew perceptrons extremely well. We had worked on them for many years before our joint project of understanding their limits was conceived… I was left with a deep respect for the extraordinary difficulty of being sure of what a computational system can or cannot do. I wonder at people who seem so secure in their intuitive convictions, or their less-than-rigorous rhetorical arguments, about computers, neural nets, or human minds.

Interjection

I wonder at people who seem so secure in their intuitive convictions, or their less-than-rigorous rhetorical arguments, about computers, neural nets, or human minds:

There is no reason to suppose that any of these virtues carry over to the many-layered version. Nevertheless, we consider it to be an important research problem to elucidate (or reject) our intuitive judgment that the extension is sterile. Perhaps some powerful convergence theorem will be discovered, or some profound reason for the failure to produce an interesting “learning theorem” for the multilayered machine will be found. (M. Minsky and Papert 1988, 232)

What, then, explains the rise of connectionism? Since Papert reframed the fall of the perceptron socially, it only stands to reason that he would reframe the rise of connectionism as the rise of a social myth caused by other social myths, not by the increase in computing power or new algorithms like backpropagation, convolutional networks, and such. For one, the computing power used by breakthrough connectionist models like NETtalk was already within reach even in the 1960s.11 For another, he and Minsky were firm in their conviction that any uniform architecture must scale very badly and that no amount of computing or algorithmic advancement could be anything more than a sterile extension.

11 NETtalk, a neural network with 3 layers and 18,629 weights, was entirely within reach in the 1960s. Its dataset was built by hand in weeks, and its training took a single night on a Ridge computer comparable to a VAX 11/780. A VAX 11/780 delivers \(\sim 1 \;\rm{MFLOP/sec}\), so NETtalk took \(\sim 10^{11}\;\rm{FLOP}\) to train. During the 1960s, typical workstations had a computing power of \(\sim 0.11 \;\rm{MIPS}\), so NETtalk could have been trained in a month.

We then used the 20,000-word Brown Corpus and assigned phonemes, as well as stress marks, to each of letters. The alignment of the letters and sounds took weeks, but, once the learning started, the network absorbed the whole corpus in a single night. (Sejnowski 2018, 115)
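The order-of-magnitude estimate in footnote 11 can be reproduced in a few lines. Two inputs below are assumptions rather than figures from the sources: that "a single night" means about 12 hours, and that a 1960s machine performs roughly one floating-point operation per instruction. The variable names are hypothetical.

```python
SECONDS_PER_DAY = 24 * 3600

vax_flops = 1e6             # footnote's figure: ~1 MFLOP/sec for a VAX 11/780
night_hours = 12            # assumption: "a single night" is about 12 hours
flop_from_one_night = vax_flops * night_hours * 3600  # same order as the footnote's ~1e11

nettalk_flop = 1e11         # footnote's rounded training cost
machine_1960s_ips = 0.11e6  # footnote's figure: ~0.11 MIPS
flop_per_instruction = 1.0  # assumption: one floating-point operation per instruction

seconds = nettalk_flop / (machine_1960s_ips * flop_per_instruction)
days = seconds / SECONDS_PER_DAY

print(f"{flop_from_one_night:.2e} FLOP in one night")
print(f"{days:.1f} days on the 1960s machine")
```

Under these assumptions the training run fits within the footnote's one-month bound. A less generous ratio of floating-point operations to instructions would lengthen it proportionally.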