Introduction

It is to the economist, the statistician, the philosopher, and to the general reader that I commend the analysis contained herein… mathematics as applied to classical thermodynamics is beautiful: if you can’t see that, you were born color-blind and are to be pitied.

Paul Samuelson, foreword to (Bickler and Samuelson 1974)

What this essay contains

Unlike my Analytical Mechanics essay, this essay does not cover much of the width of a university course on classical thermodynamics or neoclassical economics. Instead, it’s best described as “the conceptual foundations that a typical course does not treat well”, a deep but narrow essay to supplement a typical textbook that is shallow but wide.

Unlike analytical mechanics, which is typically taught to students intent on reaching the abstract plane of modern theoretical physics, and is thus deep but narrow, a typical course on classical thermodynamics is shallow, but very wide, and taught to a wide student base – theoretical physicists, thermal engineers, electrical engineers, chemists, biologists… When something must be taught to a wide audience, and is both deep and wide, depth is sacrificed. This is perfectly practicable, but it leaves a small minority confused with a sinister feeling that the teachers have abused their trust in them. I am in that minority.

The essay contains: three laws of thermodynamics, entropy, Helmholtz and Gibbs free energy, nonextensive entropy, Caratheodory’s axiomatic thermodynamics, Vladimir Arnold’s contact-geometric thermodynamics, Paul Samuelson’s area-ratio thermodynamics, Le Chatelier’s principle, chemical equilibrium, Gibbs phase rule and its extensions, analogies with neoclassical economics, speculative sci-fi.

It does not contain: statistical mechanics, most of the “width” part of thermodynamics and economics.

The prerequisites are multivariate calculus and mathematical maturity. It’s good to be familiar with basic economics as well.

Quick reference

- \(S\): entropy

- \(U\): internal energy

- \(V\): volume

- \(T\): temperature

- \(\beta = 1/T\): inverse temperature

- \(N\): number of particles of a chemical species

- \(n\): number of moles of a chemical species

- \(\xi\): extent of reaction

- \(X\): “other properties that we are not concerned with”

- For example, with an ideal gas trapped in a copper box, its macroscopic state is determined by \(U, N, V\). If we want to focus on \(U\), then we can let \(X = (N, V)\), and write \(S = S(U, X)\).

- Similarly, for a photon gas in a blackbody chamber, its macroscopic state is determined by \(U, V\), since photons can be created and destroyed on the inner surface of the blackbody chamber. We can then write \(S = S(U, X)\) if we are concerned only about how entropy changes with \(U\), holding the other state variables constant. Conversely, we can write \(S = S(V, X)\) if we are concerned only about how entropy changes with \(V\).

Thermodynamics is notorious for having too many partial differentials and coordinate changes. \((\partial_{x_1} f)_{x_1, x_2, \dots, x_n}\) means that we lay down a coordinate system defined by \(x_1, \dots, x_n\), then calculate \(\partial_{x_1}f\), holding the other coordinates constant. In particular, \((\partial_{x_1} f)_{x_1, x_2, \dots, x_n}\) is in general different from \((\partial_{x_1} f)_{x_1, y_2, \dots, y_n}\).

If in doubt, write down

\[df = \sum_{i=1}^n (\partial_{x_i} f)_{x_1, \dots, x_n} dx_i\]

and reason thenceforth.

Sometimes people write \((\partial_{x_1} f)_{x_2, \dots, x_n}\) instead of \((\partial_{x_1} f)_{x_1, x_2, \dots, x_n}\) to save themselves one stroke of the pen. I try to avoid that, but be aware and beware.

Further readings

- (Pippard 1964). Slim, elegant, both mathematical and applied. In the best British tradition of mathematics – think James Maxwell and G. H. Hardy.

- (Fermi 1956). The same as above. However, it also covers chemical thermodynamics.

- (Lemons 2019). A very readable introduction to classical thermodynamics, slim but deep. I finally understood the meaning of the three laws of thermodynamics after reading it. Contains copious historical quotations.

- (Lemons 2008). A textbook version of the author’s (Lemons 2019), weaving in history and philosophical contemplation at every turn.

- (Carnot, Clapeyron, and Clausius 1988). A reprint of the most important papers in thermodynamics published before 1900. Useful to have on hand if you are reading (Lemons 2019).

- (Buchdahl 1966). A textbook based on Carathéodory’s axiomatic thermodynamics. The notation is ponderous, and the payoff is unclear. I don’t know what its intended audience is – perhaps professional pedants and differential geometers? Nevertheless, if you need to do research in Carathéodory’s axiomatic thermodynamics, I think this is your best bet.

- Ted Chiang’s Exhalation (2008), printed in (Chiang 2019). A sci-fi story about an alien race where pneumatic engines, not heat engines, are all-important. A better take on stereodynamics than my attempt.

What is thermodynamics?

Both neoclassical economics and classical thermodynamics are about the equilibria of large systems. While a large system is generally hopelessly complicated, almost all the complexity falls away when the system is maximizing a single quantity. Ceaselessly striving to maximize entropy, a complex system sheds its complexity and reaches the pure simplicity of maximal entropy. Ceaselessly striving to maximize profit, a complex company sheds its complexity and reaches the pure simplicity of perfect product.

In both fields, everything we can say about the world is nothing more than systems, constraints, contacts, and equilibria. Time and change do not exist. All we can explain is which states are in constrained equilibrium, not how a system can get there. Atoms do not exist. All we can explain is what happens to homogeneous substances in constrained equilibrium. People do not exist. All we can explain is what happens to constrained economic systems in equilibrium.

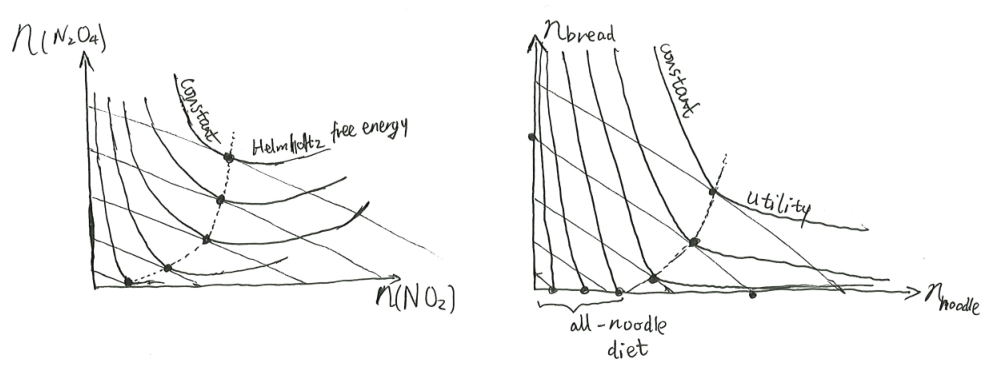

Different things can be maximized: the total entropy, or the negative Gibbs free energy, or the profit, or the sum-total of utility, or something else. Through different lenses, different things are maximized, but they predict the same phenomena. Using this mathematical freedom, experts brachiate around the coordinate axes like gibbons brachiating around vines, looking for the perfect angle to solve each particular problem.

| interpretation | maximized quantity | constraint |
|---|---|---|
| conglomerate accounting | book value | Assets are conserved, but can be moved between child companies. |
| social welfare | social utility | Wealth is conserved, but can be redistributed. |
| closed system | entropy | Quantities are conserved, but can be moved between sub-systems. |
| factory production | profit | Some raw materials are on sale at a market, but others are not. |
| consumer choice | utility | Some finished goods are on sale at a market, but others are not. The market uses a commodity money. |
| partially open system | negative free energy | Some quantities can be exchanged with a bath, but others are conserved. |

Even if the capitalist system is to give way to one in which service and not profit shall be the object, there will still be an integral of anticipated utilities to be made a maximum. Since we must find a function which maximizes an integral we must in many cases use the Calculus of Variations. But the problem here transcends the questions of depreciation and useful life, and belongs to the dawning economic theory based on considerations of maximum and minimum which bears to the older theories the relations which the Hamiltonian dynamics and the thermodynamics of entropy bear to their predecessors.

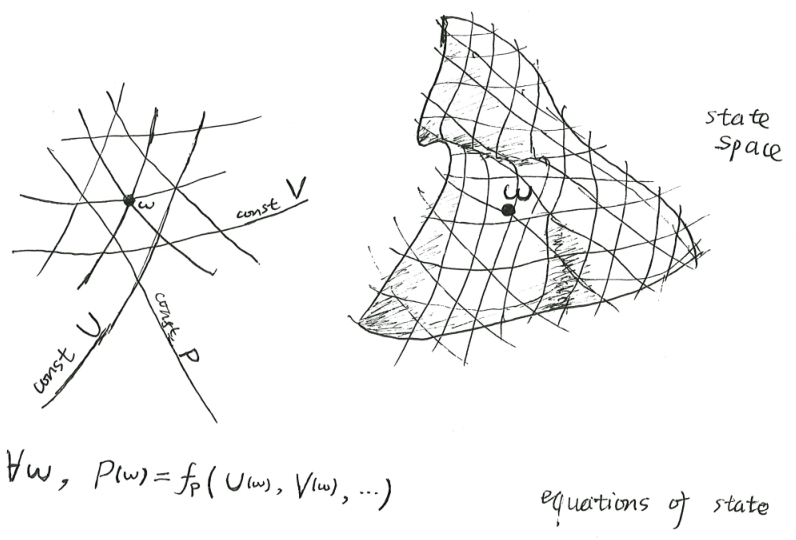

Systems

Systems are the main characters of the drama of thermodynamics. A thermodynamic system is fully determined by a few macroscopic properties, related by equations of state. Once we know enough of its properties, we know all there is to know about such a system. There is nothing left to say about it.

Everything is a thermodynamic system. However, there are two special types: bath systems, and mechanical systems.[1] Any number of thermodynamic systems can be connected into a larger system – a compound system.

[1] Some children are confused when they hear that squares are rectangles too. I hope you won’t be equally confused when you hear that mechanical systems are also thermodynamic systems.

The prototypical thermodynamic system is a tank of ideal gas whose number of particles is fixed. It has 2 degrees of freedom, so if we write down \(n\) different macroscopic properties, they would (generically) be related by \(n-2\) equations of state. So for example, if we write down the properties internal energy \(U\), temperature \(T\), volume \(V\), pressure \(P\), they would be related by the 2 equations of state

\[PV = k_BNT, \quad U = \frac 32 k_B NT\]

If we know two out of the four of internal energy \(U\), temperature \(T\), volume \(V\), pressure \(P\), then we can solve for all the others by equations of state. The macroscopic properties fully describe the system, and nothing more can be said about it. We cannot ask additional questions such as “Are there more particles on the left than on the right?” or “How long did it take for the system to reach equilibrium?”, because such questions are literally undefined in classical thermodynamics.

Because the properties are related by equations of state, we need only know a few of the properties in order to infer all the rest. For example, knowing the volume \(V\), internal energy \(U\), and particle number \(N\) of a tank of ideal gas, we can infer that its pressure is \(P = \frac{2U}{3V}\), and its temperature is \(T = \frac{PV}{k_B N}\). Succinctly,

\[P = P(U, V, N), \quad T = T(U, V, N)\]

meaning “If we know \(U, V, N\), then we can calculate \(P\) and \(T\)”.

It’s too easy to misread it as saying that \(P\) is a mathematical function of \(U, V, N\). It is not. It is really saying that there exists a mathematical function \(f_P\), such that for any state \(\omega\) of the system, we have

\[P(\omega) = f_P(U(\omega), V(\omega), N(\omega))\]
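The distinction between the property \(P\) and the function \(f_P\) can be made concrete in a few lines. The following is only an illustrative sketch: the class `State` and the choice to store a state in \((U, V, N)\) coordinates are assumptions made for this example, and the gas is assumed monatomic so that \(P = \frac{2U}{3V}\).

```python
from dataclasses import dataclass

K_B = 1.380649e-23  # Boltzmann constant, J/K


@dataclass(frozen=True)
class State:
    """A point omega of the state space, stored here in (U, V, N) coordinates."""
    U: float
    V: float
    N: float


# Macroscopic properties are functions on the state space.
def U(omega):
    return omega.U


def V(omega):
    return omega.V


def N(omega):
    return omega.N


def f_P(u, v, n):
    """An ordinary function on R^3 (monatomic ideal gas): 2u / (3v)."""
    return 2.0 * u / (3.0 * v)


def P(omega):
    """The property P, defined through P(omega) = f_P(U(omega), V(omega), N(omega))."""
    return f_P(U(omega), V(omega), N(omega))


# A hypothetical state: 1e23 particles in one litre at 300 K.
omega = State(U=1.5 * K_B * 1.0e23 * 300.0, V=1.0e-3, N=1.0e23)
print(P(omega))
```

Here `P` takes a state, while `f_P` takes three numbers. Choosing different coordinates would change `f_P` but not `P`.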

Everything about a thermodynamic system is known once we specify how its entropy is a function of its macroscopic properties. For example, the ideal gas is fully specified by

\[S(U, V, N) = k_B N \ln\left[\frac{V}{N}\,\left(\frac{U}{\hat{c}_V k_B N}\right)^{\hat{c}_V}\,\frac{1}{\Phi}\right]\]

where \(\hat c_V\) and \(\Phi\) are constants that differ for each gas.
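As a rough numerical check that this entropy function really contains the two equations of state, one can differentiate it by finite differences. This sketch relies on the standard relations \((\partial_U S)_{U, V, N} = 1/T\) and \((\partial_V S)_{U, V, N} = P/T\), which this section has not stated, and it assumes \(\hat c_V = 3/2\) and a placeholder value \(\Phi = 1\), which drops out of the derivatives.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K
C_V = 1.5           # \hat c_V for a monatomic gas (assumed)
PHI = 1.0           # placeholder for the gas-specific constant; cancels in derivatives


def entropy(U, V, N):
    """Ideal-gas entropy S(U, V, N) as given in the text."""
    return K_B * N * math.log((V / N) * (U / (C_V * K_B * N)) ** C_V / PHI)


def central_difference(f, x, h):
    """Central finite difference of f at x with step h."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


# A hypothetical state: 1e23 particles in one litre, energy matching 300 K.
N, V, T0 = 1.0e23, 1.0e-3, 300.0
U = C_V * K_B * N * T0

inv_T = central_difference(lambda u: entropy(u, V, N), U, U * 1e-6)     # 1/T
P_over_T = central_difference(lambda v: entropy(U, v, N), V, V * 1e-6)  # P/T

T = 1.0 / inv_T
P = P_over_T * T
print(T, P * V / (K_B * N * T))
```

Up to finite-difference error, `T` should come back as the temperature used to set `U`, and the printed ratio \(PV / (k_B N T)\) should be close to 1.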

As another example, the photon gas is defined by

\[S(U, V) = C V^{1/4}U^{3/4}, \quad C=\left(\frac{256\pi^2 k_B^4}{1215 c^3 \hbar^3}\right)^{1/4}\]

The fact that \(S(U, V) \propto V^{1/4}U^{3/4}\) can be derived from 19th-century physics (indeed, it was known to Boltzmann), but the constant \(C\) had to wait for proper quantum mechanics.
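As a worked check, the familiar equations of state of the photon gas can be read off from this entropy function. The sketch assumes the standard relations \((\partial_U S)_{U, V} = 1/T\) and \((\partial_V S)_{U, V} = P/T\), which are not stated in this section:

\[\frac 1T = (\partial_U S)_{U, V} = \frac 34 C V^{1/4} U^{-1/4} \implies U = \left(\frac{3C}{4}\right)^4 V T^4 = \frac{\pi^2 k_B^4}{15 c^3 \hbar^3} V T^4\]

\[\frac PT = (\partial_V S)_{U, V} = \frac 14 C V^{-3/4} U^{3/4} \implies P = \frac 14 C V^{-3/4} U^{3/4} \cdot \frac{4}{3C} V^{-1/4} U^{1/4} = \frac{U}{3V}\]

The first line is the \(T^4\) law for the energy of blackbody radiation, and the second is the radiation pressure. The constant \(C\) cancels out of the second line, which is one way to see why \(P = U/3V\) did not need quantum mechanics.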

Baths

A bath system is an infinite source of a conserved quantity at constant marginal entropy. A bath system is intended to be used as a “free market” with which non-bath systems can trade some conserved quantities.

For example, if we take a copper piston, fill it with ideal gas, and immerse it at the bottom of an ocean, then the ocean plays the role of a volume-and-energy bath with constant pressure-and-temperature. If we cover up the piston with some insulating material, then the ocean suddenly plays the role of merely a volume bath with constant pressure. If we use screws to fix the piston head, then the ocean suddenly becomes merely an energy bath with constant temperature. From this, we see that a bath in itself is a rather vacant concept. A bath should always be in contact with some non-bath system.
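A minimal sketch of why a bath behaves like a market with a fixed price, under one assumption that goes slightly beyond the text: “constant marginal entropy” is read as saying that the bath’s entropy is linear in the conserved quantity. Take an energy bath with constant inverse temperature \(\beta\), in contact with a non-bath system of entropy \(S(U, X)\). The symbols \(S_{\text{bath}}\), \(U_{\text{bath}}\), and \(U_{\text{total}}\) are introduced only for this illustration:

\[S_{\text{bath}}(U_{\text{bath}}) = S_{\text{bath}, 0} + \beta U_{\text{bath}}, \quad U + U_{\text{bath}} = U_{\text{total}}\]

\[S_{\text{total}} = S(U, X) + \beta (U_{\text{total}} - U) + S_{\text{bath}, 0}\]

\[(\partial_U S_{\text{total}})_{U, X} = 0 \iff (\partial_U S)_{U, X} = \beta\]

Maximizing the total entropy is therefore the same as maximizing \(S - \beta U\) for the non-bath system alone. The bath enters only through its “price” \(\beta\). With \(\beta = 1/T > 0\), this is the same as minimizing \(U - TS\), which matches the “negative free energy” row of the table of interpretations.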

Because they are infinitely large, if you connect two baths together, something bad will happen. For example, if you connect two energy baths together at different temperatures, what would happen? The simple answer is: “A torrent of heat will flow from the hotter to the colder bath.”. The more correct answer is: “Classical thermodynamics does not allow such a question to be asked. It would be like asking what happens when ‘an unstoppable force meets an immovable object’. If we literally have two baths, then we cannot connect them. If we only have two giant oceans that seem like baths when compared to this little tank of gas, then if the two oceans are connected to each other, they will no longer appear as baths to each other.”.

You can take this in two ways. Either will work. You can say that both systems and baths are first-class concepts in thermodynamics, and enforce the rule that you can never connect two baths together. You can also say that systems are first-class concepts in thermodynamics, but baths are second-class concepts, a convenient way to think about a system that is much larger relative to some other systems. Since a bath is a relative concept, it simply is a bad question to ask “What happens if we connect two baths together?” – The correct reply is “You mean, two systems that appear as baths… relative to what?”.

Some important (and unimportant) examples of baths:

- A heat bath, or more accurately an energy bath, is a system from which you can take, or into which you can dump, as much energy as you want, always at a constant marginal price of energy.

- An atmosphere, or more accurately an energy-and-volume bath, is a system from which you can take, or into which you can dump, as much energy or volume as you want, always at constant temperature and pressure.

- The surface of a lake could serve as an energy-and-area bath.

- A large block of salt can serve as a salt-chemical bath.

Mechanical systems

A mechanical system is a thermodynamic system whose entropy is always zero. Essentially all systems studied in classical mechanics are such systems. In classical thermodynamics, they are not put center-stage, but if you know where to look, you will see them everywhere.

An ideal linear-spring has two macroscopic properties: length \(x\) and internal energy \(U\), with equation of state \(U = \frac 12kx^2\). For example, a helical spring is close to an ideal linear-spring.

An ideal surface-spring is the same as an ideal linear-spring, but with area \(A\) instead of length \(x\). Its equation of state is \(U = \sigma A\), where \(\sigma\) is the surface tension constant. For example, a balloon skin is close to an ideal surface-spring.

An ideal volume-spring is similar. It would resemble a lump of jelly. An ideal gas, though it looks like a volume-spring, is not an example, because its entropy is not zero. In particular, this means an ideal gas has temperature and can be “heated up”, but a lump of ideal jelly cannot.

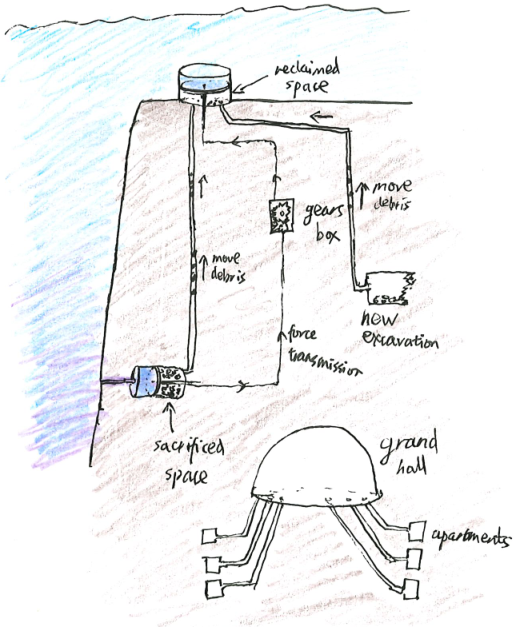

In general, we can construct an arbitrary energy storage system, such that it has two macroscopic properties \(x, U\), satisfying \(U = f(x)\), where \(f\) is any differentiable function. To show this, we can imagine taking a mystery box with a chain we can pull on, and by some internal construction with gears, pulleys, weights, and springs, the force on the chain is \(f'(x)\), where \(x\) is the length by which we have pulled. That is the desired system.

State space

A tank of gas has on the order of \(10^{26}\) particles, but in classical thermodynamics, its state is entirely determined if we know its \(U, V, N\). In this sense, we can say that its macroscopic state space has just 3 dimensions. In many situations, such as in the Carnot heat engine, we also fix its \(N\), in which case its state space has just 2 dimensions.

This is typically plotted in either the \((P, V)\) space, or the \((T, S)\) space, or some other spaces, but in every case, there are just two dimensions. We can unify all these diagrams as merely different viewpoints upon the same curvy surface – the state space \(\mathcal X\) itself. Each point \(\omega \in \mathcal X\) in the state space is a state, and each macroscopic property \(X\) is a scalar function of type \(X : \mathcal X \to \mathbb{R}\).

If the state space has just \(d\) dimensions, then we need only \(d\) macroscopic properties \(X_1, \dots, X_d\) in order to lay down a coordinate system for the state space. If we have another macroscopic property \(Y\), then there in general exists a function \(f_Y: \mathbb{R}^d \to \mathbb{R}\), such that \(Y(\omega) = f_Y(X_1(\omega), \dots, X_d(\omega))\) for any state \(\omega\). In other words, we have an equation of state.

Calculus on state space

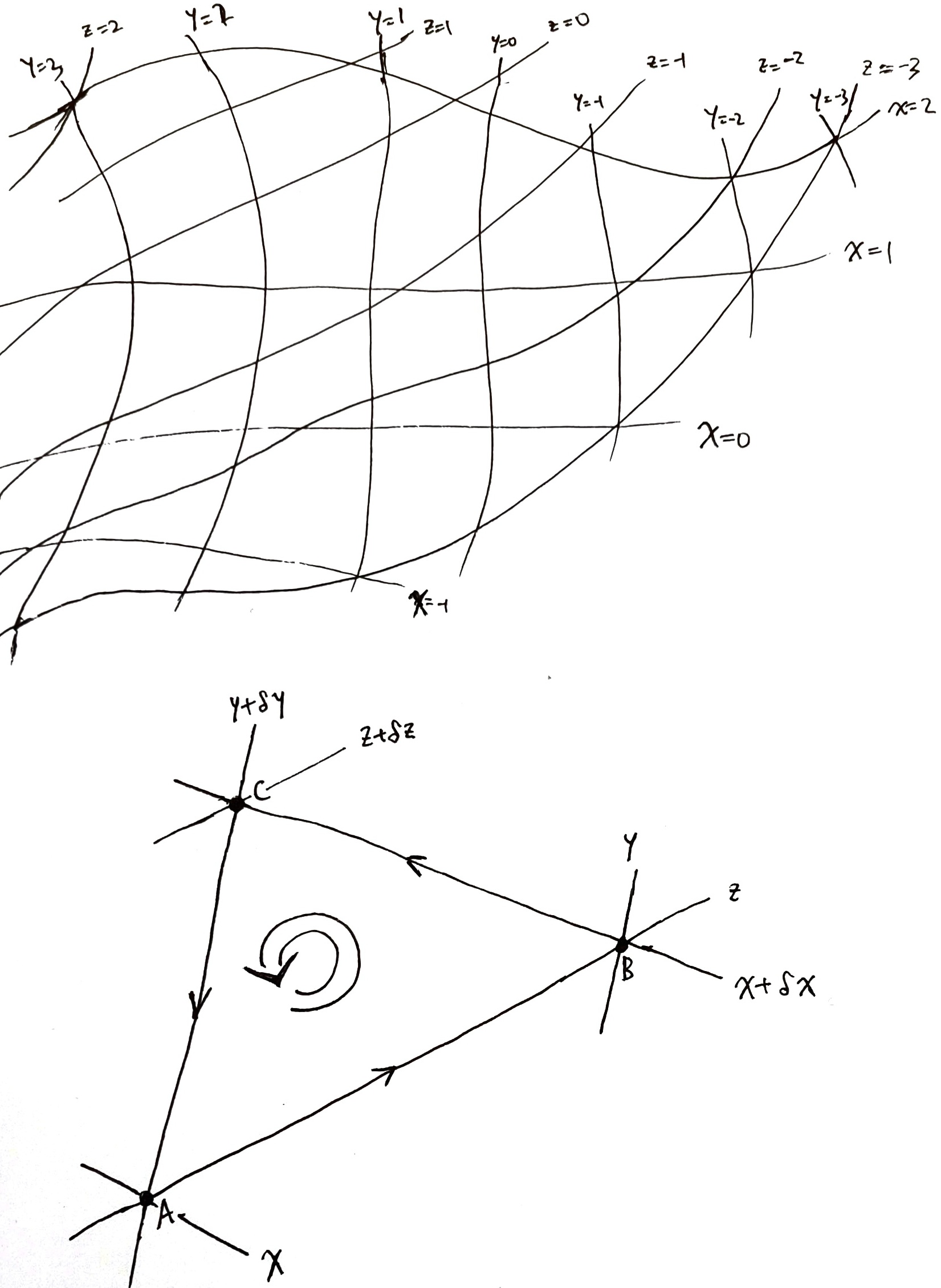

In thermodynamics, we typically have many more macroscopic properties than dimensions. For example, the state space of an ideal gas has only 3 dimensions, but has many macroscopic properties: \(S, U, V, N, T, P, \mu, \dots\). In this case, every time we take a derivative on the state space, we need to pick exactly 3 properties, since picking different coordinates leads to different partial differentials.

As an illustrative example, let \(x, y, z\) be smooth scalar functions on a smooth 2D surface, such that the contour lines of any two of them are linearly independent at every point on the surface. Then, picking any 2 out of \(x, y, z\) would give us a coordinate system. Any other smooth function \(f\) on the surface can be expressed in 3 different ways, as

\[f = f_{x, y}(x, y) = f_{y, z}(y, z) = f_{z, x}(z, x)\]

This gives us two different ways to “differentiate \(f\) with respect to \(x\)”:

\[ \left(\frac{\partial f}{\partial x}\right)_y := \partial_1 f_{x, y}, \quad \left(\frac{\partial f}{\partial x}\right)_z := \partial_2 f_{z, x} \]

where \(\partial_1\) means “partial differentiation with respect to the first input”, etc.

Let \(x, y\) be the usual coordinates on the 2D plane \(\mathbb{R}^2\), and let \(z = x + y, w = x - y\). We can pick any 2 of \(x, y, z, w\) to construct a coordinate system. Then,

\[ \left(\frac{\partial y}{\partial x}\right)_y = 0 \quad \left(\frac{\partial y}{\partial x}\right)_z = -1 \quad \left(\frac{\partial y}{\partial x}\right)_w = 1 \]
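These three values can be checked numerically by writing \(y\) in each coordinate system and differentiating with respect to the first input. This is only a sketch: the helper `partial` and the test point are choices made for this example.

```python
def partial(f, point, index, h=1e-6):
    """Central difference of f with respect to point[index], other inputs held fixed."""
    up = list(point)
    down = list(point)
    up[index] += h
    down[index] -= h
    return (f(*up) - f(*down)) / (2.0 * h)


# The same property y, written in three coordinate systems that all contain x.
def y_in_xy(x, y):
    return y          # coordinates (x, y)


def y_in_xz(x, z):
    return z - x      # coordinates (x, z), since z = x + y


def y_in_xw(x, w):
    return x - w      # coordinates (x, w), since w = x - y


x0, y0 = 0.7, -1.3                 # an arbitrary point on the plane
z0, w0 = x0 + y0, x0 - y0

dy_dx_fixed_y = partial(y_in_xy, (x0, y0), 0)
dy_dx_fixed_z = partial(y_in_xz, (x0, z0), 0)
dy_dx_fixed_w = partial(y_in_xw, (x0, w0), 0)
print(dy_dx_fixed_y, dy_dx_fixed_z, dy_dx_fixed_w)
```

The point \((x_0, y_0)\) is the same in all three calls. Only the quantity held fixed changes, and with it the derivative.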

In general, if we pick \(x_1, \dots, x_d, y\) from a list of many smooth scalar functions on a surface, such that \(x_1, \dots, x_d\) form a smooth and linearly independent coordinate system on the surface, then we can express \(y = f(x_1, \dots, x_d)\) for some function \(f : \mathbb{R}^d \to \mathbb{R}\), and define

\[ \left(\frac{\partial y}{\partial x_1}\right)_{x_2, \dots, x_d} = \partial_1 f, \quad \left(\frac{\partial^2 y}{\partial x_1\partial x_2}\right)_{x_3, \dots, x_d} = \partial_1\partial_2 f, \quad \dots \]

In particular, we have

\[dy = \sum_{i=1}^d (\partial_{x_i} y)_{x_1, \dots, x_d} dx_i\]

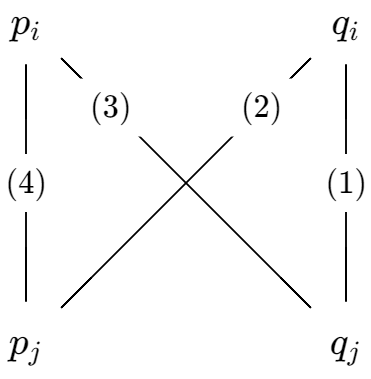

Exercise 1 Based on the following diagram, prove that if we have three smooth scalar functions \(x, y, z\) on a smooth[2] 2D surface, such that the contour lines of any two of them are linearly independent, then \(\left(\frac{\partial x}{\partial z}\right)_y\left(\frac{\partial y}{\partial x}\right)_z\left(\frac{\partial z}{\partial y}\right)_x = -1\). Generalize this to higher dimensions.

[2] It is sufficient to assume the surface and the scalar functions are \(C^2\), but we need not worry about it, because everything is smooth in classical thermodynamics, except at phase transitions.
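Without giving away the proof, the identity can be checked on a familiar instance. Take a tank of ideal gas with \(N\) fixed, so that the state space is 2D and \(PV = k_B N T\), and set \((x, y, z) = (P, T, V)\):

\[\left(\frac{\partial P}{\partial V}\right)_T = -\frac{k_B N T}{V^2} = -\frac PV, \quad \left(\frac{\partial T}{\partial P}\right)_V = \frac{V}{k_B N}, \quad \left(\frac{\partial V}{\partial T}\right)_P = \frac{k_B N}{P}\]

\[\left(\frac{\partial P}{\partial V}\right)_T \left(\frac{\partial T}{\partial P}\right)_V \left(\frac{\partial V}{\partial T}\right)_P = -\frac PV \cdot \frac{V}{k_B N} \cdot \frac{k_B N}{P} = -1\]

The sign is the part that naive “cancelling of differentials” gets wrong.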

Constraint

A constraint is an equation of form \(f(A, B, C, \dots) = f_0\), where \(f\) is a mathematical function, \(A, B, C, \dots\) are macroscopic properties, and \(f_0\) is a constant.

For example, if we have two tanks of gas in thermal contact, then the constraint is as follows:

\[ \begin{cases} V_1 &= V_{1,0} \\ V_2 &= V_{2,0} \\ U_1 + U_2 &= U_{1,0} + U_{2,0} \\ \end{cases} \]

meaning that the volume of each tank of gas is conserved, and the sum of their energy is also conserved.

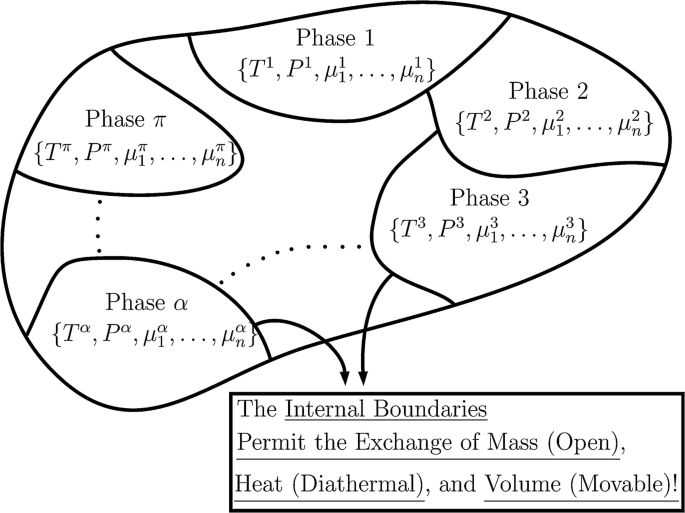

The constraints on a compound system are determined by the contacts between its subsystems.
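For the two tanks in thermal contact, the effect of the constraint can be sketched numerically: the volumes are frozen, only the split of the total energy is free, and we search for the split that maximizes the total entropy. The sketch assumes monatomic ideal gases with \(\hat c_V = 3/2\), units in which \(k_B = 1\) and \(\Phi = 1\), and hypothetical tank sizes. The ternary search is just one convenient way to maximize a concave function.

```python
import math

C_V = 1.5  # \hat c_V for a monatomic ideal gas (assumed); units with k_B = 1, Phi = 1


def entropy(U, V, N):
    """Ideal-gas entropy S(U, V, N) in units where k_B = 1 and Phi = 1."""
    return N * math.log((V / N) * (U / (C_V * N)) ** C_V)


def total_entropy(U1, U_total, tank1, tank2):
    """Entropy of the compound system when tank 1 holds energy U1."""
    (V1, N1), (V2, N2) = tank1, tank2
    return entropy(U1, V1, N1) + entropy(U_total - U1, V2, N2)


def argmax_concave(f, lo, hi, iterations=200):
    """Ternary search for the maximizer of a strictly concave function on (lo, hi)."""
    for _ in range(iterations):
        a = lo + (hi - lo) / 3.0
        b = hi - (hi - lo) / 3.0
        if f(a) < f(b):
            lo = a
        else:
            hi = b
    return 0.5 * (lo + hi)


tank1 = (1.0, 1.0)  # (V_1, N_1), hypothetical values
tank2 = (2.0, 3.0)  # (V_2, N_2), hypothetical values
U_total = 12.0      # U_{1,0} + U_{2,0}

U1 = argmax_concave(lambda u: total_entropy(u, U_total, tank1, tank2),
                    1e-9, U_total - 1e-9)
U2 = U_total - U1
T1 = U1 / (C_V * tank1[1])  # from U = \hat c_V N T with k_B = 1
T2 = U2 / (C_V * tank2[1])
print(U1, U2, T1, T2)
```

The search never looks at temperature, yet the maximizing split should leave the two tanks at (numerically) the same temperature. Changing `U_total` changes the answer, which is the sense in which the equilibrium is a function of the constraint.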

Contacts

If we only have systems isolated in a perfect vacuum, then nothing interesting will happen. If two systems are perfectly connected, then they will never be brought apart. A contact allows two systems to interact, without destroying their individuality. It allows two systems to communicate, without becoming literally one system. Economically, contacts are trade contracts.

In general, the effect of a contact is to reduce the number of constraints by one. For example, a metal rod between two pistons reduces the two constraints

\[V_1 = V_{1,0}, \quad V_2 = V_{2, 0}\]

into one constraint: \[V_1 + V_2 = V_{1,0} + V_{2,0}\]

As another example, in a tank of three kinds of gas \(N_2, H_2, NH_3\), allowing a single chemical reaction \(N_2 + 3H_2 \rightleftharpoons 2NH_3\) reduces three constraints

\[ N_{N_2} = N_{N_2, 0}, \quad N_{H_2} = N_{H_2, 0}, \quad N_{NH_3}= N_{NH_3, 0} \]

into two:

\[ N_{N_2} - N_{N_2, 0} = (N_{H_2} - N_{H_2, 0})/3, \quad N_{N_2} - N_{N_2, 0} = -(N_{NH_3} - N_{NH_3, 0})/2 \]
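A compact way to see that only one degree of freedom is left is to parametrize the allowed states by the extent of reaction \(\xi\) from the quick reference: each species changes by its stoichiometric coefficient times \(\xi\), negative for the species consumed and positive for the species produced. The following sketch uses hypothetical starting amounts.

```python
# Stoichiometric coefficients for N2 + 3 H2 <-> 2 NH3 (negative means consumed).
NU = {"N2": -1, "H2": -3, "NH3": +2}


def amounts(initial, xi):
    """Particle numbers after the reaction has advanced by extent xi."""
    return {species: initial[species] + NU[species] * xi for species in NU}


initial = {"N2": 10.0, "H2": 30.0, "NH3": 0.0}  # hypothetical starting amounts
after = amounts(initial, xi=2.5)

# Change of each species relative to its starting amount.
delta = {species: after[species] - initial[species] for species in NU}
print(after, delta)
```

Every state reachable this way keeps the ratios \(\Delta N_{N_2} : \Delta N_{H_2} : \Delta N_{NH_3} = -1 : -3 : +2\), so the three particle numbers are tied together by two constraints and a single free parameter \(\xi\).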

A contact need not be a plain sum. For example, if we have two pistons of the same area, connected by a lever, such that pushing on one piston by \(\Delta x\) pulls on the other piston by \(2 \Delta x\), then the constraint becomes \(2(V_1 - V_{1,0}) + (V_2 - V_{2,0}) = 0\). More generally, a contact can be nonlinear: by designing a series of levers, gears, and chains, we can realize any constraint \(f(V_1, V_2) = f(V_{1,0}, V_{2,0})\) for any smooth function \(f\).

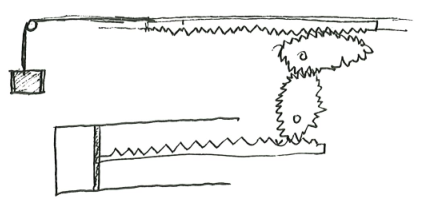

Compound systems

A compound system is nothing more than several systems connected. If we know the connections, and the entropy function of each subsystem, then we know everything about the compound system. The number of degrees of freedom (DOF) of the compound system is the sum of the DOF of the subsystems, minus the number of independent constraints.

For example, in the adiabatic expansion of a tank of ideal gas, we are really studying one compound system made of 3 subsystems:

- a tank of ideal gas (thermodynamic system),

- a lump of mass in gravity (mechanical system),

- with a gear system between them (contact).

The gear system is designed with gear-ratio that varies as the system turns, in just the right way such that the system is always in equilibrium no matter the position of the piston, so that it really has no preference of going forwards or backwards.

We typically think of the tank of ideal gas itself as the thermodynamic system, and the other parts as “the environment”, but we should consider one single compound system, properly speaking, of which the tank of ideal gas is merely a sub-system. This way, we can state directly that the entropy of the entire compound system is maximized.

Example 1 (heat-engine-and-environment compound system) A heat engine is a thermodynamic system that is used as a component of a larger compound system. The large system contains 4 parts: two energy baths, one heat engine, and one carefully designed mechanical system acting as energy storage.

If you only want a heat engine that works, then the energy storage does not need to be carefully designed. However, if you want a Carnot heat engine, i.e. at maximal efficiency, then the energy storage must be designed to be exactly right. It must be designed to follow the exact parameters of the heat engine, as well as the temperatures of the two energy baths. If any of those is ignored, the energy storage would fail to “mesh” with the rest of the compound system, and cause waste.

This is why a heat engine must be a component of a larger compound system. Every part depends on every other part. The energy storage unit is just as important and precisely designed as the heat engine is.

Equilibrium, virtual vs actual states

Freedom is an iron cage.

Constraints set it free again.

On the African savannah, there lived a bunch of meerkats. Meerkats love to stand on the tallest place.

At first, they could stand wherever they wanted, so they all stood on one single hill. It was crowded. A blind lion who had memorized the landscape came and ate all of them.

Then humans came and added long walls that divided the savannah into thin stripes. Now each meerkat’s location is determined by the stripe in which it happened to fall. The blind lion could find the meerkat if he knew the location of the stripe. In other words, optimizing for height, when there is one constraint, leads to one dimension of uncertainty.

This is a subtle point, so I will say it again. If you want to optimize for a quantity, and you don’t have a constraint, then you would always go to the globally best solution. The whole space of possibilities is open to you, but you don’t need them. Those states are “virtual”, because they are never observed, even though they are out there.

But if you have one constraint, then you have one unique solution for each possible setting of constraint. Suddenly a lot of those virtual states become real. You are still not free, but at least now you have a puppet master.

In classical thermodynamics, only equilibrium states are “real”. Nonequilibrium states are “virtual”. In Lagrangian mechanics, only stationary-action paths are real, and the other paths are virtual. You can imagine that if you throw a rock upwards, it might execute a complex figure-8 motion before returning to the ground again, but that’s a virtual path. The only real path is the unique virtual path that stationarizes the action integral.

Similarly, in classical thermodynamics, you could imagine that a tank of gas contains all its gas on the left side. Its entropy is just \(S(U, V/2, N)\), but that’s a virtual state that does not maximize entropy under constraint. The unique entropy-maximizing virtual state is the real state.

For every constraint, there are many nonequilibrium states that satisfy the constraint, but only one equilibrium entropy, and so equilibrium entropy is a function of constraints, even though the entropy function itself is not. It optimizes all its complexities away, allowing us to know it through just its external constraints.

Some common misconceptions

Thermodynamics is not statistical mechanics. Forget about molecules and atoms. Forget about statistics and statistical mechanics. Forget about \(S = k_B\ln \Omega\) or \(S = -\sum_i p_i \ln p_i\). Randomness does not exist in classical thermodynamics.

Forget about the first law of thermodynamics. Energy is nothing special. The conservation of energy is no more important than the conservation of volume, or the conservation of electric charge.

Forget about heat. Heat does not exist – it is not a noun, not even an adjective, but an adverb at most. The theory of caloric was already disproven in 1798 by the cannon-boring experiment.

Heat energy and work energy are both misnomers. Neither is a type of energy. Instead, they are types of energy-flow. We should speak of only the heatly flow of energy and the workly flow of energy. In this way, both “heat” and “work” are revealed to be actually adverbs. This is a bit awkward, so we will continue to speak of “heat” and “work”, but you should understand that it’s a shorthand, and that there is neither “heat energy” nor “work energy”.

To perform work, one must perform work upon something. In other words, there is no such thing as “system A performed work”. There is really “some energy and length are exchanged between A and B, in compliance with a constraint equation, such that the total entropy of the compound system has remained constant”.

Forget about time. Time does not exist in classical thermodynamics. We can say nothing at all about what happens between equilibria. We can only say, “This is an equilibrium, but that is not an equilibrium.”. That is all we can say. See this, and you will see thermodynamics aright. As to what happened “between them”, that we must pass over in silence.

The name “thermodynamics” is a complete misnomer, because heat does not exist (thus no “thermo-”) and time does not exist (thus no “-dynamics”). If I am allowed a bit of name-rectification, I will call it “entropo-statics”.

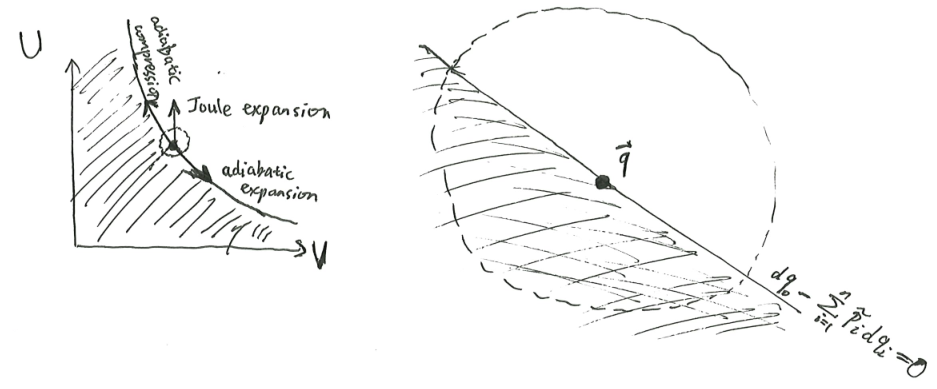

If time does not exist, you ask, what do we mean when we study Joule expansion? That is, when we take half a tank of gas, and suddenly open the middle wall and wait until the gas equilibrates in the entire tank?

Quiet. We did not open the middle wall. We did not wait. Gas did not expand. The past did not cause the future. Time is a stubbornly persistent illusion, and causality too.

In fact, we are considering two different problems in thermodynamics.

First problem: We are given a tank of gas with internal energy \(U = U_0\), molar amount \(n = n_0\), and constraint \(V \leq \frac 12 V_0\). What is its equilibrium state? Answer: The state that maximizes entropy under the constraints: \[ \begin{cases} \max S(U, n, V) \\ V \leq \frac 12 V_0 \\ U = U_0 \\ n = n_0 \end{cases} \]

where \(S(U, n, V)\) is the entropy of the gas when its state properties are \(U, n, V\).

Similarly, the second problem is another constraint-optimization problem: \[ \begin{cases} \max S(U, n, V) \\ V \leq V_0 \\ U = U_0 \\ n = n_0 \end{cases} \]

That the two different problems seem to “follow one from another” is an illusion. In reality, they only appear to follow one another because this is what we observe in the real world: one equilibrium follows another. In equilibrium thermodynamics, equilibria do not follow one another – each stands alone.
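The two problems can be solved side by side, with no reference to one happening “before” the other. The sketch below assumes a monatomic ideal gas (\(\hat c_V = 3/2\)), writes the entropy in molar form up to an additive constant that cancels when \(n\) is fixed, and uses hypothetical values for \(U_0, n_0, V_0\). The grid search is only a stand-in for “maximize under the constraint”.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)
C_V = 1.5        # \hat c_V for a monatomic gas (assumed)


def entropy(U, n, V):
    """Ideal-gas entropy in molar form, up to a constant that cancels at fixed n."""
    return n * R * math.log((V / n) * (U / (C_V * R * n)) ** C_V)


def max_entropy(U0, n0, V_max, steps=1000):
    """Maximize S(U0, n0, V) over a grid of admissible volumes 0 < V <= V_max."""
    candidates = [V_max * k / steps for k in range(1, steps + 1)]
    best_V = max(candidates, key=lambda V: entropy(U0, n0, V))
    return best_V, entropy(U0, n0, best_V)


U0, n0, V0 = 3000.0, 1.0, 0.02  # hypothetical values (J, mol, m^3)

V_first, S_first = max_entropy(U0, n0, 0.5 * V0)  # first problem: V <= V0 / 2
V_second, S_second = max_entropy(U0, n0, V0)      # second problem: V <= V0
print(V_first, V_second, S_second - S_first)
```

Because entropy increases with \(V\), each problem is solved on the boundary of its constraint, and the two equilibrium entropies differ by \(n_0 R \ln 2\). Nothing in either calculation refers to the other.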

Time only appears in the following sense: we observe a real-world system, like a car engine, and notice that its motion seems to consist of a sequence of equilibria. Not quite true equilibria, since true equilibria do not change. Maybe “pseudo-equilibria”? Too dismissive. Let’s call them “quasi-equilibria” instead.

Now, keeping those “quasi-equilibria” in mind, we muddle around the ocean of classical thermodynamics, until we have found some equilibria that resemble the quasi-equilibria we have in mind. And so, from the bottom of the ocean, we pick up one equilibrium, then another, then another. Then we string together these little equilibria along a number line like a pearl necklace. We run our fingers over these pearls and delight in their “motion”, like flipping the pages of a stop-motion book and shouting, “Look, it is moving!”.

The three laws

More accurately: one law and two non-laws.

Second law

For the equilibrium of any isolated system it is necessary and sufficient that in all possible variations of the state of the system which do not alter its energy, the variation of its entropy shall either vanish or be negative.

The second law of thermodynamics is all-important: maximizing entropy is all of classical thermodynamics. All other parts are just tricks for maximizing entropy.

There are, however, actually two aspects of the second law, subtly different. One of them is static, while the other is dynamic:

- Static: A thermodynamic system is described by a function on the state space, called the entropy function. Each system can be placed under many different forms of constraints. Under each possible constraint, the only physically observable state is the state that maximizes entropy under constraint.

- Dynamic: The entropy of a thermodynamic system does not decrease over time.

There is no difficulty with the static statement, but many difficulties with the dynamic statement, since it involves time, which really does not exist in classical thermodynamics. Nevertheless, since time is so important to the rest of physics, physicists, especially physics teachers, have repeatedly tried to hack it back into the theory, resulting in predictable and endless confusions. Since we do not compromise with intuition, in the rest of this essay, we will use the static formulation as much as possible.

First law

As a student, I read with advantage a small book by F. Wald entitled “The Mistress of the World and her Shadow”. These meant energy and entropy. In the course of advancing knowledge the two seem to me to have exchanged places. In the huge manufactory of natural processes, the principle of entropy occupies the position of manager, for it dictates the manner and method of the whole business, whilst the principle of energy merely does the book-keeping, balancing credits and debits.

The first law of thermodynamics is entirely trivial. Energy is nothing special! Energy is just a conserved quantity, one among equals, much like volume, mass, and many other things… Every conserved quantity is equally conserved,3 so it does not deserve a special thermodynamic law. You might as well say “conservation of mass” is “the second-first law of thermodynamics” and “conservation of volume” is “the third-first law of thermodynamics”, and “conservation of electrons” and “conservation of protons” and “conservation of length” (if you are studying a thermodynamic system restricted to move on a line) and “conservation of area” (if you are studying a thermodynamic system restricted on the surface of a lake), and so on…

3 The first law of thermodynamics had always struck me as oddly out of place, almost like a joke I could not catch, like

All animals are equal, but some animals are more equal than others

but with

All conserved quantities are conserved, but some quantities are more conserved than others.

I kept waiting for the textbooks, or the teachers, or someone to drop the act and confess, “Actually, we were joking – conservation of energy really is not that special, and we were just bored with standard physics and wanted to confuse you with a cute magic trick before showing you what standard physics really is saying.”. Slowly, I realized that there is no joke – conservation of energy is unironically treated as special not just by the students but even by the teachers. I had to figure out for myself how the joke really works, and it required me to rebuild thermodynamics according to my preferences.

This sounds extraordinary, but that is merely how classical thermodynamics works. The first law of thermodynamics does not deserve its title. It should be demoted to an experimental fact and not a law. Just to drive the point home, I wrote an entire sci-fi worldbuilding sketch about an alien species for which it is the conservation of volume that is fundamental, not energy, and which discovered stereodynamics. If you can laugh at the mistaken importance they give to the conservation of volume, maybe you can laugh at the mistaken importance we give to the conservation of energy too.

The proper place for the law of conservation of energy is not classical thermodynamics, but general physics, because energy is nothing special inside classical thermodynamics, but it is extremely special if we zoom out to consider the whole of physics. Whereas in classical thermodynamics, systems conserve energy, and volume, and mass, and electron-number, and proton-number, and… when you move outside of thermodynamics, such as when you add in electrodynamics, special relativity, and quantum mechanics, all kinds of conservation laws break down. You don’t have conservation of mass, or number of electrons, or even volume, but energy is always conserved.

This explains the long confusion around the conservation of energy. Within equilibrium thermodynamics, every conserved quantity is conserved, yes, but physicists are less interested in theoretical purity than mathematicians. They know that outside, energy is a more special conserved quantity than others, and so they can’t help but feel like energy should be treated as special inside thermodynamics too.

Zeroth law

Now that the first law has been dispelled, we can dispel the zeroth law of thermodynamics too. If energy falls from grace, so must its shadow, temperature.

Theorem 1 (general zeroth law) If \(S_1(X_1, Y) + S_2(X_2, Y)\) is maximized under the constraint \(X_1 + X_2 = X\), then \((\partial_X S_1)_Y = (\partial_X S_2)_Y\).

| maximized quantity \(S\) | conserved quantity \(X\) | derivative \((\partial_X S)_Y\) |
|---|---|---|
| entropy | energy | inverse temperature \(\beta\) |
| entropy | volume | \(\beta P\) |
| entropy | particles | \(-\beta \mu\), where \(\mu\) is chemical potential |
| entropy | surface area | \(-\beta\sigma\), where \(\sigma\) is surface tension |
| production value | raw material | marginal value |
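
Theorem 1 can be checked numerically. The following is a minimal sketch, assuming two hypothetical monatomic ideal gases at fixed volume with entropies \(S_i(U_i) = \frac 32 N_i \ln U_i\) (units where \(k_B = 1\)). The names `S1`, `S2`, `argmax_concave`, and the particular numbers are illustrative choices, not part of the theorem.

```python
import math

# Hypothetical toy entropies: two monatomic ideal gases at fixed volume,
# S_i(U_i) = 1.5 * N_i * ln(U_i), in units where k_B = 1.
N1, N2 = 1.0, 2.0
U_TOTAL = 9.0  # the conserved quantity X = X_1 + X_2

def S1(u):
    return 1.5 * N1 * math.log(u)

def S2(u):
    return 1.5 * N2 * math.log(u)

def total_entropy(u1):
    """Entropy of the compound system when system 1 holds u1 of the energy."""
    return S1(u1) + S2(U_TOTAL - u1)

def argmax_concave(fn, lo, hi, iters=200):
    """Ternary search for the maximizer of a concave function on [lo, hi]."""
    for _ in range(iters):
        a = lo + (hi - lo) / 3
        b = hi - (hi - lo) / 3
        if fn(a) < fn(b):
            lo = a
        else:
            hi = b
    return 0.5 * (lo + hi)

def derivative(fn, x, h=1e-6):
    """Central finite difference."""
    return (fn(x + h) - fn(x - h)) / (2 * h)

u1_star = argmax_concave(total_entropy, 1e-9, U_TOTAL - 1e-9)
beta1 = derivative(S1, u1_star)            # marginal entropy of energy, system 1
beta2 = derivative(S2, U_TOTAL - u1_star)  # marginal entropy of energy, system 2
print(u1_star, beta1, beta2)
```

At the entropy-maximizing split, the two marginal entropies agree, which is the statement "equal temperature" in the first row of the table. Replacing energy by any other conserved quantity changes only the labels.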

Third law

The third law is rarely, if ever, used in classical thermodynamics, and its precise meaning is still unclear. It seems to me that its proper place is not thermodynamics, but quantum statistical mechanics, where it states that a substance, when at the lowest possible energy (ground state), has finite entropy – Einstein’s formulation of the third law. (Klimenko 2012)

An economic interpretation

Here is an economic interpretation of classical thermodynamics. There are other possible interpretations, and we will use the others in this essay.

In this interpretation, the laws of nature become the CEO of a company. Every conserved quantity is a commodity. The company has some commodity. Commodities themselves have no intrinsic value. Instead, the company is valued by a certain accounting agency. The CEO’s job is to move around the commodities so that the accounting agency gives it the highest book-value.

A compound system is a conglomerate company: a giant company made of little companies. If entropy is extensive, then it means the total book-value for the conglomerate is the sum of the book-value of each subsidiary company. Otherwise, entropy is nonextensive, and the accounting agency believes that the conglomerate has corporate synergy.

The inverse temperature \(\beta\) is the marginal value of energy:

\[\beta = \frac{d(\text{value of a sub-company})}{d(\text{energy owned by the sub-company})}\]

The pressure \(P\), multiplied by \(\beta\), is the marginal value of space:

\[\beta P = \frac{d(\text{value of a sub-company})}{d(\text{volume owned by the sub-company})}\]

It might appear odd to write \(\beta P\), but in the entropy-centric view of thermodynamics, it is the quantity \(\beta P\) that is fundamental, and in comparison, the pressure \(P\) is less fundamental, as a ratio \(P := \frac{\beta P}{\beta}\). Why, then, do we speak of pressure \(P\) and temperature \(T\), instead of \(\beta\) and \(\beta P\)? It is because classical thermodynamics is traditionally understood as energy-centric.

Basic consequences

Thermodynamic force/suction

Since nature is entropy-maximizing, if entropy can be increased by moving some energy from one system to another, it will happen. Similarly for space. It would seem as if there is a thermodynamic suction that is sucking on energy, and the side with the higher thermodynamic suction tends to absorb it.

\[ \text{thermodynamic suction of $X$} = \left(\frac{\partial S}{\partial X}\right)_{\text{non-}X} \]

In energy-centric thermodynamics, we use thermodynamic force, or thermodynamic potential, defined by

\[ \text{thermodynamic force of $X$} = -\frac{\left(\frac{\partial S}{\partial X}\right)_{\text{non-}X}}{\left(\frac{\partial S}{\partial U}\right)_{\text{non-}U}} \]

For example, for a tank of gas, the macroscopic properties are \(U, V, N\), and so it has three thermodynamic suctions:

\[ \begin{aligned} \beta &= (\partial_U S)_{V, N}\\ \beta P &= (\partial_V S)_{U, N}\\ -\beta \mu &= (\partial_N S)_{U, V} \end{aligned} \]

and, with the sign convention of the definition above, the thermodynamic forces associated with \(V, N\) are \(-P\) and \(\mu\).
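
As a rough illustration, the three suctions of a tank of gas can be computed by finite differences. This sketch assumes a monatomic ideal gas with entropy \(S = N[\ln(V/N) + \frac 32 \ln(U/N)]\), dropping an additive constant per particle and setting \(k_B = 1\). The helper `partial` and the state values are hypothetical.

```python
import math

def entropy(U, V, N):
    """Monatomic ideal gas entropy, up to an additive constant per particle (k_B = 1)."""
    return N * (math.log(V / N) + 1.5 * math.log(U / N))

def partial(fn, args, i, rel=1e-6):
    """Central difference of fn with respect to args[i], the other arguments held fixed."""
    h = rel * args[i]
    up = list(args)
    dn = list(args)
    up[i] += h
    dn[i] -= h
    return (fn(*up) - fn(*dn)) / (2 * h)

U, V, N = 3.0, 2.0, 1.0  # arbitrary illustrative state
state = (U, V, N)

beta = partial(entropy, state, 0)           # suction of energy
beta_P = partial(entropy, state, 1)         # suction of volume
minus_beta_mu = partial(entropy, state, 2)  # suction of particles

# Energy-centric quantities are ratios of suctions.
T = 1 / beta
P = beta_P / beta
mu = -minus_beta_mu / beta
print(T, P, mu)
```

The ratios recover the familiar \(PV = NT\) and \(U = \frac 32 NT\). The value of `mu` depends on the dropped additive constant, so only its differences between states are meaningful in this sketch.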

Unfortunately, the notations for thermodynamic suctions are far from elegant. The first one, \(\beta\), is the inverse of temperature. The second one, \(\beta P\), is \(\beta\) multiplied by pressure. The third one is truly the most annoying, as it not only involves \(\beta\), but also a negative sign. To see how the notation came about, we can write it out in differential form:

\[dS = \beta dU + \beta P dV - \beta \mu dN\]

Conventional notations are energy-centric, so we rewrite it to single out \(dU\):

\[ dU = TdS + (- PdV) + \mu dN \]

Now we see how the notation came about: \(TdS\) is the heat energy-flow into the system, \(-PdV\) is the mechanical work energy-flow into the system, and \(\mu dN\) is the chemical energy-flow into the system.

If I could truly reform notation, I would redefine \(\beta\) as \(p_U\), meaning “the price of energy”, \(\beta P\) as \(p_V\), meaning “the price of volume”, \(-\beta\mu\) as \(p_N\), meaning “the price of particle”, and so on. In this notation, we have:

\[ \begin{aligned} dS &= p_U dU + p_V dV + p_N dN \\ dU &= p_U^{-1}dS - \frac{p_V}{p_U}dV - \frac{p_N}{p_U}dN \end{aligned} \]

Example 2 (photon gas with \(\mu = 0\)) Suppose we have a piston chamber, with its inner surface covered with silver, and there is a tiny speck of blackbody inside it, then the chamber would be filled with bouncing photons, in the form of a “photon gas”. The photons would reflect off the surface of the chamber, some absorbed and some emitted in turn, by the blackbody.

As usual for gas, the state of the system is determined by its internal energy, volume, and particle number: \(U, V, N\), with

\[dS = \beta dU + \beta PdV - \beta \mu dN\]

However, the photon gas is quite special, in that photons can be created and destroyed by the speck of blackbody, so at equilibrium, we must have \(\beta\mu = 0\), for otherwise, the system would be able to increase in entropy simply by creating/destroying more photons, and thus it is not in equilibrium.

This contrasts with the typical case with chemical gases like oxygen, where the particle number in a reaction chamber is constant, allowing \(\mu \neq 0\) even at equilibrium.
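
Because \(\beta\mu = 0\), the photon gas entropy depends on \(U, V\) only. The following sketch assumes the standard blackbody result \(S = \frac 43 a^{1/4} V^{1/4} U^{3/4}\), which is not derived in this essay, with the radiation constant \(a\) set to 1 in hypothetical units. It reads off the two remaining suctions numerically.

```python
A = 1.0  # radiation constant a, set to 1 in hypothetical units

def entropy(U, V):
    """Assumed photon-gas entropy S = (4/3) a^(1/4) V^(1/4) U^(3/4)."""
    return (4.0 / 3.0) * A**0.25 * V**0.25 * U**0.75

def partial(fn, args, i, rel=1e-6):
    """Central difference of fn with respect to args[i], the other arguments held fixed."""
    h = rel * args[i]
    up = list(args)
    dn = list(args)
    up[i] += h
    dn[i] -= h
    return (fn(*up) - fn(*dn)) / (2 * h)

U, V = 5.0, 2.0  # arbitrary illustrative state

beta = partial(entropy, (U, V), 0)    # suction of energy
beta_P = partial(entropy, (U, V), 1)  # suction of volume

T = 1 / beta
P = beta_P / beta
print(T, P)
```

Under this assumed entropy, the ratio of suctions gives the radiation pressure \(P = U/(3V)\), and the energy suction gives \(U = aVT^4\).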

Helmholtz free entropy

When we have a small system connected to a large system, while we can solve its equilibria by maximizing the plain old entropy for the full compound system, it is often easier conceptually to define a “free entropy” for the small system, and treat the large system as a bath. This is similar to how one can solve for the motion of a cannonball on earth by describing a constant gravitational acceleration, even though we could have solved for the full cannonball-earth two-body system.

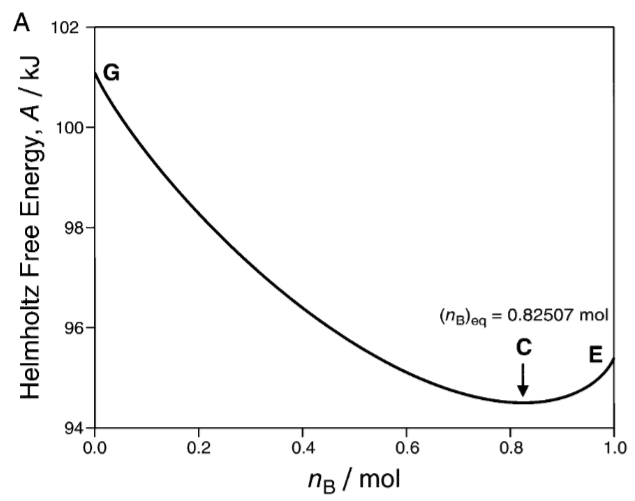

Definition 1 (Helmholtz free entropy) The Helmholtz free entropy is the convex dual of entropy with respect to energy:

\[ f(\beta, X) = \max_U [S(U, X) - \beta U] \tag{1}\]

where \(U\) is its internal energy, and \(X\) are some other macroscopic properties.

Of historical importance is the Helmholtz free energy \(F := - T f\), or equivalently,

\[ F = \min_U [U - TS(U, X)] \tag{2}\]
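
Equations (1) and (2) can be evaluated numerically. The sketch below assumes an ideal-gas-like entropy \(S(U, V) = N \ln V + \frac 32 N \ln U\) with \(k_B = 1\). The function names and the search interval are hypothetical choices.

```python
import math

N = 1.0  # particle number, k_B = 1

def S(U, V):
    """Assumed toy entropy, up to an additive constant."""
    return N * math.log(V) + 1.5 * N * math.log(U)

def argmax_concave(fn, lo, hi, iters=200):
    """Ternary search for the maximizer of a concave function on [lo, hi]."""
    for _ in range(iters):
        a = lo + (hi - lo) / 3
        b = hi - (hi - lo) / 3
        if fn(a) < fn(b):
            lo = a
        else:
            hi = b
    return 0.5 * (lo + hi)

def free_entropy(beta, V, u_max=1e3):
    """Return (f, U*) with f = max_U [S(U, V) - beta * U], as in equation (1)."""
    objective = lambda U: S(U, V) - beta * U
    u_star = argmax_concave(objective, 1e-9, u_max)
    return objective(u_star), u_star

beta, V = 0.5, 2.0
f, u_star = free_entropy(beta, V)

F = -f / beta                            # Helmholtz free energy F = -T f
F_direct = u_star - S(u_star, V) / beta  # U - T S evaluated at the optimal U*
print(f, u_star, F, F_direct)
```

For this toy entropy the maximizer is \(U^* = \frac 32 N / \beta\), the usual equipartition result, and the two expressions for \(F\) agree.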

Theorem 2 (maximize Helmholtz free entropy) If a thermodynamic system is in energy-contact with an energy bath with price \(\beta\), and is held under constraint on the state by \(C(X) = 0\), then the system equilibrates at

\[ \begin{cases} \max_{U, X} [S(U, X) - \beta U] = \max_{X} f(\beta, X)\\ C(X) = 0 \end{cases} \]

That is, the system always equilibrates at the maximal Helmholtz free entropy state that satisfies the constraint.

Equivalently, the system minimizes its Helmholtz free energy under constraint.

If we prove the case for Helmholtz free entropy, then by multiplying it by \(-T\), we find that the system minimizes its Helmholtz free energy under constraint. So it remains to prove the case for Helmholtz free entropy.

We prove the case where there is no constraint on the state of the system. The proof for the case with constraint is similar.

Suppose the system starts out at \(\beta, U_0, X\). Then the equilibrium condition is

\[ \begin{cases} \max (S_{bath} + S) \\ U_{bath} + U = U_{bath, 0} + U_{0} \end{cases} \]

The entropy of the bath is

\[S_{bath} = S_{bath, 0} + \beta Q\]

where \(Q = U_{bath} - U_{bath, 0}\) is the amount of energy received by the bath as heat.

Plugging this back in, the equilibrium condition simplifies to

\[ \max_U [\beta (U_0 - U) + S(U, X)] \]

which is the desired result.

Theorem 3 (Helmholtz free energy difference is available for work) Consider the following method of extracting mechanical energy. Connect the system to an energy bath at the energy price \(\beta\), and to a mechanical system of arbitrary design. The system starts at \(\beta, U_0, X_0\) and ends at \(\beta, U_1, X_1\). No matter how the mechanical system is designed, and no matter whether the process is reversible or not, we have

\[ W\leq F(\beta, U_0, X_0) - F(\beta, U_1, X_1) \]

where \(W\) is “mechanical work done by the system”, that is, the increase in internal energy of the mechanical system. This is an equality when the process is reversible.

By conservation of energy and the second law,

\[ \begin{cases} U_1 = U_0 - (W + Q) \\ \beta Q + S(U_1, X_1) \geq S(U_0, X_0) \end{cases} \]

which simplifies to the result.

If the process is reversible, then the entropy before and after must be equal, which gives us

\[ \begin{cases} U_1 = U_0 - (W + Q) \\ \beta Q + S(U_1, X_1) = S(U_0, X_0) \end{cases} \]

which simplifies to the result.

This result is typically interpreted as saying that \(W_{\max} = -\Delta F\), that is, for a system in constant thermal equilibrium with an energy bath of constant temperature, the decrease in Helmholtz free energy of the system is the maximal mechanical work extractable from the system. Incidentally, this explains the odd name of “free energy” – “free” as in “free to do work” to contrast with the other parts of internal energy, which are chained up and not free to do work. Energy is born free, and eventually everywhere it is in chains.
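
To make the bound of Theorem 3 concrete, here is a sketch for an isothermal expansion of an ideal gas from \(V_0\) to \(V_1\). It assumes the toy entropy \(S = N \ln V + \frac 32 N \ln U\) with \(k_B = 1\), and the numbers are arbitrary. It compares a reversible expansion against a free (Joule) expansion.

```python
import math

N, T = 1.0, 2.0    # particle number and bath temperature, k_B = 1
V0, V1 = 1.0, 3.0  # initial and final volumes

def S(U, V):
    """Assumed toy entropy, up to an additive constant."""
    return N * math.log(V) + 1.5 * N * math.log(U)

def F(U, V):
    """Helmholtz free energy U - T S at the bath temperature T."""
    return U - T * S(U, V)

# For an ideal gas, U = 1.5 N T depends only on T, so it is the same before and after.
U = 1.5 * N * T
bound = F(U, V0) - F(U, V1)

def reversible_work(steps=100_000):
    """Midpoint-rule integral of P dV along the isotherm, with P = N T / V."""
    dV = (V1 - V0) / steps
    return sum(N * T / (V0 + (k + 0.5) * dV) * dV for k in range(steps))

W_reversible = reversible_work()
W_free_expansion = 0.0  # gas expands into vacuum, no work is extracted
print(bound, W_reversible, W_free_expansion)
```

The reversible process saturates the bound, while the free expansion reaches the same final state and extracts nothing.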

Theorem 4 (envelope theorem for Helmholtz) For any inverse temperature \(\beta > 0\) and thermodynamic properties \(X\), let \(U^*\) be the optimal internal energy that maximizes Helmholtz free entropy. We have

\[ \begin{aligned} \beta &= (\partial_U S)_X|_{U=U^*(\beta, X), X = X} \\ df &= (\partial_X S)_U|_{U=U^*(\beta, X), X = X} dX - U^*(\beta, X) d\beta \end{aligned} \]

if \(S\) is differentiable and strictly concave at that point.

For the first equation, set the \(U\)-derivative of \(S(U, X) - \beta U\) to zero at the maximizer \(U^*\). For the second equation, apply the same no-arbitrage proof as in the proof of Hotelling’s lemma (see the essay on Analytical Mechanics).

Economically speaking, the second equation is a special case of the envelope theorem, just like Hotelling’s lemma.
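
The envelope theorem can be sanity-checked by finite differences. This sketch again assumes the toy entropy \(S(U, V) = N \ln V + \frac 32 N \ln U\), with \(V\) playing the role of \(X\). The helper names are hypothetical.

```python
import math

N = 1.0  # particle number, k_B = 1

def S(U, V):
    """Assumed toy entropy, up to an additive constant."""
    return N * math.log(V) + 1.5 * N * math.log(U)

def argmax_concave(fn, lo, hi, iters=200):
    """Ternary search for the maximizer of a concave function on [lo, hi]."""
    for _ in range(iters):
        a = lo + (hi - lo) / 3
        b = hi - (hi - lo) / 3
        if fn(a) < fn(b):
            lo = a
        else:
            hi = b
    return 0.5 * (lo + hi)

def solve(beta, V):
    """Return (f, U*) for f = max_U [S(U, V) - beta * U]."""
    objective = lambda U: S(U, V) - beta * U
    u_star = argmax_concave(objective, 1e-9, 1e3)
    return objective(u_star), u_star

def f(beta, V):
    return solve(beta, V)[0]

beta, V, h = 0.5, 2.0, 1e-4
_, u_star = solve(beta, V)

# Derivatives of the free entropy, re-optimizing U at every evaluation.
df_dbeta = (f(beta + h, V) - f(beta - h, V)) / (2 * h)
df_dV = (f(beta, V + h) - f(beta, V - h)) / (2 * h)

# Derivative of the plain entropy with U frozen at U*.
dS_dV = (S(u_star, V + h) - S(u_star, V - h)) / (2 * h)
print(df_dbeta, -u_star, df_dV, dS_dV)
```

Even though \(U^*\) is re-optimized whenever \(\beta\) or \(V\) moves, the first-order change in \(f\) is the same as if \(U^*\) had been frozen, which is what the second equation of Theorem 4 says.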

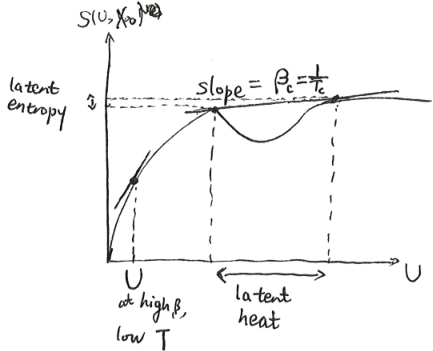

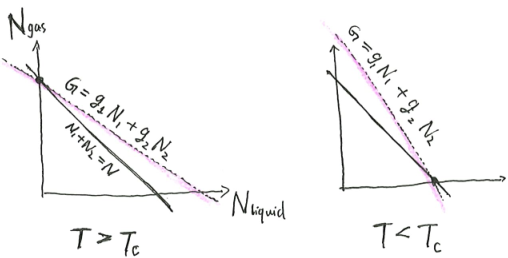

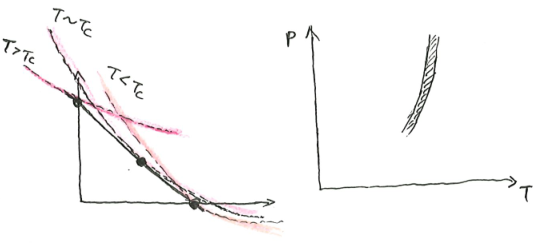

First-order phase transition

What happens if \(S\) is not differentiable and strictly concave? In this case, we do not have \(\beta = (\partial_U S)_X\). We have two possibilities.

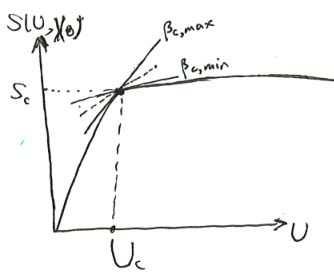

The first possibility is pictured as follows. There is a kink in the curve of \(S(U, X)\). At that point of critical internal energy \(U_c\), there is an entire interval of possible \(\beta\). What we would notice is that at that critical internal energy and critical entropy, the system can be in equilibrium with any heat bath with any temperature in the interval \([T_{c, min}, T_{c, max}]\). As far as I know, such systems do not exist, as all physically real systems have a unique temperature at all possible states.

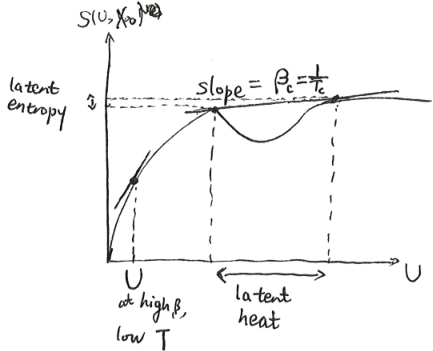

The second possibility is pictured as follows. There is a bump in the curve, such that we can draw a double tangent over the bump, with slope \(\beta_c\). At that critical inverse temperature, the system can be either at the lower tangent point, or the upper tangent point. It cannot be anywhere in-between, because as we saw, such points do not maximize \(S(U, X) - \beta U\), and thus are unstable.

For example, if we confine some liquid water in a vacuum chamber, and bathe it in a cold bath, then at its critical \(\beta_c\), it would split into two parts, one part is all ice, and the other part is all water, mixed in just the right proportion to give it the correct amount of total internal energy. As it loses internal energy, the ice part grows larger, until it is all ice, at which point the system has finally gotten over the bump, and could cool down further.

At the critical point, \((\partial_{\beta}f)_X\) changes abruptly. So if we plot \(\beta \mapsto f(\beta, X)\), the curve will kink there.
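
The jump can be seen in a toy model. The sketch below assumes a purely hypothetical entropy built as the upper envelope of two concave branches, \(2\sqrt U\) (a “cold” phase) and \(2\sqrt{2(U-1)}\) (a “hot” phase), chosen so that the double tangent has slope \(\beta_c = 1\) and touches at \(U = 1\) and \(U = 3\). It is not a model of water.

```python
import math

def S(U):
    """Toy entropy: upper envelope of two concave branches (hypothetical phases)."""
    cold = 2.0 * math.sqrt(U)
    hot = 2.0 * math.sqrt(2.0 * (U - 1.0)) if U > 1.0 else float("-inf")
    return max(cold, hot)

GRID = [k * 1e-3 for k in range(1, 20001)]  # U from 0.001 to 20

def equilibrium(beta):
    """Brute-force maximization of S(U) - beta * U over the grid; returns (f, U*)."""
    return max((S(U) - beta * U, U) for U in GRID)

# Slightly colder and slightly hotter than the critical beta_c = 1.
f_cold_side, U_cold_side = equilibrium(1.05)
f_hot_side, U_hot_side = equilibrium(0.95)
print(U_cold_side, U_hot_side)

# Sweep beta across the transition and record the equilibrium energies.
sweep = [equilibrium(b)[1] for b in (0.8, 0.9, 0.99, 1.01, 1.1, 1.2)]
print(sweep)
```

As \(\beta\) crosses 1, the equilibrium energy jumps across the interval between the two tangent points without ever landing inside it. Since \((\partial_\beta f)_X = -U^*\), this jump is the kink in \(\beta \mapsto f(\beta, X)\).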

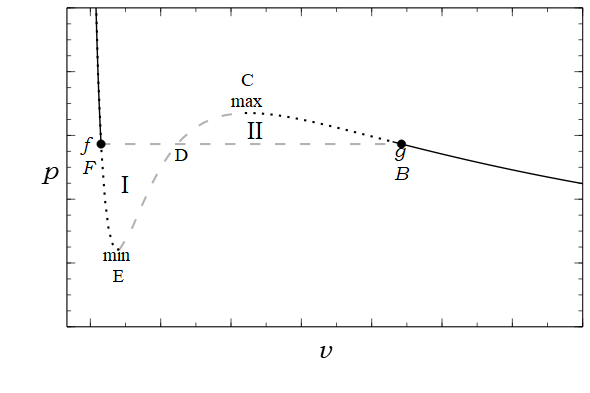

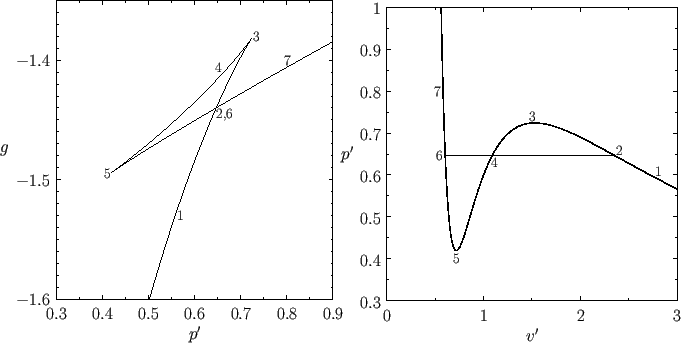

Theorem 5 (Maxwell equal area rule) In a first-order phase transition at a fixed temperature and varying pressure/volume, the \(P, V\) diagram has a horizontal line going from \((P_c, V_1)\) to \((P_c, V_2)\), such that

\[\int PdV = P_c(V_2-V_1)\]

Fix the system’s internal energy \(U\), and its temperature \(T\), and plot the \(V, S\) curve.

When pressure is at a critical value \(P_c\), the line of slope \(\beta P_c\) is tangent to the \(V \mapsto S(U, V)\) curve at two different points, with volumes \(V_1, V_2\). This is the first-order phase transition.

Now, move the system state from the first point to the second. During the process, \[\int PdV = \int (TdS -dU) = \int TdS = T \Delta S = T \beta P_c(V_2 - V_1) = P_c(V_2-V_1)\]

Other free entropies and energies

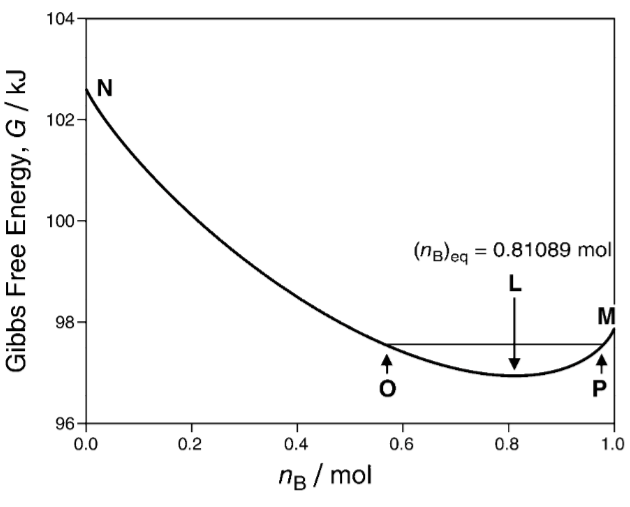

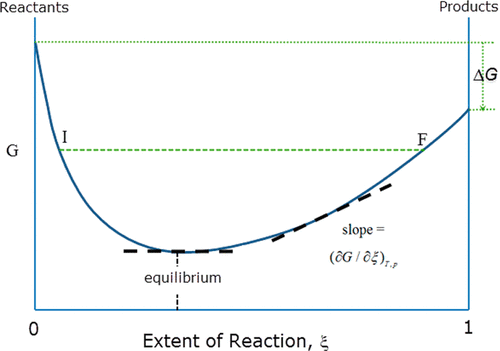

Consider a thermodynamic system whose entropy function is \(S(U, V, X)\), where \(U\) is the internal energy and \(V\) is the volume. Its Gibbs free entropy is

\[ g(\beta, \beta P, X) = \max_{U, V} (S(U, V, X) - \beta U - (\beta P)V) \]

In other words, it’s the convex dual of entropy with respect to energy and volume. Similarly, its Gibbs free energy is \(G = -g/\beta\).

Theorem 6 (Gibbs free entropy is maximized) Let a thermodynamic system be in equilibrium with an energy-and-volume bath of prices \(\beta, \beta P\). If the system has constraint on the state by \(C(X) = 0\), then the system equilibrates at

\[ \begin{cases} \max_{X} g(\beta, \beta P, X)\\ C(X) = 0 \end{cases} \]

And if \(S\) is strictly concave and differentiable at that point, then \(dg = (\partial_X S)_{U, V} dX - Ud\beta - V d(\beta P)\).

Theorem 7 (Gibbs free energy difference is available for work) Connect a system to an energy-and-volume bath at marginal entropies \(\beta, \beta P\), and a mechanical system of arbitrary design. The system starts at \(\beta, \beta P, X_0\) and ends at \(\beta, \beta P, X_1\). Then,

\[ W\leq G(\beta, \beta P, X_0) - G(\beta, \beta P, X_1) \]

where \(W\) is “work”, that is, the increase in internal energy of the mechanical system. If the process is reversible, then equality holds.

Theorem 8 (envelope theorem for Gibbs) For any inverse temperature \(\beta > 0\), any pressure \(P\), and other thermodynamic properties \(X\), let \(U^*, V^*\) be the optimal internal energy and volume that maximizes Gibbs free entropy, then

\[ \begin{aligned} \beta &= (\partial_U S)_{V, X} \\ \beta P &= (\partial_V S)_{U, X}\\ dg &= (\partial_X S)_{U, V} dX - U^* d\beta - V^* d(\beta P) \end{aligned} \]

if \(S\) is differentiable and strictly concave at that point. Here, the partial derivatives on the right sides are evaluated at \(U=U^*(\beta, \beta P, X), V=V^*(\beta, \beta P, X), X = X\).

Exercise 2 Prove the above theorems for Gibbs free entropy.

Similarly, if a system is in energy-volume-chemical contact with an energy-volume-chemical bath, then the following Landau free entropy is useful:

\[ \omega(\beta, \beta P, -\beta\mu_1, \dots, -\beta\mu_n) = \max_{U, V, N_1, \dots, N_n} \left(S(U, V, N_1, \dots, N_n, X) - \beta U - (\beta P)V - \sum_{i=1}^n (-\beta \mu_i) N_i \right) \]

In other words, it’s the convex dual of entropy with respect to energy, volume, and particle numbers.

Exercise 3 Formulate and prove the analogous theorems for Landau free entropy.

Exercise 4 If we consider a system, surrounded by gas, inside an adiathermal piston under an atmosphere, then we can consider the following form of free entropy: \(\tilde s(U, \beta P, X) := \max_{V} (S(U, V, X) - (\beta P)V)\). Formulate and prove the analogous theorems for this free entropy.

Take-home lessons:

- When a system is in contact with a \(Y\)-bath, then it is useful to consider the convex dual of the entropy with respect to \(Y\), that is, \(\max_Y (S(Y, X) - p_Y Y)\), where \(p_Y\) is the marginal entropy of \(Y\) of the bath. That is, the price of \(Y\), denominated in entropy, on the bath-market.

- Free entropy is maximized when the system equilibrates with a bath. Free energy is minimized.

- Change in free energy is the maximal amount of work extractable when the system equilibrates with both a bath and a mechanical system. This maximal amount of work is extracted precisely when the process is reversible. The process is irreversible precisely when less than maximal amount of work is extracted.

Maxwell relations

Since \(dS = \beta dU + \beta P dV\), we have the first Maxwell relation

\[ \partial_U \partial_V S = (\partial_V \beta)_U = (\partial_U(\beta P) )_V \tag{3}\]

In economic language, it states

\[ \partial_{q_i}\partial_{q_j} S = (\partial_{q_i} p_j)_{q_j} = (\partial_{q_j} p_i)_{q_i} \tag{4}\]

where \(q_i\) is the quantity of commodity \(i\), and \(p_i\) is its marginal utility. In economics, we usually prefer writing demanded quantity as a function of marginal price as

\[(\partial_{p_j}q_i)_{q_j} = (\partial_{p_i}q_j)_{q_i}\]

This is a symmetry of the cross-price elasticity of demand.4

4 Samuelson used the Maxwell relations, and other relations, to justify neoclassical economics. His idea is that, while utility functions are unobservable, and we do not have a scientific instrument to measure “economic equilibrium”, we can make falsifiable predictions from assuming that the economy is in equilibrium – such as the symmetry of the cross-price elasticity of demand.

For example, if \(i, j\) are noodles and bread, then \((\partial_{q_i} p_j)_{q_j}\) is how much the marginal price of bread would rise if I have a little more noodle. As noodles and bread are substitutional goods, we expect the number to be negative, meaning that having more noodles, I would price bread less. The Maxwell relation then tells us that it is exactly the same in the other direction: If I am given a little more bread, I would price noodles less. Not only that, I would want less by exactly the same amount.
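
The first Maxwell relation (3) is a real constraint on equations of state. The sketch below assumes the standard van der Waals equations of state, \(U = \frac 32 NT - aN^2/V\) and \(P = NT/(V - Nb) - aN^2/V^2\), with hypothetical parameter values and \(k_B = 1\). It checks that \((\partial_V \beta)_U = (\partial_U(\beta P))_V\) holds for the matched pair and fails for a deliberately mismatched pair.

```python
N, A, B = 1.0, 1.0, 0.1  # hypothetical van der Waals parameters, k_B = 1

def beta(U, V):
    """Thermal equation of state U = 1.5 N T - a N^2 / V, solved for 1/T."""
    return 1.5 * N / (U + A * N**2 / V)

def beta_ideal(U, V):
    """Ideal-gas thermal equation of state U = 1.5 N T, solved for 1/T."""
    return 1.5 * N / U

def beta_P(U, V, beta_fn=beta):
    """beta times the van der Waals pressure P = N T / (V - N b) - a N^2 / V^2."""
    return N / (V - N * B) - beta_fn(U, V) * A * N**2 / V**2

def beta_P_mismatched(U, V):
    """Van der Waals pressure paired with the ideal-gas energy equation."""
    return beta_P(U, V, beta_fn=beta_ideal)

def d_dU(fn, U, V, h=1e-5):
    return (fn(U + h, V) - fn(U - h, V)) / (2 * h)

def d_dV(fn, U, V, h=1e-5):
    return (fn(U, V + h) - fn(U, V - h)) / (2 * h)

U0, V0 = 2.0, 1.5  # arbitrary illustrative state

lhs = d_dV(beta, U0, V0)    # (d beta / dV) at fixed U
rhs = d_dU(beta_P, U0, V0)  # (d (beta P) / dU) at fixed V

lhs_bad = d_dV(beta_ideal, U0, V0)
rhs_bad = d_dU(beta_P_mismatched, U0, V0)
print(lhs, rhs, lhs_bad, rhs_bad)
```

The mismatched pair cannot come from any entropy function \(S(U, V)\), which is the thermodynamic counterpart of the falsifiable prediction that Samuelson drew from the symmetry of cross-price effects.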

The second relation is a bit hard to explain, since enthalpy really does not have a good representation in entropy-centric thermodynamics. However, it turns out to be just the third relation with \(i, j\) switched.

Since \(df = \beta P dV - Ud\beta\), we have the third Maxwell relation

\[ \partial_\beta\partial_V f = -(\partial_V U)_\beta = +(\partial_\beta(\beta P))_V \tag{5}\]

In economic language, we have

\[ \partial_{p_i}\partial_{q_j} \left[\max_{q_i}(S(q) - p_i q_i )\right]= -(\partial_{q_j} q_i)_{p_i} = (\partial_{p_i}p_j)_{q_j} \tag{6}\]

Continuing from the previous example, if \(i, j\) are noodles and bread, and we open a shop with an infinite amount of noodles always at the exact price, then I would buy and sell from the noodle shop until my marginal price of noodles is equal to the shop’s price. Now, \((\partial_{q_j} q_i)_{p_i}\) is how much noodles I would buy if I am given a marginal unit of bread. As noodles and bread are substitutional goods, this number is negative. This then means \((\partial_{p_i}p_j)_{q_j} > 0\), meaning that if the noodle price suddenly increases a bit, then I would sell a bit of noodles until I have reached equilibrium again. At that equilibrium, since I have less noodles, I would price higher its substitutional good, bread, by an equal amount as the previous scenario.
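
The noodle-shop scenario can be put into numbers. This sketch assumes a hypothetical utility \(S(q_1, q_2) = 2\sqrt{q_1} + 2\sqrt{q_2} - c\, q_1 q_2\), where the cross term makes the two goods substitutes. The closed forms in `demand_q1` and `price_p2` follow from \(p_i = \partial_{q_i} S\) for this particular utility only.

```python
C = 0.1  # hypothetical strength of substitution between the two goods

def demand_q1(p1, q2):
    """Noodles held when the shop price is p1: solves p1 = q1**-0.5 - C * q2 for q1."""
    return (p1 + C * q2) ** -2

def price_p2(p1, q2):
    """Marginal price of bread, p2 = q2**-0.5 - C * q1, at the noodle-shop equilibrium."""
    return q2 ** -0.5 - C * demand_q1(p1, q2)

p1, q2, h = 0.5, 1.0, 1e-5  # arbitrary illustrative state

# (d q_i / d q_j) at fixed p_i: noodles bought per marginal unit of bread received.
dq1_dq2 = (demand_q1(p1, q2 + h) - demand_q1(p1, q2 - h)) / (2 * h)

# (d p_j / d p_i) at fixed q_j: change in my bread price per unit rise in noodle price.
dp2_dp1 = (price_p2(p1 + h, q2) - price_p2(p1 - h, q2)) / (2 * h)
print(dq1_dq2, dp2_dp1)
```

The first number is negative and the second is its exact opposite, as equation (6) requires.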

Since \(dg = -Ud\beta - Vd(\beta P)\), we have the fourth Maxwell relation

\[ -\partial_{\beta}\partial_{\beta P}g = (\partial_{\beta P}U)_\beta = (\partial_\beta V)_{\beta P} \tag{7}\]

In economic language, we have

\[ \partial_{p_i}\partial_{p_j} \left[\max_{q_i, q_j}(S(q) - p_i q_i - p_j q_j)\right]= -(\partial_{p_j} q_i)_{p_i}= -(\partial_{p_i} q_j)_{p_j} \tag{8}\]

This is another symmetry of cross-price elasticity of demand.

Continuing from the previous example, if \(i, j\) are noodles and bread, and we open a shop with an infinite amount of noodles and bread, then I would of course buy and sell from the shop until my marginal prices of noodles and bread are equal to the shop’s prices. Now, if the shop suddenly raises the price of bread by a small amount, I would sell off some bread until my marginal price for bread increases to the shop’s new price. Now my marginal price for noodles increases too by substitutional effect, so I buy some noodles. Thus \((\partial_{p_j} q_i)_{p_i} > 0\). Switching the scenario, we find that raising the price of noodles would make me buy bread by an amount equal to that in the previous scenario.

We use the notation of economics here.

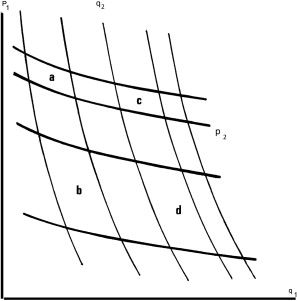

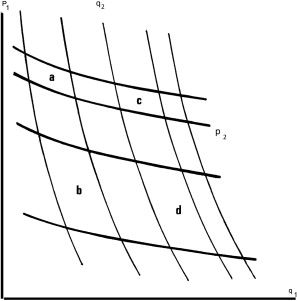

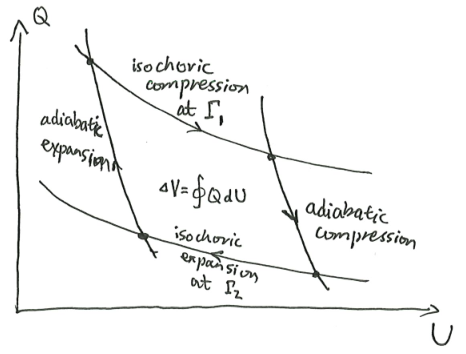

Suppose we have commodities \(1, 2, \dots, n\). We pick two commodities \(i, j\), and fix all other commodity quantities. Thus, we can write \(dS = p_i dq_i + p_j d q_j\).

Since knowing \(n\) properties of the thermodynamic system allows us to know its exact state, and we have already fixed \(n-2\) properties of it, there only remain two more degrees of freedom. We can parameterize this by \((q_i, q_j)\), or \((p_i, q_j)\), or \((p_i, p_j)\), or any other reasonable coordinate system.

If the thermodynamic system undergoes a cycle, then

\[0 = \oint dS = \oint p_i dq_i + \oint p_j dq_j\]

and thus, if we take the cycle infinitesimally small, we find that \(dp_i\wedge dq_i = -dp_j \wedge dq_j\). That is, the map \((p_i, q_i) \mapsto (p_j, q_j)\) preserves areas, but reverses orientation. In particular, we have a Jacobian \[ \frac{\partial(p_i, q_i)}{\partial(p_j, q_j)} = -1 \]

Now, let \((x, y)\) be an arbitrary coordinate transform. By the chain rule for Jacobians, \[ \frac{\partial(p_i, q_i)}{\partial(x, y)} = \frac{\partial(p_i, q_i)}{\partial(p_j, q_j)} \frac{\partial(p_j, q_j)}{\partial(x,y)} = -\frac{\partial(p_j, q_j)}{\partial(x,y)} \]

This allows us to derive all the Maxwell relations. For example, setting \((x, y) = (p_i, q_j)\) gives us the third relation

\[(\partial_{q_j} q_i)_{p_i} = -(\partial_{p_i}p_j)_{q_j}\]

Caratheodory’s thermodynamics

In the early 1900s, Constantin Caratheodory discovered a new way to “geometrize” thermodynamics, with the austere beauty of Euclidean geometry. Though his formulation fell into obscurity, it was reborn in neoclassical economics as utility theory.

Entropy and temperature

Consider a thermodynamic system with \(n+1\) dimensions of state space. Give the state space coordinates \(q_0, q_1, \dots, q_n\). For example, for a tank of ideal gas where the particle number is fixed, we have \(q_0 = U, q_1 = V\).

Let the system be at a certain state \(\vec q\), and wrap the system in a perfectly insulating (adiathermal) blanket. The system can undergo many different kinds of adiathermal motion, but there are certain motions that it cannot undergo.

For example, for a piston of ideal gas, the possible motions are adiabatic expansion, adiabatic compression, Joule expansion, and any combination of them. However, “Joule compression” is impossible – the gas will not spontaneously contract to the left half of the system, pulling in the piston head, any more than a messy room will spontaneously tidy itself.

We say that \(\vec q'\) is adiathermally accessible from \(\vec q\) if there exists a path from \(\vec q\) to \(\vec q'\), such that the path is infinitesimally adiathermal5 at every point.

5 The word “adiathermal” means “heat does not pass through”, while “adiabatic” has an entire history of meaning that makes it hard to say what exactly it is (see my essay on Analytical Mechanics). Personally, I think “adiabatic” means “zero entropy change”, and all its other meanings derive from it.

Here is Caratheodory’s version of the second law of thermodynamics:

- In any neighborhood of any point \(\vec q\), there are points adiathermally inaccessible from it.

- Furthermore, for any two points, \(\vec q, \vec q'\), at least one of them is adiathermally accessible from the other.

The effect of these two axioms is that we can define a total ordering \(\preceq\) on state space, where we write \(\vec q \preceq \vec q'\) to mean that \(\vec q\) can adiathermally access \(\vec q'\), and write \(\vec q \sim \vec q'\) to mean that they are mutually adiathermally accessible.

Interpreted economically, we say that the system is an economic agent, that each \(\vec q\) is a bundle of goods, that \(\vec q \preceq \vec q'\) means that \(\vec q'\) is preferable to the agent, and that \(\vec q \sim \vec q'\) means they are equally preferred.

The total ordering partitions the state space into contour surfaces of equal accessibility, or indifference surfaces. Assuming the state space is not designed to be pathological, these indifference surfaces will be differentiable.

Let us consider the indifference surface passing state \(\vec q\). The indifference surface is locally a plane, so it has equations

\[ dq_0 - \sum_i \tilde p_i dq_i = 0 \]

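where \(\tilde p_i\) is the partial derivative of \(q_0\) with respect to \(q_i\) along the indifference surface, with the other \(q_j\), \(j \neq i\), held fixed. For example, a tank of (non-ideal) gas satisfies \(dU + PdV = 0\) over its indifference curves, so \(\tilde p_V = -P\).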

Theorem 9 (existence and uniqueness of temperature and entropy) Let \(\omega = dq_0 - \sum_i \tilde p_i dq_i\). If \(\omega\) is nonzero everywhere, then there exist functions \(\beta, S\) on the state space, such that \(dS = \beta \omega\).

Furthermore, they are unique up to a monotonic transform. That is, if we have another solution \(\beta', S'\), then there exists a strictly monotonic function \(f\) such that \(S' = f \circ S\).

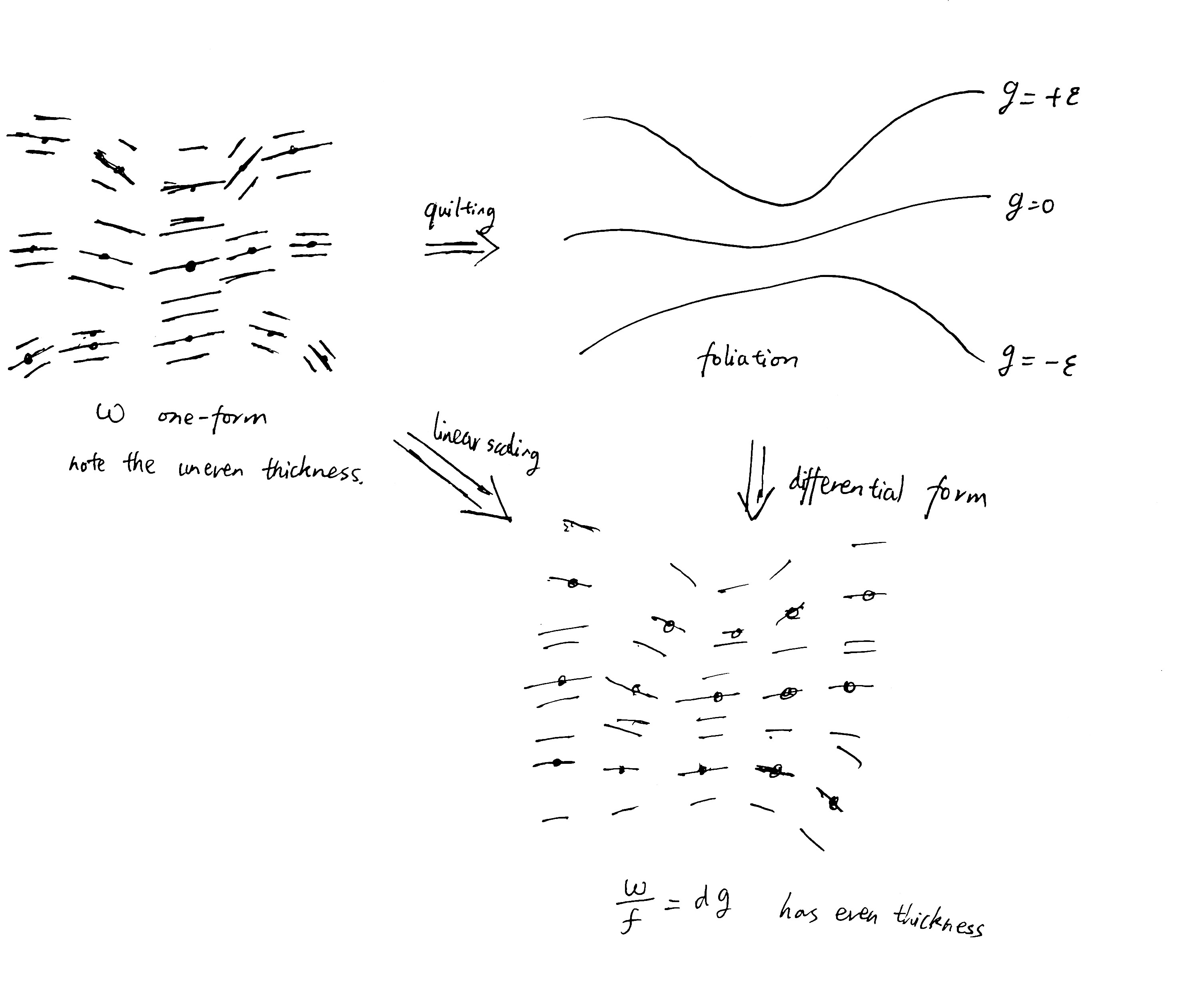

At each point \(\vec q\) the one-form \(\omega(\vec q)\) is visualized as a stack of parallel planes. The planes are quilted together, but with “uneven thickness”. By scaling the one-forms just right at every point, the thickness becomes equalized, and so \(\beta \omega = dS\) for two real-valued functions \(\beta, S\).

Given any other solution \(\beta', S'\), both \(S\) and \(S'\) must have the same contour lines, so there exists some function that maps the \(S\)-height of a contour line to its \(S'\)-height.

Cardinal and ordinal utilities

Economically speaking, \(\tilde p_i\) is the marginal worth of \(q_i\) denoted in units of \(q_0\). For example, we can say that \(q_0\) are cowry shells, which themselves are pretty and give us some utility. However, it can also be used as a monetary unit. Then, if \(i\) is bread, then \(\tilde p_i\) is the marginal amount of cowry shells that we would pay for a marginal amount of bread.

If we were to visit a free market where we can buy and sell items denoted in cowry shells, then we would buy bread if \(\tilde p_i > \tilde p_{i, market}\), and sell bread if \(\tilde p_i < \tilde p_{i, market}\). Right at the border of \(\tilde p_i = \tilde p_{i, market}\), we would be indifferent about buying or selling bread. When \(\tilde p_i = \tilde p_{i, market}\) for all \(i\), we would be completely indifferent about the market.

\(S\) is the utility, and \(\beta\) is the marginal utility of cowry shells. The theorem tells us that just by knowing how we order the goods (“\(S(\vec q) > S(\vec q')\)”), we can extract a numerical value for the goods (“\(S(\vec q) - S(\vec q') = 1.34(S(\vec q'') - S(\vec q'''))\)”). Out of ordinal utility, we have achieved cardinal utility.

There used to be a debate between “ordinalists” and “cardinalists” of utility theory. The “cardinalists” were the more venerable of the two camps, tracing back to Bentham’s felicific calculus and the marginalist revolution. They argued that utility is real-valued, like entropy and temperature. The “ordinalists” countered that nobody has ever measured a utility in anyone’s brain. The only thing we can observe is preferences: I prefer this over that – I can order everything that can ever happen to me on a numberless line of preferences. Similarly, nobody can ever actually measure temperature or entropy, only that energy flows from this gas to that gas, which presumably has lower temperature, and that one chunk of gas in one state ends up in another state, which presumably has higher entropy.

The debate has been mostly resolved by the work of Gérard Debreu, who showed that under fairly reasonable assumptions, cardinal utility is possible (Debreu 1971).6

6 Out of all those famous economists I have seen, Gérard Debreu is perhaps the most mathematically austere. Reading his works, I felt like he was another G. H. Hardy, a Bourbaki of economics. He did economics not to improve the world, not to help people, and not to advance a political agenda, but to simply uncover an ꙮmmatidium of eternity.

This theorem, or rather, this family of theorems, has several names, as befits such a versatile and productive family. In calculus, it’s called the integrability of Pfaffian forms. In differential geometry, it’s called Darboux’s theorem, or the Frobenius theorem. In economics, it’s called the integrability of demand, or the cardinal-ordinal utility representation theorem.

When cast in the language of economics, cowry shells are not special. We could denote prices in cowry shells, or cans of sardine, or grams of gold. That is, we are free to pick any numéraire we want, as long as we are consistent about it.

Similarly, energy is not special. For example, for an ideal gas, we could write the first law of thermodynamics as the conservation of energy, like

\[dU - (-P)dV = \beta^{-1}dS\]

or as the conservation of volume, like

\[dV - (-P^{-1})dU= (\beta P)^{-1}dS\]

and from the perspective of classical thermodynamics, there is no possibility of saying that energy is more special than volume. Energy is exactly as special as volume, and no more special than that.

When I realized this difference, I was so incensed at this mistake that I wrote an entire sci-fi worldbuilding sketch about an alien species, for which it is the conservation of volume that is fundamental, not energy, and which discovered stereodynamics, not thermodynamics.
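The equivalence of the energy form and the volume form of the first law can be checked numerically. The following is a minimal sketch, assuming a monatomic ideal gas in units where \(Nk = 1\), so that \(S = \ln V + \tfrac{3}{2}\ln U\) up to a constant. The function names and the sample state are illustrative choices, not part of the argument above.

```python
import math

def entropy(U, V):
    """Assumed ideal-gas entropy, S = ln V + 1.5 ln U (additive constant dropped)."""
    return math.log(V) + 1.5 * math.log(U)

def beta(U, V):
    """Marginal entropy of energy, beta = dS/dU."""
    return 1.5 / U

def pressure(U, V):
    """Pressure, from beta * P = dS/dV = 1/V."""
    return (1.0 / V) / beta(U, V)

def first_law_residuals(U, V, dU, dV):
    """Residuals of both forms of the first law for a small step (dU, dV)."""
    dS = entropy(U + dU, V + dV) - entropy(U, V)
    b, P = beta(U, V), pressure(U, V)
    energy_form = (dU + P * dV) - dS / b        # dU - (-P) dV = beta^{-1} dS
    volume_form = (dV + dU / P) - dS / (b * P)  # dV - (-1/P) dU = (beta P)^{-1} dS
    return energy_form, volume_form

e_res, v_res = first_law_residuals(U=3.0, V=2.0, dU=1e-6, dV=-2e-6)
print(e_res, v_res)  # both vanish to first order in the step size
```

Both residuals are of second order in the step, so neither form is singled out by the bookkeeping: the volume form is the energy form divided through by \(P\).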

Extensive entropy

While we have constructed the temperature \(T\) and the entropy \(S\) of an isolated system, they are not unique: we can stretch and compress the entropy function \(S\) arbitrarily by a monotonically increasing function, and as long as we modify the temperature function \(T\) just right, the two modifications cancel out, and we have \(TdS = T' dS'\).
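To make the cancellation concrete: if \(S' = f(S)\) for a strictly increasing \(f\), then \(dS' = f'(S)dS\), so choosing \(T' = T / f'(S)\) keeps \(T'dS' = TdS\). The sketch below checks this numerically for one hypothetical rescaling, \(f(S) = e^S\). The choice of \(f\) and the sample numbers are assumptions made purely for illustration.

```python
import math

def f(S):
    """Hypothetical strictly increasing rescaling of entropy, S' = exp(S)."""
    return math.exp(S)

def f_prime(S):
    """Derivative of the rescaling above."""
    return math.exp(S)

def heat_elements(T, S, dS):
    """Return (T dS, T' dS') for a small entropy change dS."""
    original = T * dS
    T_new = T / f_prime(S)        # the compensating change of temperature
    dS_new = f(S + dS) - f(S)     # the change in the rescaled entropy
    rescaled = T_new * dS_new
    return original, rescaled

original, rescaled = heat_elements(T=300.0, S=2.0, dS=1e-7)
print(original, rescaled)  # agree to first order in dS
```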

In order to uniquely fix an entropy function, we need further assumptions. The most commonly used method is to consider what happens to the entropy of a compound system. In general, there is no reason to expect entropy to be extensive, that is, to expect that when we compound two systems together, the entropy of the compound system is the sum of the entropies of the two subsystems. However, if we make some assumptions on “rationality”, then the entropy would be uniquely fixed, and would be extensive.

Exercise 5 (von Neumann–Morgenstern entropy construction) Study the statement of the von Neumann–Morgenstern utility theorem, and translate it to thermodynamics. It should be of the following form:

Assuming that the adiabatic accessibility of any compound system satisfies the following properties

- …

- …

…

then the entropy of any compound system is the sum of the entropies of its subsystems, and the entropy function is unique up to adding a constant and multiplying by a positive scalar.

Some hints:

- It might help your intuition if you anthropomorphize Nature as a “vNM-rational agent”.

- The standard formulation of the vNM theorem uses lotteries of form \(pM + (1-p)N\), where \(p\in (0, 1)\) is a probability, and \(M, N\) are bundles of goods. However, it is impossible generally to “take \(0.37\) of a system \(M\) and compound it with \(0.63\) of system \(N\)”. To bypass this difficulty, replace that with “take \(37\) copies of system \(M\) and compound them with \(63\) copies of system \(N\)”.

Bonus: Geometric thermodynamics

Although geometrical representations of propositions in the thermodynamics of fluids are in general use, and have done good service in disseminating clear notions in this science, yet they have by no means received the extension in respect to variety and generality of which they are capable.

Contact geometry

Every mathematician knows it is impossible to understand an elementary course in thermodynamics. The reason is that thermodynamics is based – as Gibbs has explicitly proclaimed – on a rather complicated mathematical theory, on the contact geometry. Contact geometry is one of the few ‘simple geometries’ of the so-called Cartan’s list, but it is still mostly unknown to the physicist – unlike the Riemannian geometry and the symplectic or Poisson geometries, whose fundamental role in physics is today generally accepted.

V.I. Arnol’d (Caldi et al. 1990, 163)

To explain this mysterious remark, we take a plunge into abstraction. We know that a real gas has properties \(P, V, T, S, \dots\), and that they satisfy the differential equation:

\[ dS = \beta dU + \beta PdV \]

To clean up the notation, we rename \(q_1 = U\), \(q_2 = V\), \(p_1 = \beta\), \(p_2 = \beta P\), so that the equation becomes

\[ dS = p_1 dq_1 + p_2 dq_2 \]

This formula has a clear interpretation in economics: if the marginal utility of commodity \(1\) is \(p_1\), and the marginal utility of commodity \(2\) is \(p_2\), then if we receive \(\delta q_1, \delta q_2\), our utility would increase by \(p_1 \delta q_1 + p_2 \delta q_2\).

The difficult thing about classical thermodynamics is that there are so many quantities, such as \(T, V, N, \dots\). The saving grace is that it turns out that there are only a few degrees of freedom.7

7 The Parthian shot is that now you are burdened with dozens of equations relating these quantities. I cannot remember any of the Maxwell relations, so I look at Wikipedia every time I need to calculate with them.

However, why is it that a macroscopic lump of matter, whirling with \(10^{23}\) molecules, turns out to be characterized by only a few degrees of freedom? Why is it that a national economy, swarming with \(10^8\) people, has macroeconomic laws? For the first question, the answer is given by classical thermodynamics: the lump of matter is maximizing its entropy under constraints, so its degrees of freedom are exactly as many as the number of constraints it is laboring under. For the second question, the answer is given by neoclassical economics: the national economy behaves as if it is maximizing a social utility function under its resource constraints.

With that brief look at philosophy, we return to abstract thermodynamics. We have a lump of matter (such as ideal gas in a piston), of which we can measure five different properties: \(p_1, p_2, q_1, q_2, S\). In general, we expect that the space of possible measurements is 5-dimensional, but it turns out that they collapse down to a 2-dimensional curved surface. This is why we could completely fix its state knowing just its \(V, U\), or just its \(P, T\), etc.
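As a concrete illustration of the collapse from five measurements to two coordinates, here is a minimal sketch. It assumes the ideal-gas entropy \(S = \ln V + \tfrac{3}{2}\ln U\) (units with \(Nk = 1\), constant dropped). Under that assumption, all five quantities follow from \((U, V)\), and the same state can be recovered from \((P, T)\). The function names are illustrative.

```python
import math

def state_from_UV(U, V):
    """All five quantities (q1, q2, p1, p2, S) from the two coordinates (U, V)."""
    S = math.log(V) + 1.5 * math.log(U)   # assumed ideal-gas entropy
    p1 = 1.5 / U                          # beta = dS/dU
    p2 = 1.0 / V                          # beta * P = dS/dV
    return {"q1": U, "q2": V, "p1": p1, "p2": p2, "S": S}

def state_from_PT(P, T):
    """The same state, reached from the coordinates (P, T) instead."""
    U = 1.5 * T      # from beta = 1/T = 1.5/U
    V = T / P        # from beta * P = 1/V
    return state_from_UV(U, V)

a = state_from_UV(3.0, 2.0)
T = 1.0 / a["p1"]          # temperature is the inverse of p1 = beta
P = a["p2"] / a["p1"]      # pressure is the ratio p2 / p1
b = state_from_PT(P, T)
print(a)
print(b)  # the same point on the 2-dimensional surface
```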

The question now arises: Why is it possible to collapse things down to this curved surface?

Things are already interesting when we have just one commodity:

\[dS - pdq = 0\]

and we ask: Why is it possible to collapse the space of \((q, p, S)\) from 3 to 1 dimension?

In modern geometry, an expression like \(dS - p dq = 0\) defines a field of planes in \(\mathbb{R}^3\). That is, at each point \((q, p, S)\), we construct a plane defined by

\[ \{(q + \delta q, p + \delta p, S + \delta S): \delta S - p \delta q = 0\} \]

For example, in three dimensions, the field of planes \(dS - pdq = 0\) would look like it is constantly twisting as \(p\) increases. The study of geometric structures definable via the field of planes is contact geometry.8

8 This explanation might sound like an anticlimax, and I imagine someone would object “Thermodynamics is reduced to contact geometry… but what is contact geometry?”. My answer is that contact geometry simply is. It is not supposed to be a generator of intuitions. Instead, it is a common language that bridges between intuitions. By casting thermodynamics, economics, mechanics, etc, into the language of contact geometry, we would then be able to translate intuition from one field to another field. Saying that “thermodynamics is contact geometry” is not telling you an intuitive way to see thermodynamics, but rather, an intuitive way to see contact geometry.

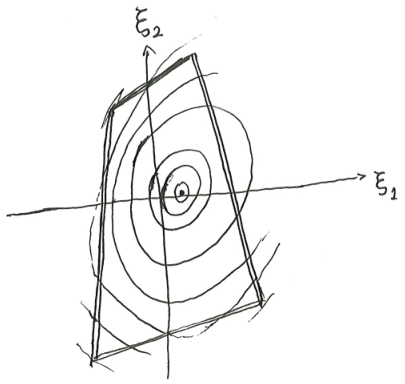

Given such a field of planes \(dS - \sum_{i=1}^n p_i dq_i = 0\) in \(\mathbb{R}^{2n+1}\), we say that a manifold is a Legendrian submanifold iff the manifold has \(n\) dimensions, and is tangent to the field of planes at every point.

For example, when \(n=1\), a Legendrian submanifold is a curve that winds around \(\mathbb{R}^3\) and is tangent to the plane field at every point.
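The \(n=1\) case can be checked numerically. The sketch below evaluates the form \(dS - p\,dq\) on the tangent vector of a curve in \((q, p, S)\) space. It assumes the hypothetical function \(S(q) = \ln q\): lifting its graph with \(p = S'(q)\) gives a Legendrian curve, while a curve with the wrong \(p\) is not tangent to the planes. All function names are illustrative.

```python
import math

def contact_form(point, tangent_vec):
    """Evaluate dS - p dq on a tangent vector at a point of (q, p, S) space."""
    q, p, S = point
    dq, dp, dS = tangent_vec
    return dS - p * dq

def lifted_curve(q):
    """Lift the graph of S(q) = ln q by setting p equal to the slope S'(q) = 1/q."""
    return (q, 1.0 / q, math.log(q))

def unlifted_curve(q):
    """Same q and S, but with p fixed at 2 instead of the slope, for comparison."""
    return (q, 2.0, math.log(q))

def tangent(curve, q, h=1e-6):
    """Central-difference tangent vector of a curve parameterized by q."""
    lo, hi = curve(q - h), curve(q + h)
    return tuple((y - x) / (2 * h) for x, y in zip(lo, hi))

legendrian_value = contact_form(lifted_curve(0.5), tangent(lifted_curve, 0.5))
generic_value = contact_form(unlifted_curve(1.0), tangent(unlifted_curve, 1.0))
print(legendrian_value)  # approximately zero: tangent to the plane field
print(generic_value)     # approximately -1: crosses the plane field
```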

Let \(S(q)\) be a differentiable function. We can interpret \(S(q)\) as the amount of money we can earn if we produce something using the bundle of raw materials \((q_1, \dots, q_n)\).

Given any market price for the raw materials, \(q^* = \mathop{\mathrm{argmax}}_q (S(q) - \braket{p, q})\) is the profit-maximizing production plan, and \(\Pi(p) = \max_q (S(q) - \braket{p, q})\) is the profit.

Theorem 10 The “profit-maximization surface” defined by \(p \mapsto (q^*, p, S(q^*))\) is a Legendrian submanifold.

Conversely, for any Legendrian submanifold parameterized by \(p \mapsto (q(p), p, S(q(p)) )\), the point \(q(p)\) is profit-stationarizing. That is,

\[\nabla_q (S(q) - \braket{p, q}) = 0\]

at \(q(p)\). If \(S\) is strictly concave, then \(q(p)\) is profit-maximizing.

The first part is proven by Hotelling’s lemma.

The second part is proven by plugging in \(dS - \sum_i p_i dq_i = 0\). And if \(S\) is strictly concave, then \(q \mapsto S(q) - \braket{p, q}\) is also strictly concave, and so zero gradient implies global maximum.
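Both parts can be checked on a toy example. The sketch below assumes a hypothetical strictly concave revenue function \(S(q) = 2\sqrt{q}\) with a single input, for which the first-order condition \(S'(q) = p\) gives \(q^* = 1/p^2\) and \(\Pi(p) = 1/p\). It then verifies Hotelling’s lemma, \(\Pi'(p) = -q^*\), and the Legendrian condition \(dS - p\,dq = 0\) along the surface, by finite differences.

```python
import math

def S(q):
    """Hypothetical strictly concave revenue function (an assumption for this sketch)."""
    return 2.0 * math.sqrt(q)

def q_star(p):
    """Profit-maximizing input: solves S'(q) = 1/sqrt(q) = p."""
    return 1.0 / p**2

def profit(p):
    """Maximal profit Pi(p) = S(q*) - p q*."""
    q = q_star(p)
    return S(q) - p * q

def derivative(f, x, h=1e-5):
    """Central-difference derivative of a one-variable function."""
    return (f(x + h) - f(x - h)) / (2 * h)

p = 0.8
# Hotelling's lemma: dPi/dp = -q*
hotelling_gap = derivative(profit, p) + q_star(p)
# Legendrian condition along p -> (q*(p), p, S(q*(p))): dS/dp = p dq*/dp
legendrian_gap = derivative(lambda t: S(q_star(t)), p) - p * derivative(q_star, p)
print(hotelling_gap, legendrian_gap)  # both approximately zero
```

Because \(S\) is strictly concave here, the stationary point is a global maximum: any other \(q\) gives strictly less profit at the same price.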

Economically speaking, \(\max_q (S(q) - \braket{p, q})\) means to maximize profit. What does it mean, thermodynamically speaking? It means minimizing \(\braket{p, q} - S(q)\), which is the Gibbs free entropy! Maximizing profit when a factory has access to a market is the same as minimizing Gibbs free entropy when a system is in contact with a bath.

Samuelson’s area-ratio thermodynamics

Philosophy

In Paul Samuelson’s Nobel prize lecture of 1970, among comments on classical mechanics, variational principles, and neoclassical economics, he said something curious about the analogy between classical thermodynamics and neoclassical economics:

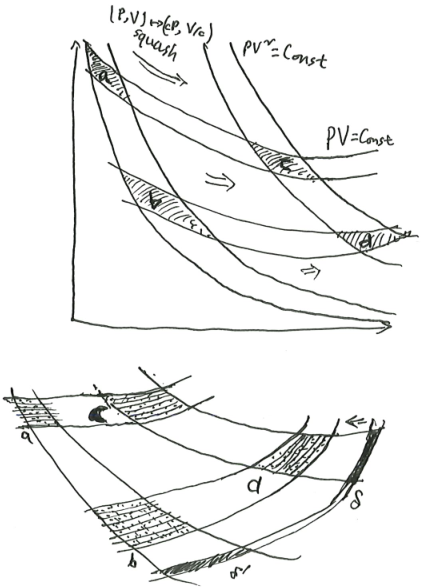

However, if you look upon the monopolistic firm hiring 99 inputs as an example of a maximum system, you can connect up its structural relations with those that prevail for an entropy-maximizing thermodynamic system. Pressure and volume, and for that matter absolute temperature and entropy, have to each other the same conjugate or dualistic relation that the wage rate has to labor or the land rent has to acres of land. Figure 2 can now do double duty, depicting the economic relationships as well as the thermodynamic ones.

If someone challenged me to explain what the existence of [utility] implies, but refused to let me use the language of partial derivatives, I could illustrate by an equi-proportional area property… I may say that the idea for this proposition in economics came to me in connection with some amateurish researches in the field of thermodynamics. While reading Clerk Maxwell’s charming introduction to thermodynamics…

This intriguing little remark piqued my interest, and after a little digging, I figured it out.9

9 Based on research by James Bell Cooper, who seems to be the world expert in this obscure field (Cooper and Russell 2006; Cooper, Russell, and Samuelson 2001).

Samuelson is alluding to a deep problem in economic theory: Nobody has ever seen a utility function, any more than anybody has ever seen an entropy. If this is the case, then how do we know that agents are maximizing a utility, or that systems are maximizing an entropy? In his long career, he searched for many ways to answer this, coming down to the idea that, even though we cannot measure utility, we can measure many things, such as how firms respond to prices. Given some measurable quantities, we can then prove, mathematically, that something is being maximized. At that point, we can simply call that something “utility”, and continue doing economics as usual.

Philosophically, Samuelson was greatly influenced by operationalism, a philosophy of science akin to logical positivism. As stated by the definitive work on operationalism, “we mean by any concept nothing more than a set of operations; the concept is synonymous with the corresponding set of operations” (Bridgman 1927).

In his early work, particularly Foundations of Economic Analysis (1947) and the development of revealed preference theory (1938), Samuelson embraced operationalism as a means of purging economics of non-observable, and thus scientifically meaningless, concepts like utility. He sought to rebase economic theory on purely observable behavior and measurable quantities. Revealed preference theory, for instance, would eliminate utility functions, and derive a consumer’s preference ordering directly from observable consumer choices at different price levels.

Over time, Samuelson’s stance on operationalism softened to a more pragmatic approach, recognizing the value of unobservable concepts as theoretical tools, as long as they can be based on direct observables.

For example, while Samuelson initially sought to eliminate utility functions, he later argued that even if utility is not directly observable, it can be uniquely determined from observing agents’ preferences, by invoking some utility representation theorems – provided that the preferences satisfy certain properties (Samuelson 1999).

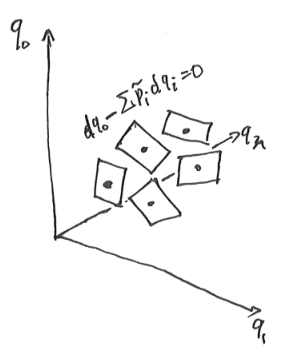

Area ratio construction

Theorem 11 (area ratio law implies a new coordinate system) Consider an open rectangle \(R\) in the plane \(\mathbb{R}^2\). Let there be two families of curves

Suppose that each curvy parallelogram formed by the two families is contained in \(R\), and that the curves satisfy the area-ratio rule. Then we can define another coordinate system \((z, w)\) on \(\mathbb{R}^2\), such that the coordinate system preserves areas:

\[ dx \wedge dy = dz \wedge dw \]

and such that the two families of curves are the constant-\(z\) and constant-\(w\) curves.

The coordinate system is unique up to an affine squashing transform, that is, \((z, w) \mapsto (cz + d, w/c + e)\) for some constants \(c, d, e\) with \(c \neq 0\).
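A familiar instance of such an area-preserving coordinate system comes from thermodynamics itself. For an ideal gas, with \((x, y) = (P, V)\), the isotherms and adiabats form two families of curves, and \((z, w) = (T, S)\) satisfies \(dP \wedge dV = dT \wedge dS\). The sketch below checks this numerically, assuming units with \(Nk = 1\) and a heat capacity \(C_V = 3/2\). It also checks that the squashing transform leaves the area form unchanged. The constants and function names are illustrative assumptions.

```python
import math

C_V = 1.5  # assumed heat capacity, in units where N k = 1

def TS_from_PV(P, V):
    """Ideal-gas temperature and entropy (constant dropped) as functions of (P, V)."""
    T = P * V
    S = math.log(V) + C_V * math.log(T)
    return T, S

def squash(z, w, c=2.0, d=0.3, e=-1.0):
    """The affine squashing transform (z, w) -> (c z + d, w / c + e)."""
    return c * z + d, w / c + e

def jacobian_det(f, x, y, h=1e-6):
    """Central-difference Jacobian determinant of a map f(x, y) -> (z, w)."""
    fx = [(a - b) / (2 * h) for a, b in zip(f(x + h, y), f(x - h, y))]
    fy = [(a - b) / (2 * h) for a, b in zip(f(x, y + h), f(x, y - h))]
    return fx[0] * fy[1] - fy[0] * fx[1]

det_TS = jacobian_det(TS_from_PV, 1.3, 0.7)
det_squashed = jacobian_det(lambda P, V: squash(*TS_from_PV(P, V)), 1.3, 0.7)
print(det_TS, det_squashed)  # both approximately 1: areas are preserved
```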

Fix an arbitrary point as \((z, w) = (0, 0)\). Pick an arbitrary curve in one of the families that does not pass through the point \((0, 0)\), and call it the \(w=1\) line. By continuity, there exists a unique curve in the other family such that the curved parallelogram they form with the two curves through \((0, 0)\) has unit area. Call that other curve the \(z=1\) line. Now we can label the four corners of this parallelogram as \((z, w) = (0, 0), (0, 1), (1, 0), (1, 1)\).

For any other point, its \((z, w)\) coordinates can be constructed as shown in the picture, with

\[z = a+b, \quad w = b+d\]